Resources

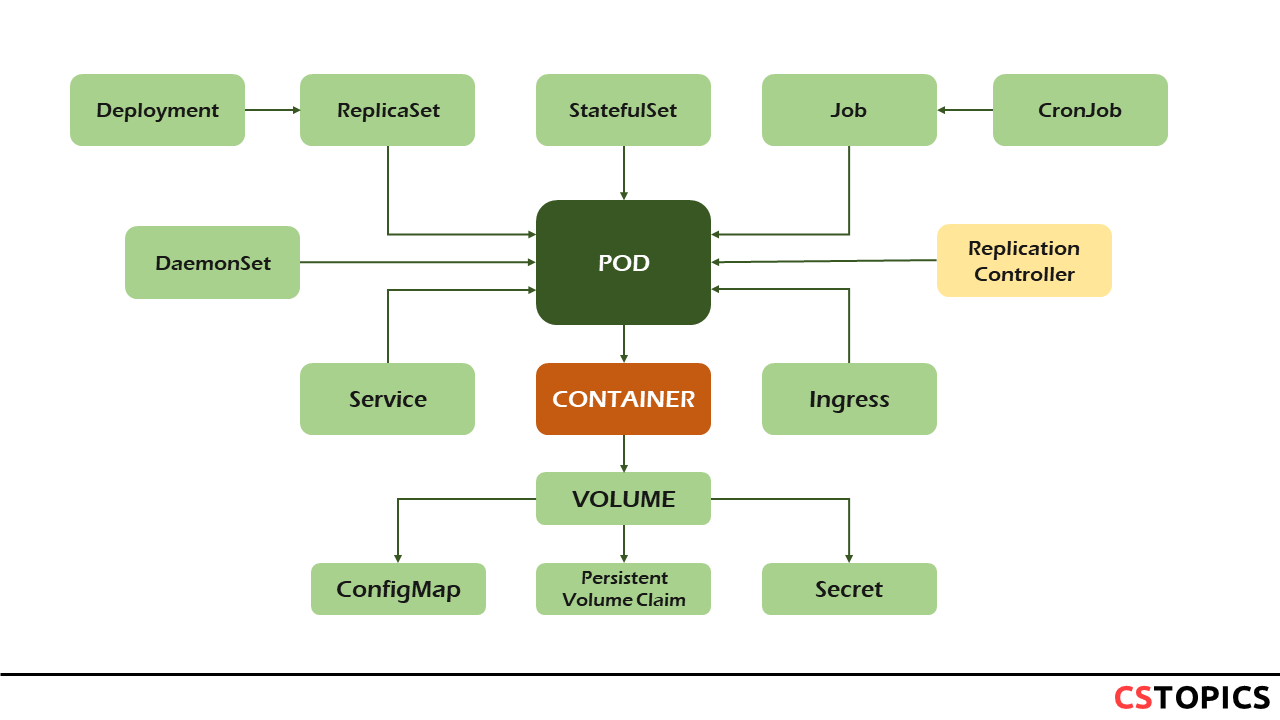

This is a high-level overview of the basic types of resources provided by the Kubernetes API and their primary functions:

- Workloads are objects you use to manage and run your containers on the cluster.

- Services & Load Balancing resources are objects you use to "stitch" your workloads together into an externally accessible, load-balanced Service.

- Config & Storage resources are objects you use to inject initialization data into your applications, and to persist data that is external to your container.

- Cluster resource objects define how the cluster itself is configured; these are typically used only by cluster operators.

- Metadata resources are objects you use to configure the behavior of other resources within the cluster, such as HorizontalPodAutoscaler for scaling workloads.
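As an illustration of a resource that configures another resource, here is a minimal sketch of a HorizontalPodAutoscaler using the autoscaling/v2 API. It assumes a Deployment named web already exists (a hypothetical name), that its Pods declare CPU requests (utilization is measured against requests), and that a metrics source such as metrics-server is installed. The replica bounds and the 70% target are arbitrary example values.

```yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-hpa              # hypothetical name
spec:
  scaleTargetRef:            # the workload whose replica count this object controls
    apiVersion: apps/v1
    kind: Deployment
    name: web                # hypothetical Deployment, assumed to exist
  minReplicas: 2             # example lower bound
  maxReplicas: 5             # example upper bound
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 70   # example target: 70% of requested CPU
```

The HorizontalPodAutoscaler runs no containers itself; it only adjusts the replica count of the Deployment it points at.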

Pods

Pods are the smallest deployable units of computing that you can create and manage in Kubernetes.

A Pod (as in a pod of whales or pea pod) is a group of one or more containers, with shared storage/network resources, and a specification for how to run the containers. A Pod's contents are always co-located and co-scheduled, and run in a shared context. A Pod models an application-specific "logical host": it contains one or more application containers which are relatively tightly coupled. In non-cloud contexts, applications executed on the same physical or virtual machine are analogous to cloud applications executed on the same logical host.

The shared context of a Pod is a set of Linux namespaces, cgroups, and potentially other facets of isolation: the same things that isolate a Docker container. Within a Pod's context, the individual applications may have further sub-isolations applied.

In terms of Docker concepts, a Pod is similar to a group of Docker containers with shared namespaces and shared filesystem volumes.
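To make the shared network namespace concrete, here is a minimal sketch of a two-container Pod in which a client container reaches an nginx container on localhost, with no Service involved. The Pod and container names and the nginx:1.25 and busybox:1.36 images are assumptions chosen for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: shared-net-demo      # hypothetical name
spec:
  containers:
  - name: web
    image: nginx:1.25        # assumed image; nginx listens on port 80 by default
    ports:
    - containerPort: 80
  - name: client
    image: busybox:1.36      # assumed image
    # Both containers share the Pod's network namespace,
    # so "localhost" here is the same interface nginx listens on.
    command: ["sh", "-c"]
    args:
    - |
      while true; do
        wget -qO- http://localhost:80 > /dev/null && echo "reached nginx"
        sleep 10
      done
```

Once the Pod is running, kubectl logs shared-net-demo -c client would be expected to print "reached nginx" lines.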

Using Pods

Usually you don't need to create Pods directly, even singleton Pods. Instead, create them using workload resources such as Deployment or Job. If your Pods need to track state, consider the StatefulSet resource.

Pods in a Kubernetes cluster are used in two main ways:

- Pods that run a single container. The "one-container-per-Pod" model is the most common Kubernetes use case. In this case, you can think of a Pod as a wrapper around a single container; Kubernetes manages Pods rather than managing the containers directly.

- Pods that run multiple containers that need to work together. A Pod can encapsulate an application composed of multiple co-located containers that are tightly coupled and need to share resources. These co-located containers form a single cohesive unit of service. For example, one container might serve data stored in a shared volume to the public, while a separate sidecar container refreshes or updates those files. The Pod wraps these containers, storage resources, and an ephemeral network identity together as a single unit.
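The multi-container pattern can be sketched as a Pod whose nginx container serves files from a shared emptyDir volume while a busybox sidecar rewrites those files. The names, images, mount paths, and the 30-second refresh interval are all assumptions for illustration.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: web-with-sidecar     # hypothetical name
spec:
  volumes:
  - name: shared-content     # scratch volume that lives as long as the Pod
    emptyDir: {}
  containers:
  - name: web                # serves whatever is in the shared volume
    image: nginx:1.25        # assumed image
    ports:
    - containerPort: 80
    volumeMounts:
    - name: shared-content
      mountPath: /usr/share/nginx/html
  - name: content-refresher  # sidecar that updates the served files
    image: busybox:1.36      # assumed image
    command: ["sh", "-c"]
    args:
    - |
      while true; do
        date > /content/index.html
        sleep 30
      done
    volumeMounts:
    - name: shared-content   # same volume, mounted at a different path
      mountPath: /content
```

Both containers see the same files because they mount the same volume; the mount path can differ per container.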

Grouping multiple co-located and co-managed containers in a single Pod is a relatively advanced use case. You should use this pattern only in specific instances in which your containers are tightly coupled.

Workloads

A workload is an application running on Kubernetes. Whether your workload is a single component or several that work together, on Kubernetes you run it inside a set of Pods. In Kubernetes, a Pod represents a set of running containers on your cluster.

A Pod has a defined lifecycle. For example, once a Pod is running in your cluster, a critical failure on the node where that Pod is running means that all the Pods on that node fail. Kubernetes treats that level of failure as final: you would need to create a new Pod even if the node later recovers.

However, to make life considerably easier, you don't need to manage each Pod directly. Instead, you can use workload resources that manage a set of Pods on your behalf. These resources configure controllers that make sure the right number of the right kind of Pod are running, to match the state you specified.
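A minimal sketch of such a workload resource: this Deployment asks its controller to keep three replicas of an nginx Pod running, so a Pod that is deleted or lost with its node is replaced from the Pod template. The name web, the app: web label, and the nginx:1.25 image are illustrative assumptions.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web                  # hypothetical name
spec:
  replicas: 3                # desired number of Pods the controller maintains
  selector:
    matchLabels:
      app: web               # must match the Pod template labels below
  template:                  # blueprint for every Pod this Deployment creates
    metadata:
      labels:
        app: web
    spec:
      containers:
      - name: web
        image: nginx:1.25    # assumed image
        ports:
        - containerPort: 80
```

The Deployment creates a ReplicaSet, and the ReplicaSet creates and replaces the individual Pods.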

The built-in workload resources include:

- Deployment and ReplicaSet (replacing the legacy resource ReplicationController)

- StatefulSet

- DaemonSet for running Pods that provide node-local facilities, such as a storage driver or network plugin

- Job and CronJob for tasks that run to completion
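For tasks that run to completion, here is a sketch of a Job and a CronJob in one multi-document file. The names, the busybox:1.36 image, the shell commands, and the five-minute schedule are assumptions for illustration.

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: one-off-task         # hypothetical name
spec:
  backoffLimit: 3            # give up after 3 retries of a failed Pod
  template:
    spec:
      restartPolicy: Never   # Job Pods must use Never or OnFailure
      containers:
      - name: task
        image: busybox:1.36  # assumed image
        command: ["sh", "-c", "echo processing; sleep 5; echo done"]
---
apiVersion: batch/v1
kind: CronJob
metadata:
  name: periodic-task        # hypothetical name
spec:
  schedule: "*/5 * * * *"    # standard cron syntax: every 5 minutes
  jobTemplate:               # a new Job is created from this on each run
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
          - name: task
            image: busybox:1.36   # assumed image
            command: ["sh", "-c", "date; echo periodic run"]
```

Unlike a Deployment, a Job's Pods are expected to exit; the Job counts successful completions instead of keeping Pods running.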

Exercise

Deploy the Node.js application from the docker-container-lifecycle example using the following two object YAML files:

nodejs-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: nodejs-app
  labels:
    run: nodejs-app
spec:
  containers:
  - name: nodejs
    image: hellojs
    imagePullPolicy: IfNotPresent
    ports:
    - containerPort: 8080

nodejs-srv.yaml

apiVersion: v1
kind: Service
metadata:
  name: nodejs-svc
spec:
  selector:
    run: nodejs-app
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080

$ kubectl apply -f nodejs-pod.yaml
$ kubectl get pods
NAME         READY   STATUS    RESTARTS   AGE
nodejs-app   1/1     Running   0          25s

$ kubectl apply -f nodejs-srv.yaml
$ kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP    7h25m
nodejs-svc   ClusterIP   10.107.19.196   <none>        8080/TCP   25m

$ kubectl proxy

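With kubectl proxy running, open the following URL in a browser to reach the application through the API server's proxy to nodejs-svc:

http://127.0.0.1:8001/api/v1/namespaces/default/services/nodejs-svc/proxy/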
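kubectl proxy is convenient for a quick check. As an alternative way to reach the same Pod from outside the cluster, here is a sketch of a NodePort variant of nodejs-svc. The name nodejs-svc-nodeport and the nodePort value 30080 are assumptions; the nodePort must fall within the cluster's NodePort range (30000-32767 by default).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nodejs-svc-nodeport  # hypothetical name
spec:
  type: NodePort             # also opens a port on every node
  selector:
    run: nodejs-app          # same label as the nodejs-app Pod
  ports:
  - protocol: TCP
    port: 8080               # cluster-internal Service port
    targetPort: 8080         # containerPort of the nodejs container
    nodePort: 30080          # assumed value within the default range
```

The application would then be reachable at port 30080 on a node's IP address (with minikube, for example, minikube ip prints that address).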