Image Processing

Image Processing can be defined as the manipulation and analysis of images to enhance their quality, extract relevant information, or interpret their content. It encompasses a diverse range of tasks, from basic operations like resizing and color correction to complex operations like object recognition and scene understanding. Image Processing techniques are employed to clean noisy images, detect and track objects, extract features, and perform various other operations critical for computer vision applications.

Image Representation

Image processing hinges upon the concept of image representation. Image representation involves how visual data, in the form of images, is structured and encoded digitally for manipulation and analysis. Understanding image representation is pivotal for unlocking the potential of image processing techniques. Here, we delve into the intricacies of image representation in this context.

Image representation forms the bedrock of image processing. It defines how visual information is structured, stored, and manipulated. The choice of representation depends on the specific application, the desired level of detail, and the available resources. A nuanced understanding of image representation is essential for anyone working with digital images, whether it be for basic editing, complex computer vision tasks, or artistic endeavors.

Pixels

The input device, such as a camera or scanner, performs the vital task of converting the incoming image into a digital format. This digital data is commonly organized into a matrix structure. Within this matrix, each element serves as a representation of a pixel, a fundamental unit of the image. Each pixel is encoded as a numerical value, which serves a dual purpose: it indicates the pixel's brightness and its color properties.

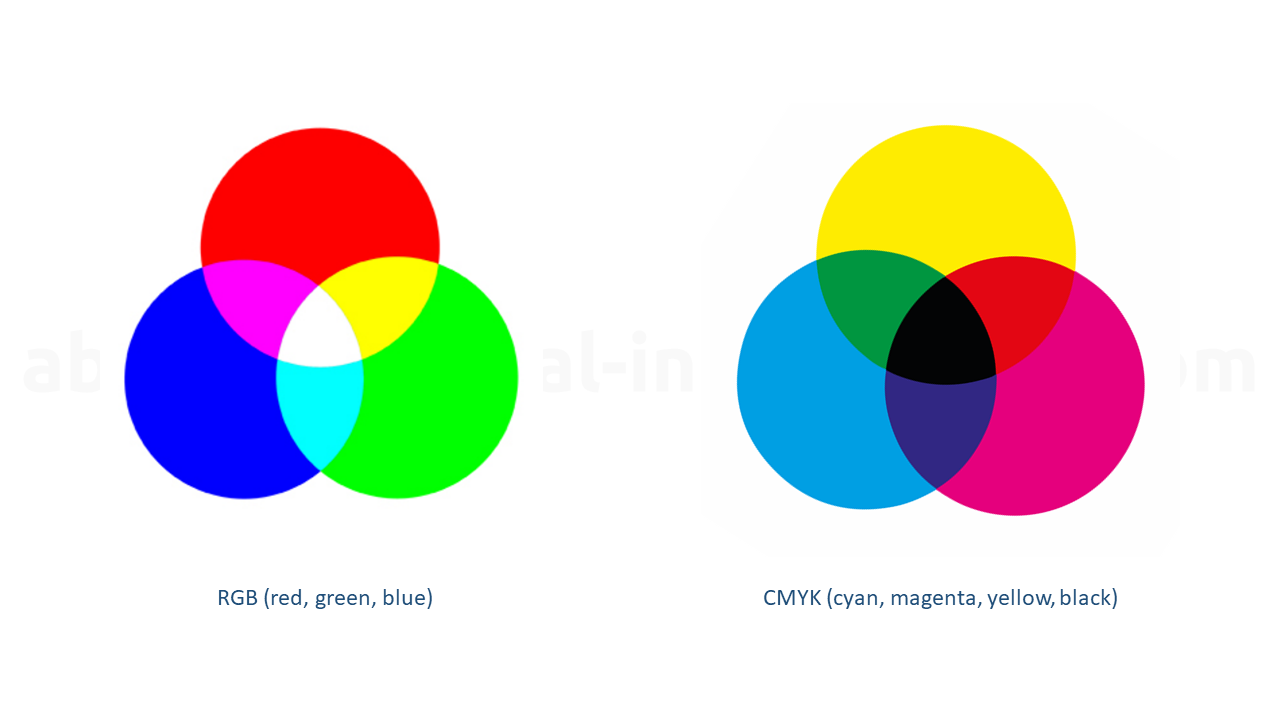

For color images, pixels are typically expressed using a combination of three or four numerical values, corresponding to two primary color models: RGB (Red, Green, and Blue) and CMYK (Cyan, Magenta, Yellow, and Black). In the RGB model, each value represents the intensity of the respective color channel, resulting in a broad spectrum of colors. On the other hand, CMYK is commonly used for color printing and represents colors through the combination of cyan, magenta, yellow, and black inks.

Grayscale images are simpler. Each pixel is represented by a single numerical value that gives its brightness level. This reduces the image to shades of gray, with higher values indicating brighter areas and lower values corresponding to darker regions.

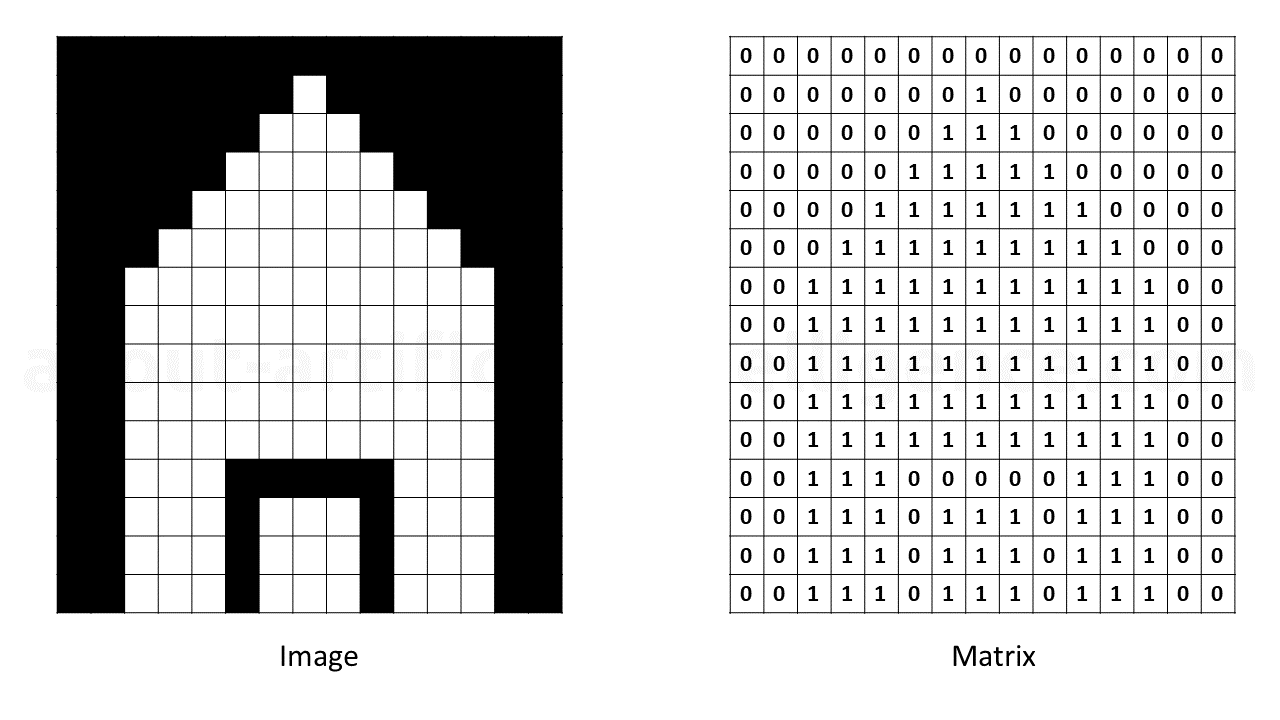

In the simplest case, an image contains only two colors, typically black and white. Each pixel in such a binary image takes one of two values, representing either black or white, so a single bit per pixel is sufficient. @fig:matrix-representation-of-a-black-white-image illustrates this representation by showing the matrix structure of an example black-and-white image.
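As a minimal sketch of the three representations described above, the following Python snippet builds a tiny binary, grayscale, and RGB image as nested lists. The pixel values and the helper `shape` are invented for illustration; in practice images are usually loaded with an imaging library rather than typed in by hand.

```python
# Illustrative 2x3 images stored as row-major nested lists (values are made up).

# Binary image: each pixel is one of two values, 0 (black) or 1 (white).
binary = [
    [0, 1, 0],
    [1, 1, 0],
]

# Grayscale image: one brightness value per pixel, from 0 (dark) to 255 (bright).
gray = [
    [0, 128, 255],
    [64, 192, 32],
]

# RGB image: three channel intensities (red, green, blue) per pixel.
rgb = [
    [(255, 0, 0), (0, 255, 0), (0, 0, 255)],
    [(255, 255, 255), (0, 0, 0), (128, 128, 128)],
]


def shape(image):
    """Return (height, width) of a row-major nested-list image."""
    return len(image), len(image[0])


print(shape(gray))   # number of rows and columns
print(gray[0][2])    # brightness of the pixel in row 0, column 2
print(rgb[0][0])     # channel triple of the top-left pixel
```

Indexing is `image[row][column]`, which mirrors the matrix view of an image used throughout this section.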

Resolution and Size

The resolution of an image determines the number of pixels it contains. High-resolution images have more pixels, providing finer detail but requiring more storage and processing power. Low-resolution images have fewer pixels, which results in a coarser representation but requires less storage and processing capacity. Resolution is typically denoted as width x height (e.g., 1920x1080 for Full HD).

The resolution of an image is a critical aspect of its representation. It directly influences the level of detail captured in the image and has implications for storage, processing power, and the overall user experience.

High-Resolution Images

High-resolution images are characterized by a dense arrangement of pixels. In these images, there are numerous pixels packed into every inch of the image. As a result, high-resolution images capture intricate details, sharp edges, and a broad spectrum of colors. These images are favored when precision and clarity are paramount, such as in photography, medical imaging, or high-definition television.

However, the richness of high-resolution images comes at a cost. They demand substantial storage space due to the large number of pixels, which can quickly accumulate into sizable files. Processing high-resolution images also necessitates more computational power and memory, making them resource-intensive for applications like real-time image analysis or video processing.

Low-Resolution Images

Conversely, low-resolution images comprise a sparser distribution of pixels. There are fewer pixels per inch, resulting in a coarser representation. Low-resolution images are characterized by a reduction in fine details and smooth transitions between colors or shades. While they occupy significantly less storage space and demand fewer computational resources, they may lack the level of fidelity required for certain tasks.

Resolution Notation

Resolution is typically denoted as the product of the image's width and height, expressed in pixels. For instance, the notation "1920x1080" signifies a resolution of 1920 pixels in width and 1080 pixels in height. This resolution corresponds to the Full HD standard, a common format in high-definition displays. Other common resolutions include 1280x720 (HD), 3840x2160 (4K Ultra HD), and 7680x4320 (8K Ultra HD), each offering varying levels of detail and clarity.
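To make the storage cost of these resolutions concrete, the short sketch below computes the pixel count and the raw, uncompressed size of each one. It assumes 3 bytes per pixel (24-bit RGB); the function name `uncompressed_size_bytes` is chosen here for illustration only.

```python
def uncompressed_size_bytes(width, height, bytes_per_pixel=3):
    """Raw size of an uncompressed image: one fixed-size record per pixel."""
    return width * height * bytes_per_pixel


resolutions = {
    "HD": (1280, 720),
    "Full HD": (1920, 1080),
    "4K Ultra HD": (3840, 2160),
    "8K Ultra HD": (7680, 4320),
}

for name, (w, h) in resolutions.items():
    pixels = w * h
    size_mib = uncompressed_size_bytes(w, h) / (1024 * 1024)
    print(f"{name}: {pixels} pixels, about {size_mib:.1f} MiB uncompressed")
```

Doubling both width and height quadruples the pixel count, which is why storage and processing costs grow so quickly with resolution.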

The choice of image resolution depends on the specific requirements of the task at hand. High-resolution images excel in scenarios where detail and precision are paramount, whereas low-resolution images are favored when conserving storage and computational resources takes precedence. As technology advances, higher-resolution displays and imaging devices continue to raise our standards of visual clarity and expand what is achievable with image representation.

Color Spaces

In the realm of image processing, the representation of colors plays a pivotal role, and various color spaces have been devised to suit specific applications. Among these, the most prevalent is RGB, which represents colors using red, green, and blue channels. Additional color spaces like CMYK (utilized in printing), HSV (defined by hue, saturation, and value), and YUV (which separates luminance and chrominance) are tailored for specialized tasks, such as color manipulation and correction.

Both RGB and CMYK serve as color schemes for mixing digital image colors. RGB, standing for red, green, and blue, comprises the primary colors in this model. Each color component is represented by 1 byte, allowing values to range from 0 to 255. Consequently, each pixel in an RGB image is represented by 3 bytes. This amalgamation of three colors, each with 256 possible tones, results in a staggering 16.8 million potential color tones. Images employing 3 bytes per pixel are labeled as 24-bit true color images. An alternative approach employs 8 bits, or 1 byte, to represent all three colors per pixel, conserving memory. In this case, the maximum number of colors, with 1 byte per pixel, is 256. Color quantization is a technique that converts a 24-bit true color image into an 8-bit image. This process involves creating a color map for an image with reduced color density based on an image with a higher color density. A basic form of quantization assigns 3 bits to red, 3 bits to green, and 2 bits to blue, reflecting the human eye's relative insensitivity to blue light.
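The arithmetic and the basic 3-3-2 quantization described above can be sketched as follows. This is a fixed bit-allocation scheme without an image-specific color map; the function names `quantize_332` and `expand_332` are hypothetical and chosen only for this example.

```python
def quantize_332(r, g, b):
    """Pack an 8-bit-per-channel RGB color into one byte laid out as RRRGGGBB."""
    return ((r >> 5) << 5) | ((g >> 5) << 2) | (b >> 6)


def expand_332(code):
    """Approximate the original color from a packed RRRGGGBB byte."""
    r3 = (code >> 5) & 0b111
    g3 = (code >> 2) & 0b111
    b2 = code & 0b11
    # Scale each reduced channel back to the 0..255 range.
    return (r3 * 255 // 7, g3 * 255 // 7, b2 * 255 // 3)


print(256 ** 3)                                 # number of 24-bit colors
print(2 ** 8)                                   # number of 8-bit colors
print(expand_332(quantize_332(200, 100, 50)))   # color after a round trip
```

The round trip does not return the original color exactly, which is the quality loss that quantization trades for the smaller memory footprint.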

RGB - The Additive Color Model

RGB is grounded in the physical primary colors: red, green, and blue. These colors are fundamental in the digital domain, forming the basis for all digital representations, including images displayed on screens and digital photographs. RGB operates on the principle of additive color mixing, meaning that as more color is added, the image becomes brighter. A 100% application of all three primary colors results in white.

CMYK - The Subtractive Color Model

In contrast to RGB, the CMYK model is subtractive. It consists of four inks: cyan, magenta, yellow, and the key color, black. These colors are applied to a white background, and the more color is applied, the darker the result. The key color, black, is essential because a full application of cyan, magenta, and yellow does not produce true black but rather a dark brown. CMYK is the standard color mode for offset printing, as well as for home printers and the online printing industry. The colors are applied sequentially, with the mixing ratio producing a vast array of color tones. Theoretically, CMYK can represent over 4 billion colors, but in practice, far fewer can be displayed on screens or printed. Notably, the CMYK color space is smaller than the RGB color space.

@fig:rgb-vs-cmyk shows the color combinations of the RGB and CMYK models.

In short, the RGB color model finds its strength in digital image processing, while CMYK is predominantly employed for printed materials, making the choice between the two dependent on the medium and the intended use.
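The relationship between the additive and the subtractive model can be illustrated with a commonly used naive conversion formula. The sketch below is a simplification that ignores ink, paper, and device characteristics, so it should not be read as how a real print workflow converts colors; the function name `rgb_to_cmyk` is chosen for this example.

```python
def rgb_to_cmyk(r, g, b):
    """Naive conversion from 8-bit RGB to CMYK fractions in the range 0.0..1.0."""
    r_, g_, b_ = r / 255, g / 255, b / 255
    k = 1 - max(r_, g_, b_)
    if k == 1:
        # Pure black: use only the key ink.
        return (0.0, 0.0, 0.0, 1.0)
    c = (1 - r_ - k) / (1 - k)
    m = (1 - g_ - k) / (1 - k)
    y = (1 - b_ - k) / (1 - k)
    return (c, m, y, k)


print(rgb_to_cmyk(255, 255, 255))  # white: no ink on white paper
print(rgb_to_cmyk(255, 0, 0))      # red: magenta plus yellow
print(rgb_to_cmyk(0, 0, 0))        # black: key ink only
```

Full intensity on all RGB channels maps to no ink at all, while zero intensity maps to full black, which mirrors the additive versus subtractive behavior described above.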

Digital Image Formats

When it comes to digital imagery, the format in which images are stored plays a pivotal role in determining how they are organized, shared, and experienced. A range of image formats exists, each defining the structure of pixel data, metadata, and, where applicable, compression techniques within the file. Notable image formats in this diverse landscape encompass JPEG, PNG, GIF, BMP, and TIFF.

Consider JPEG, a widely embraced format notable for its capacity to reduce file size through lossy compression. While this compression effectively trims file size, it comes at the cost of sacrificing a degree of image quality. On the opposite end of the spectrum is PNG, a format celebrated for its use of lossless compression, which meticulously conserves image fidelity.

The choice of image format carries significant consequences, extending beyond mere technicalities. Each format has been meticulously tailored with specific attributes, traits, and application scenarios in mind. Consequently, the selection of the right format holds utmost importance, as it has the power to shape how visual data is archived, shared, and presented.

Let's delve deeper into the distinguishing features of some prevalent digital image formats to appreciate their unique roles in the digital imagery landscape.

JPEG (Joint Photographic Experts Group)

- Compression: JPEG employs lossy compression, which means it reduces file size by selectively discarding some image data. This compression results in smaller file sizes but may lead to a loss of image quality, particularly in highly compressed images.

- Use Cases: JPEG is ideal for photographs and images with gradients, as it provides good compression while maintaining acceptable visual quality. It is the most widely used format for web images and digital photography.

PNG (Portable Network Graphics)

- Compression: PNG uses lossless compression, preserving image quality without sacrificing detail. It's particularly efficient at compressing images with large areas of solid color.

- Transparency: PNG supports transparent backgrounds, making it suitable for images with variable levels of opacity, such as logos and icons.

- Use Cases: PNG is commonly used for images requiring high quality, transparency, or images with sharp edges, like logos, icons, and graphics. It is also favored for web graphics and when precise reproduction of visual elements is paramount.
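PNG's compression is based on DEFLATE, which Python exposes through the standard `zlib` module. The sketch below is not a PNG encoder (it skips PNG's file structure and row filtering); it only illustrates, on made-up raw pixel data, that lossless compression restores the input exactly and that areas of solid color compress far better than noise.

```python
import random
import zlib

width, height = 64, 64

# One byte per pixel: a flat gray image and a noisy image of the same size.
flat = bytes([200]) * (width * height)
rng = random.Random(0)  # fixed seed so the run is repeatable
noisy = bytes(rng.randrange(256) for _ in range(width * height))

for name, raw in (("flat", flat), ("noisy", noisy)):
    packed = zlib.compress(raw)
    # Lossless: decompressing returns exactly the original bytes.
    assert zlib.decompress(packed) == raw
    print(f"{name}: {len(raw)} bytes raw, {len(packed)} bytes compressed")
```

A lossy format such as JPEG would not pass the round-trip check, because it deliberately discards part of the image data.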

GIF (Graphics Interchange Format)

- Color Palette: GIF images use a limited color palette (up to 256 colors), which is advantageous for simple graphics and animations.

- Animation: GIF supports simple animations by displaying a sequence of images in rapid succession.

- Use Cases: GIFs are popular for small animations, memes, and simple graphics. They are often used for web banners and avatars due to their small file sizes.

BMP (Bitmap)

- Compression: BMP is typically uncompressed, resulting in large file sizes. This format retains all image data without any loss.

- Use Cases: BMP is used when absolute image fidelity is required, such as in medical imaging, where every pixel must accurately represent data. It's less common for web and general-purpose images due to its file size.

TIFF (Tagged Image File Format)

- Compression: TIFF supports both lossless and lossy compression options, making it versatile for various applications.

- Bit Depth: TIFF can store images with high bit depth, allowing for the preservation of extensive color information.

- Use Cases: TIFF is favored in professional photography, print media, and industries requiring precise image representation, like geospatial imaging and medical imaging. It is also used as an archival format for preserving high-quality images.

The choice of image format should align with the specific requirements of the task at hand. Consider factors such as image quality, file size constraints, transparency needs, and compatibility with the intended platform or application. By selecting the appropriate format, you can ensure that your digital images are both visually appealing and optimized for their intended purpose.

Image Metadata

Images often contain metadata, which is additional information about the image, such as date and time of creation, camera settings, and geolocation. This metadata can be critical for various applications, including forensic analysis and data management.

Image Metadata enriches our understanding of images by providing crucial contextual details that transcend mere visual perception. These are the main types of image metadata:

- Date and Time: Metadata often includes the date and time when the image was created or captured. This timestamp can be instrumental for sorting, organizing, and understanding the chronological context of images.

- Camera Settings: Information about the camera's settings at the time of capture is invaluable for photographers and enthusiasts. Details such as shutter speed, aperture, ISO, and focal length offer insights into the technical aspects of image creation.

- Geolocation: Geolocation metadata, also known as geotagging, provides geographic coordinates (latitude and longitude) of the image's capture location. This data is a game-changer for applications like travel photography, mapping, and navigation.

- Device Information: Metadata may reveal the make and model of the camera or device used to capture the image, shedding light on the equipment's capabilities and characteristics.

- Copyright and Licensing: Metadata can include copyright information and licensing terms, allowing creators to protect their intellectual property rights and specify how others can use the image.

- Keywords and Tags: Descriptive keywords and tags enable efficient image categorization and search. These annotations can be manually added or automatically generated by image management software.

Image metadata serves a multitude of essential purposes across various domains. In the realm of forensics, metadata acts as a digital detective, offering crucial insights into the authenticity and origins of images, thus playing a pivotal role in criminal investigations and legal proceedings. Simultaneously, metadata becomes the archivist's trusted ally, ensuring the efficient organization and preservation of extensive image collections in both personal photo libraries and corporate databases.

Businesses find metadata indispensable for streamlined data management, facilitating the efficient retrieval and utilization of images in marketing, product catalogs, and brand management. For scientists and researchers, metadata becomes a scientific chronicler, meticulously documenting experimental conditions and contextual details related to scientific imaging. In the digital world, metadata enhances the user experience in online image galleries and libraries, allowing for precise search and categorization, ensuring users swiftly locate the images they seek. Furthermore, metadata takes on the role of a cultural steward, preserving historical and cultural contexts within archival images, thus contributing to the safeguarding of our cultural heritage and historical records. However, it is essential to exercise caution, as metadata can inadvertently disclose sensitive information, such as the location of personal photographs, raising critical privacy concerns that necessitate awareness and protection measures.

Image metadata is an often-overlooked yet invaluable component of digital images. It provides the rich tapestry of context and information that enhances our appreciation and understanding of visual data. Whether for personal organization, professional applications, or legal investigations, metadata plays an indispensable role in the world of digital imagery.

Vector Graphics vs. Raster Images

While raster images, composed of pixels, dominate the digital image landscape, another formidable contender exists in the form of vector graphics. These two distinct approaches to representing visual content offer unique attributes, each tailored to specific tasks, preferences, and creative endeavors.

Raster Images (Pixel-Based)

Raster images, also known as bitmap images, rely on a grid of pixels to convey visual information. Each pixel carries a specific color or shade, collectively forming the image when viewed from a distance. However, as raster images are resolution-dependent, resizing them can result in pixelation and loss of detail.

These images excel at capturing the nuances of photographs and complex scenes, making them well-suited for digital photography, web graphics, and realistic digital art. They shine in scenarios where the richness of detail is paramount, offering intricate textures, shading, and color variations.

Vector Graphics (Mathematical-Based)

Vector graphics, in contrast, are constructed using mathematical equations that define shapes, lines, and curves. Rather than relying on pixels, vector graphics remain resolution-independent. This unique feature means they can be scaled up or down indefinitely without any loss of quality, making them ideal for tasks that demand precision and versatility.
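The difference between the two approaches can be sketched with a circle. In the hedged example below, the circle is described mathematically in unit-square coordinates (a stand-in for a vector description) and can be rendered at any grid size, whereas an existing raster can only be enlarged by repeating pixels. Both helper functions are written for this illustration and do not come from any graphics library.

```python
def rasterize_circle(size, cx=0.5, cy=0.5, radius=0.4):
    """Render a circle given in unit-square coordinates onto a size x size grid."""
    rows = []
    for j in range(size):
        row = []
        for i in range(size):
            # Sample at the pixel center, mapped into the unit square.
            x = (i + 0.5) / size
            y = (j + 0.5) / size
            inside = (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2
            row.append(1 if inside else 0)
        rows.append(row)
    return rows


def upscale_nearest(image, factor):
    """Enlarge a raster by repeating each pixel factor x factor times."""
    return [
        [value for value in row for _ in range(factor)]
        for row in image
        for _ in range(factor)
    ]


small = rasterize_circle(8)
blocky = upscale_nearest(small, 4)   # 32x32, built from the 8x8 samples
smooth = rasterize_circle(32)        # 32x32, re-rendered from the description

for row in smooth[::2]:
    print("".join("#" if v else "." for v in row))
```

Printing `blocky` the same way shows a staircase outline made of 4x4 blocks, which is the pixelation that appears when a raster image is enlarged.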

Vector graphics find their forte in tasks such as logo design, illustration, typography, and graphic design. They excel at producing crisp, clean, and infinitely scalable visuals, where precise lines, shapes, and text are essential. Common file formats for vector graphics include SVG (Scalable Vector Graphics), AI (Adobe Illustrator), and EPS (Encapsulated PostScript).

Comparing Use Cases

- Raster Images: Raster images are the go-to choice for projects that require lifelike renderings, such as photographs, digital paintings, and textures in 3D modeling. Their rich detail and ability to capture complex scenes make them indispensable in these contexts.

- Vector Graphics: Vector graphics shine when precision is paramount. They are preferred for tasks like logo creation, where the logo may need to appear on various platforms and in various sizes. Vector graphics also excel in graphic design, typography, and any situation where scalability and accuracy are key.

In summary, the choice between raster images and vector graphics hinges on the specific needs of a project. Raster images deliver lifelike detail, while vector graphics offer unmatched scalability and precision. By understanding the strengths of each approach, digital creators can make informed decisions to ensure their visual content meets the demands of their creative vision and practical requirements.

Image Enhancement

Image Distortion

In an ideal imaging system, straight lines on the object plane are also displayed straight on the image plane. In practice, however, this does not always hold. Image distortion occurs when straight lines in an image appear warped or curved in an unnatural way. There are two types of distortion: optical distortion and perspective distortion. Optical distortion is caused by the optical design of camera lenses and is therefore often referred to as "lens distortion", whereas perspective distortion is caused by the position of the subject within the image. Optical distortion also behaves differently depending on the lens system and on whether the lens can be removed from the camera.

Optical distortion

Optical distortion deforms and curves straight lines so that they appear curved in images. Optical distortion is the result of optical design when special lens elements are used to reduce spherical and other kinds of aberrations. In short, optical distortion is a defect in the lens.

Three popular types of optical distortions are:

- Barrel distortion

- Pincushion distortion

- Mustache distortion

The three types of optical distortion are discussed in more detail below.

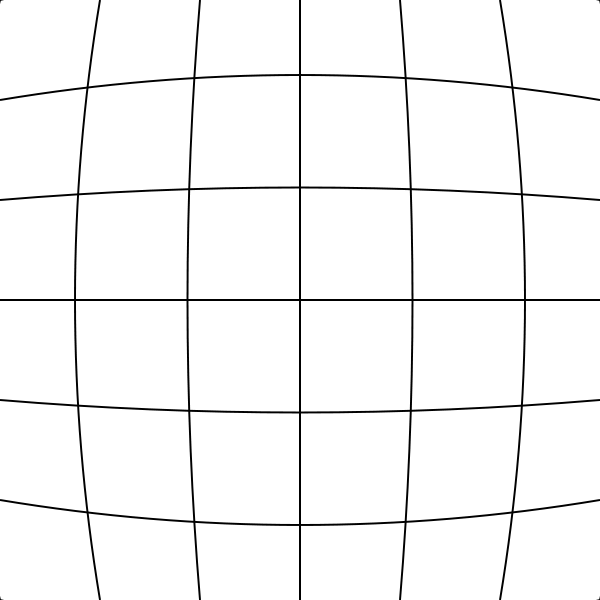

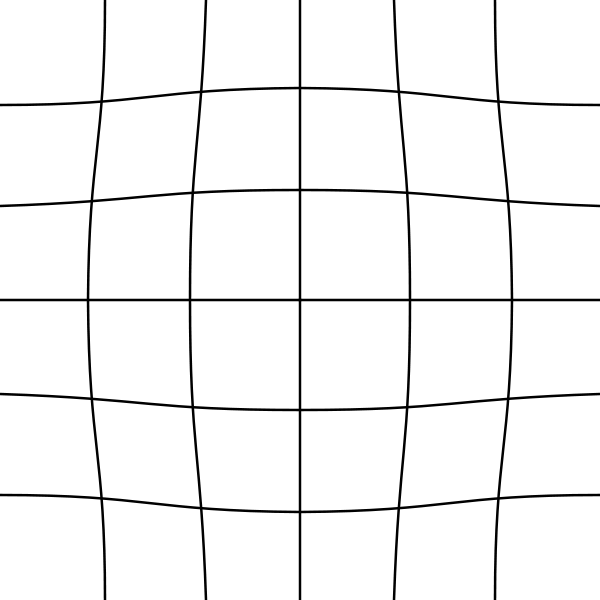

Barrel distortion

When straight lines are curved inward in a barrel shape, this type of aberration is called barrel distortion. Barrel distortion is typically present in most wide-angle fixed focal length lenses and many zoom lenses with relatively short focal lengths. For example, barrel distortion occurs in wide-angle lenses because the field of view of the lens is much larger than the size of the image sensor, so the image must be "squeezed" to fit. As a result, straight lines are visibly bent inward, especially toward the outermost edges of the image. The following figure shows this type of distortion.

@fig:barrel-distortion

Notice that the lines appear straight in the center of the image and begin to bend only farther away from the center. This is because the magnification is unchanged along the optical axis (at the center of the lens) but decreases toward the corners. The amount of distortion can vary depending on the distance between the camera and the subject.

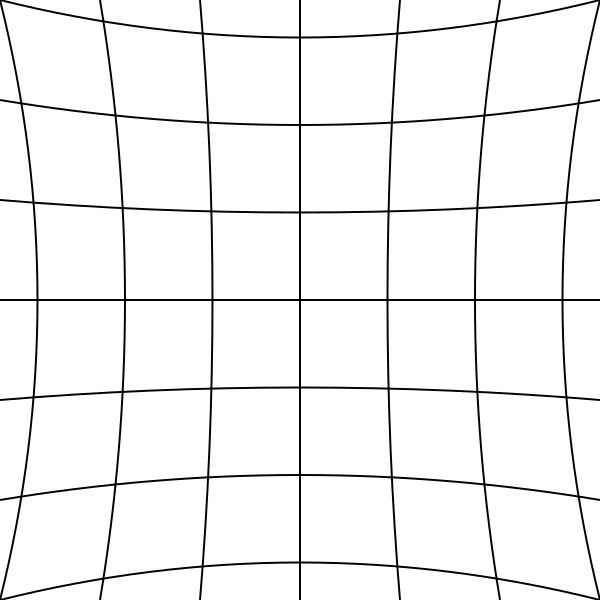

Pincushion distortion

Pincushion distortion is the exact opposite of barrel distortion. The straight lines are curved outward from the center. This type of distortion is common in telephoto lenses and is caused by the magnification increasing from the optical axis towards the edges of the image. This time, the field of view is smaller than the size of the image sensor and must therefore be stretched. As a result, straight lines appear to be drawn upward in the corners. The following figure shows this type of distortion.

@fig:pincushion-distortion

Expensive professional cameras have elements that can significantly reduce the effect. The pincushion distortion can be very strong with lenses of simple cameras.

Mustache distortion

The most troublesome type of distortion is mustache distortion. It is also called "wavy" distortion and is essentially a combination of barrel distortion and pincushion distortion. Straight lines appear curved inward toward the center of the frame, then curve outward at the outermost corners. The following figure shows this type of distortion.

@fig:mustache-distortion

This type of distortion is complex. It could potentially be fixed, but that requires special methods.

Perspective distortion

Perspective distortion, unlike optical distortion, has nothing to do with the optics of the lens and is therefore not a fault of the lens. When you project a three-dimensional space into a two-dimensional image, if the subject is too close to the camera, it may appear disproportionately large or distorted compared to the objects in the background. This is a normal occurrence and something you can easily see with your own eyes. For example, if you shoot a person up close with an ultra-wide-angle lens, the nose, eyes, and lips may appear unrealistically large, while the ears look extremely small or even disappear from the image altogether.

When we talk about perspective distortions or errors, we mean that lines that are parallel in reality move towards each other in the photo. What is called an error in this case, however, is actually a completely normal phenomenon of spatial perception: the further away an object is, the smaller it appears.

Calibration

Image calibration, i.e. the correction of image distortions, is one of the tasks of image processing. A corrected image is used as a starting point for a number of image processing applications, for example:

- the exact determination of the position of objects in the object space

- the exact detection of the object geometry, which is described by circumference, area, distances, etc.,

- the search for objects in the image with undistorted search templates.

Due to various influences during the creation of the image, the object and the image deviate more or less from each other. However, for many applications this is not a disadvantage.

In some cases, we have to make corrections to the image. The geometric arrangement of the pixels is then changed so that the originally distorted image \(G(x,y)\) becomes a corrected image \(G'(x',y')\), while the pixel values themselves must not change. The correction formula is:

\[ G'(x', y') = G(x, y) \]

Here the transformed coordinates \(x'\) and \(y'\) are functions \(x'(x,y)\) and \(y'(x,y)\) of \(x\) and \(y\), the coordinates of the distorted image.

The functions \(x'(x,y)\) and \(y'(x,y)\) can be represented by polynomials:

\[ x'(x,y) = a_0 + a_1 x + a_2 y + a_3 x^2 + a_4 x y + a_5 y^2 + \dots \]

\[ y'(x,y) = b_0 + b_1 x + b_2 y + b_3 x^2 + b_4 x y + b_5 y^2 + \dots \]

In some cases, the calibration can already be described with the linear parts of the above polynomials. In such cases, it can be simplified to:

\[ x' = a_0 + a_1 x + a_2 y \]

\[ y' = b_0 + b_1 x + b_2 y \]

The above linear mapping equations map parallel lines onto parallel lines, so the image and the transformed image remain related. For this reason they are also called affine (related) transformations. The coefficients \(a_0\) and \(b_0\) specify the shift of the image in the \(x\) and \(y\) directions. The coefficients \(a_1\), \(a_2\), \(b_1\), and \(b_2\) determine the remaining transformation. Examples of affine transformations with various coefficients are presented in the following table:

Image calibration: coefficients of the linear mapping

| \(a_1\) | \(a_2\) | \(b_1\) | \(b_2\) | Image transformation |
|---|---|---|---|---|
| 1 | 0 | 0 | 1 | Identity (same image) |
| \(\cos(\alpha)\) | \(-\sin(\alpha)\) | \(\sin(\alpha)\) | \(\cos(\alpha)\) | Rotation of the image around the zero point by the angle \(\alpha\) |
| \(\beta_x\) | 0 | 0 | \(\beta_y\) | Scaling of the image by the factors \(\beta_x\) and \(\beta_y\) in the \(x\) and \(y\) directions |
| 1 | \(\tan(\alpha)\) | 0 | 1 | Shear in \(x\) by the angle \(\alpha\) |
| 1 | 0 | \(\tan(\beta)\) | 1 | Shear in \(y\) by the angle \(\beta\) |

Other coefficient values are possible; the table above lists only a few of the most important ones.
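The rows of the coefficient table can be tried out on individual points. The sketch below assumes the linear mapping has the form \(x' = a_0 + a_1 x + a_2 y\) and \(y' = b_0 + b_1 x + b_2 y\), which is consistent with the table entries; the function names are chosen for this example.

```python
import math


def affine(point, a1, a2, b1, b2, a0=0.0, b0=0.0):
    """Map (x, y) to (x', y') with x' = a0 + a1*x + a2*y and y' = b0 + b1*x + b2*y."""
    x, y = point
    return (a0 + a1 * x + a2 * y, b0 + b1 * x + b2 * y)


def rotation(alpha):
    """Coefficients (a1, a2, b1, b2) for a rotation about the origin by alpha radians."""
    return (math.cos(alpha), -math.sin(alpha), math.sin(alpha), math.cos(alpha))


def scaling(beta_x, beta_y):
    """Coefficients for scaling by beta_x in x and beta_y in y."""
    return (beta_x, 0.0, 0.0, beta_y)


def shear_x(alpha):
    """Coefficients for a shear in x by the angle alpha (radians)."""
    return (1.0, math.tan(alpha), 0.0, 1.0)


print(affine((1.0, 0.0), 1, 0, 0, 1))                  # identity
print(affine((1.0, 0.0), *rotation(math.pi / 2)))      # quarter turn
print(affine((1.0, 1.0), *scaling(2.0, 3.0)))          # anisotropic scaling
print(affine((0.0, 1.0), *shear_x(math.pi / 4)))       # shear in x
print(affine((1.0, 1.0), 1, 0, 0, 1, a0=5.0, b0=-2.0)) # pure shift
```

To transform a whole image, the same mapping is applied to every pixel coordinate, usually together with an interpolation step because the mapped coordinates rarely fall exactly on the pixel grid.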

However, images often exhibit distortions that can only be corrected with the help of nonlinear transformations \(x'(x,y)\) and \(y'(x,y)\).

The cause of the distortion determines the type of function required. For example, barrel and pincushion distortions can be corrected by the following functions:

The parameter \(a\) is determined by the strength and type of distortion. It has the value zero if the image is distortion-free. For barrel distortion correction it takes negative values, for pincushion distortion correction positive values.
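The role of such a parameter can be explored with a widely used single-parameter radial model, assumed here purely for illustration and not necessarily the exact form intended above: each point is scaled by \(1 + a r^2\), where \(r^2 = x^2 + y^2\) is measured from the image center in normalized coordinates. Which sign of \(a\) compensates which distortion depends on whether the mapping is applied from source to target or from target to source, so the sketch only shows the geometric effect.

```python
def radial_map(x, y, a):
    """Scale a point radially by (1 + a * r^2), with r measured from the origin.

    Coordinates are assumed to be relative to the image center and normalized
    so that r is about 1 at the image border. This model is an assumption made
    for illustration, not a formula taken from the text.
    """
    r2 = x * x + y * y
    factor = 1.0 + a * r2
    return (x * factor, y * factor)


corner = (0.8, 0.6)          # r = 1, near the image border
near_center = (0.08, 0.06)   # r = 0.1, close to the optical axis

for a in (-0.1, 0.0, 0.1):
    print(a, radial_map(*corner, a), radial_map(*near_center, a))
```

With \(a = 0\) every point stays where it is, and for non-zero \(a\) the displacement grows with the distance from the center, which matches the observation that lens distortion is strongest toward the image corners.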

Image Filtering

Filters allow you to extract specific information from an image while eliminating unwanted information. As a result, the desired features are enhanced compared to their surroundings. The filtering process is associated with a reduction of the available information. In a sense, a filter can be thought of as an operator applied to a source image to create a new, filtered target image. The new pixel values are computed according to the mathematical rule specific to the filter.

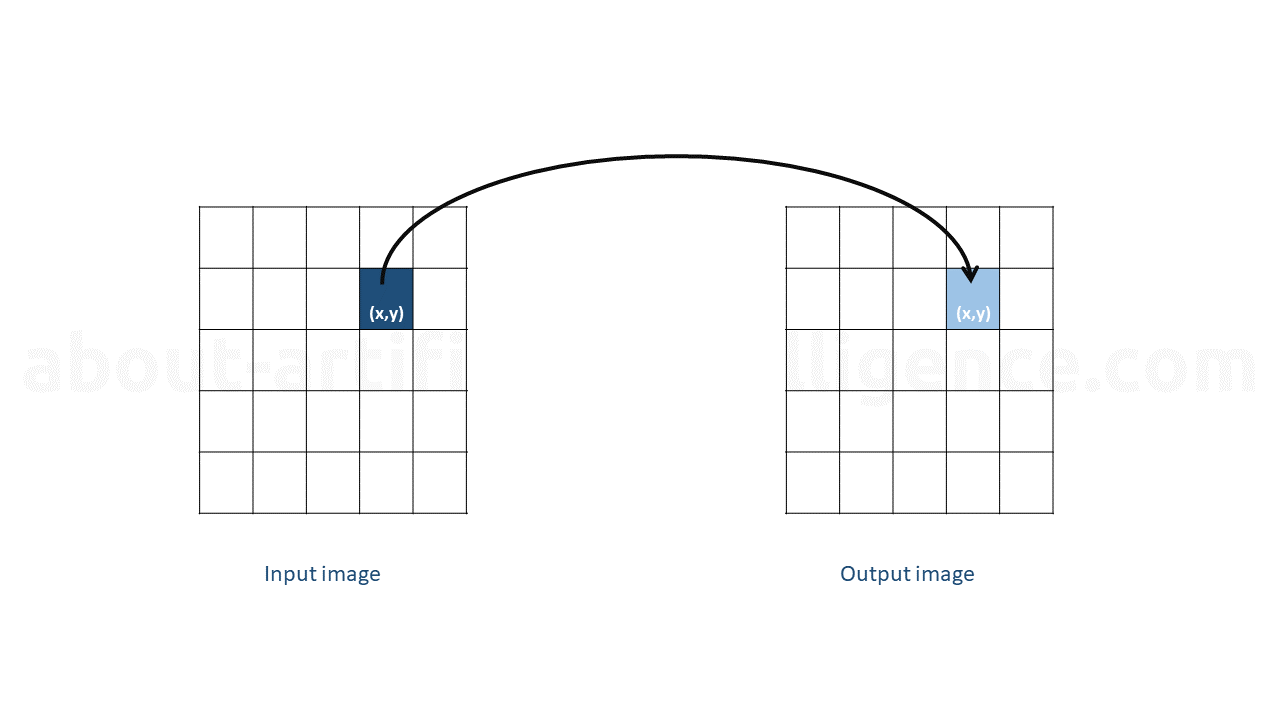

Point operators

Point operators are often used as a means of improving contrast and correcting brightness, to name just a few examples. The name of this class of operators comes from the fact that a new pixel in the output image is calculated based only on the value of the pixel and its position in the original image, i.e. the neighboring pixels are not taken into account. Some filters of this kind are arithmetic operations between images, contrast enhancement and brightness correction. The following figure shows how a point operator filter works at the pixel level.

@fig:point-operators

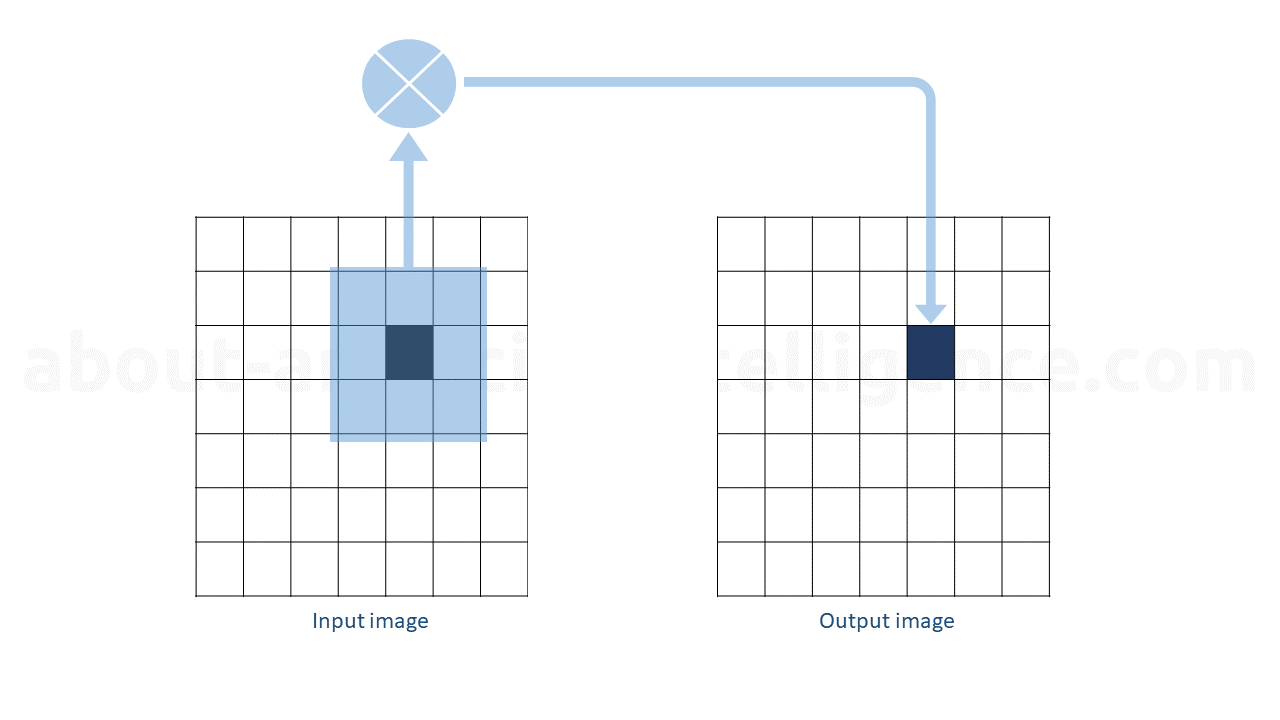
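A point operator can be sketched as a function that is applied to each pixel value on its own. The example below uses a grayscale image stored as nested lists with values from 0 to 255; the helper names and the sample values are invented for illustration.

```python
def clamp(value, low=0, high=255):
    """Keep a value inside the valid brightness range."""
    return max(low, min(high, value))


def point_operator(image, func):
    """Apply func to every pixel independently; neighboring pixels are never read."""
    return [[func(v) for v in row] for row in image]


def brighten(offset):
    """Brightness correction: add a constant offset to each pixel."""
    return lambda v: clamp(v + offset)


def stretch_contrast(gain, pivot=128):
    """Contrast enhancement: scale each pixel's distance from a pivot value."""
    return lambda v: clamp(round(pivot + gain * (v - pivot)))


gray = [[10, 100, 200], [50, 128, 250]]
print(point_operator(gray, brighten(40)))
print(point_operator(gray, stretch_contrast(1.5)))
```

Because each output pixel depends on a single input pixel, point operators are simple to apply to all pixels in parallel.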

Linear local filters

The filtering of an image by means of a linear local filter is carried out by a mathematical convolution on the respective image section with a filter matrix. This filter matrix weights the pixels when calculating the new pixel in the resulting image. The following figure illustrates graphically the idea behind a linear local filter. The mathematical convolution is performed for each of the pixels of the original image and thereby the pixels in the new image are calculated.

@fig:linear-local-filters

For such a system, the output \(h(i,j)\) corresponds to the convolution of an input function \(g(i,j)\) with the impulse response \(f(i,j)\) and is defined as follows:

\[ h(i,j) = \sum_{k} \sum_{l} f(k,l)\, g(i-k, j-l) \]

If \(h\) and \(g\) are image matrices, the convolution corresponds to the calculation of weighted sums of the pixels of an image. The impulse response \(f(i,j)\) is called the convolution mask or filter kernel. For each pixel \((i,j)\) of an image \(g\), the value \(h(i,j)\) is calculated by shifting the filter kernel \(f\) to the pixel \((i,j)\) and forming the weighted sum of the pixels in the neighborhood of \((i,j)\). The individual weighting factors correspond to the values of the filter kernel.

This type of filter design is very diversified and is used in a wide variety of functions with different filter matrices. Some areas of its application are sharpening, blurring, noise reduction and edge detection.
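A linear local filter can be sketched as a direct, unoptimized convolution of an image \(g\) with a kernel \(f\), computing \(h(i,j) = \sum_k \sum_l f(k,l)\, g(i-k, j-l)\) with the kernel indices centered on zero. The border handling (pixels outside the image count as 0) and the sample data are assumptions made for this example.

```python
def convolve2d(image, kernel):
    """Convolve a grayscale image with an odd-sized kernel.

    Pixels outside the image are treated as 0 (one of several possible
    border conventions, assumed here for simplicity).
    """
    height, width = len(image), len(image[0])
    ry, rx = len(kernel) // 2, len(kernel[0]) // 2
    out = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            total = 0.0
            for k in range(-ry, ry + 1):
                for m in range(-rx, rx + 1):
                    y, x = i - k, j - m   # convolution flips the kernel
                    if 0 <= y < height and 0 <= x < width:
                        total += kernel[k + ry][m + rx] * image[y][x]
            out[i][j] = total
    return out


box_blur = [[1 / 9] * 3 for _ in range(3)]   # averaging kernel for blurring

image = [
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
for row in convolve2d(image, box_blur):
    print([round(v, 2) for v in row])
```

Swapping `box_blur` for a different filter matrix changes the effect, for example sharpening or edge detection, without changing the convolution routine itself.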

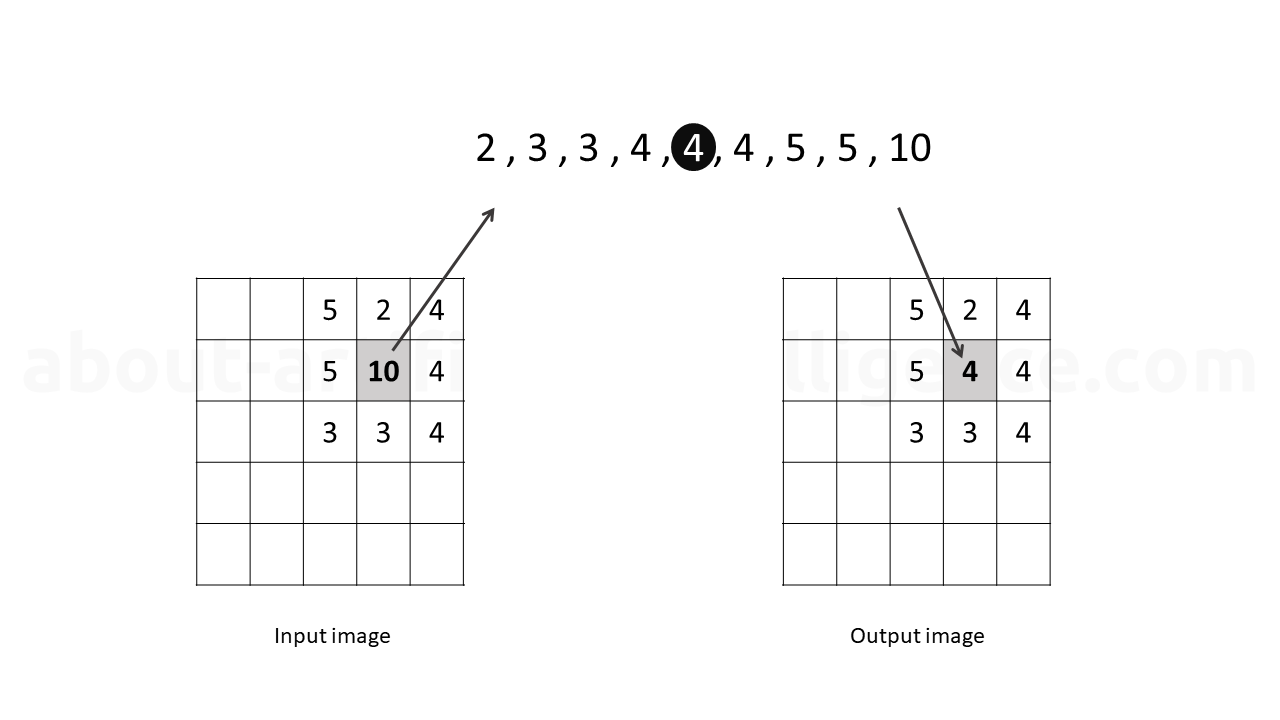

Nonlinear local filters

Unlike linear filters, nonlinear filters do not perform mathematical convolution. Instead, sorting and selection operations are used in the filter matrix that moves across the image. For example, the following figure shows nonlinear filtering using a median filter. In the first step, the pixels surrounding the central pixel are sorted by brightness value. In the second step, the median is selected and used as the new value for the corresponding pixel of the resulting image.

@fig:nonlinear-median
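The two steps of the median filter, sorting the neighborhood and selecting the middle value, can be sketched as follows. The border handling (neighbors outside the image are skipped) and the choice of the lower middle value for even-sized windows are assumptions made for this example.

```python
def median_filter(image, radius=1):
    """Replace each pixel by the median of its (2*radius+1) x (2*radius+1) neighborhood.

    Neighbors outside the image are skipped, so border pixels use fewer
    samples; for an even number of samples the lower middle value is taken.
    """
    height, width = len(image), len(image[0])
    out = []
    for i in range(height):
        row = []
        for j in range(width):
            window = [
                image[y][x]
                for y in range(max(0, i - radius), min(height, i + radius + 1))
                for x in range(max(0, j - radius), min(width, j + radius + 1))
            ]
            ordered = sorted(window)                       # step 1: sort by brightness
            row.append(ordered[(len(ordered) - 1) // 2])   # step 2: select the median
        out.append(row)
    return out


noisy = [
    [10, 10, 10, 10],
    [10, 255, 10, 10],
    [10, 10, 10, 10],
]
print(median_filter(noisy))
```

The isolated bright pixel disappears because it never reaches the middle of any sorted neighborhood, which is why median filters are effective against this kind of impulse noise.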

Similar to the median filter, minimum and maximum filters can also be utilized. With these filters, the output of the filter does not correspond to the median value of the brightness of the neighborhood, but to the minimum or maximum brightness value g of the neighborhood N: