Single-Layer Perceptrons

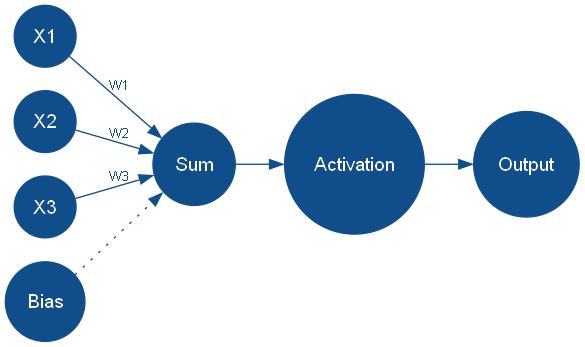

A single-layer perceptron is a basic building block of neural networks. It consists of one layer of artificial neurons, called perceptrons, arranged side by side: every perceptron receives the same inputs and produces its own output. Each perceptron computes a weighted sum of its inputs and then decides which of two outputs to produce (for example, 1 or 0). This decision is made by an "activation function," which maps the weighted sum to the perceptron's output.

A key property of single-layer perceptrons is that they can learn from data. Learning means adjusting the weight attached to each input so that the output moves closer to the desired target. These adjustments follow a procedure called the "perceptron learning rule," which keeps updating the weights until the training examples are classified correctly or a fixed number of passes over the data has been made.

Think of a perceptron as a tiny decision-maker. It multiplies each input by its weight, adds the results together with a bias, and passes the sum through the activation function. The result is a "yes" (1) or "no" (0) answer. Here's how it looks in math:

Let:

- \(x_1, x_2, \ldots, x_n\) be the input values.

- \(w_1, w_2, \ldots, w_n\) be the corresponding weights.

- \(b\) be the bias term.

- \(z\) be the weighted sum of inputs and bias: \(z = \sum_{i=1}^{n} (w_i \cdot x_i) + b\).

- \(a\) be the output after applying an activation function: \(a = \text{activation}(z)\).

Mathematically, the output \(a\) of the perceptron can be written as:

\[ a = \text{activation}\left(\sum_{i=1}^{n} (w_i \cdot x_i) + b\right) = \text{activation}(z) \]

The classic perceptron uses a step function as its activation function; variants use a sigmoid function or ReLU (Rectified Linear Unit), depending on the specific design of the perceptron and the neural network it's a part of.
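
The forward pass described by these definitions can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a step activation with threshold 0; the function names `step` and `perceptron_output` and the example inputs, weights, and bias are hypothetical, chosen only to show the arithmetic of \(z\) and \(a\).

```python
def step(z, threshold=0.0):
    """Heaviside step activation: 1 if z >= threshold, otherwise 0."""
    return 1 if z >= threshold else 0


def perceptron_output(x, w, b):
    """Forward pass of one perceptron: a = step(sum_i w_i * x_i + b)."""
    z = sum(w_i * x_i for w_i, x_i in zip(w, x)) + b  # weighted sum plus bias
    return step(z)


# Hypothetical inputs, weights, and bias, chosen only for illustration
x = [1.0, 0.0, 1.0]
w = [0.5, -0.6, 0.2]
b = -0.6
print(perceptron_output(x, w, b))  # z is about 0.1, which is >= 0, so this prints 1
```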

Single-layer perceptrons serve as the building blocks of more complex neural networks. Their simplicity and effectiveness make them an essential topic in the study of artificial intelligence. By understanding the structure, learning algorithms, and limitations of single-layer perceptrons, we can gain valuable insights into the foundations of neural networks and their applications in solving real-world problems.

You can see a simple representation of a perceptron in action in single-layer-perceptrons.

Perceptron Learning Algorithm

The perceptron learning algorithm adjusts the weights and bias of a perceptron in response to labeled training data, allowing it to learn patterns and make accurate predictions. Here's a simplified description of how it works:

1. Initialization:

- Initialize the weights \(w\) and bias \(b\) to small random values or zeros.

2. Forward Pass:

- For each training example, compute the weighted sum of inputs: \(z = \sum_{i=1}^{n} w_ix_i + b\), where \(x_i\) is the input feature, \(w_i\) is the weight associated with that feature, \(b\) is the bias term, and \(n\) is the number of input features.

- Apply a thresholding function, such as the step function or a sigmoid function, to \(z\). If the result is greater than or equal to a certain threshold, the perceptron outputs one class (e.g., 1); otherwise, it outputs the other class (e.g., 0).

3. Error Calculation and Weight Updates:

- If the perceptron's output \(\hat{y}\) for a training example is incorrect, an error is computed as the difference between the true target value \(y\) (0 or 1) and the predicted output: \(y - \hat{y}\).

- The weights are updated using a learning rate (\(\alpha\)) and the error. The general weight update rule is:

\[ w_i \leftarrow w_i + \alpha \, (y - \hat{y}) \, x_i \]

- The bias term \(b\) is updated in a similar manner: \(b \leftarrow b + \alpha \, (y - \hat{y})\), where \(\alpha\) is the learning rate.

4. Repeat:

- Steps 2 and 3 are repeated for each training example in the dataset.

- The process runs for multiple passes (epochs) over the entire dataset, stopping when an epoch produces no misclassifications or when a maximum number of epochs is reached.
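
The four steps above can be combined into a short plain-Python sketch. It assumes zero initialization, a step activation with threshold 0, inputs and targets given as plain lists, and a hypothetical `train_perceptron` helper that stops after the first epoch with no mistakes. It is one straightforward way to implement the rule, not a reference implementation.

```python
def step(z):
    """Step activation with threshold 0 (the bias b plays the role of the threshold)."""
    return 1 if z >= 0 else 0


def train_perceptron(X, y, alpha=0.1, max_epochs=100):
    """Perceptron learning rule: w_i <- w_i + alpha * (y - y_hat) * x_i."""
    w = [0.0] * len(X[0])  # 1. initialize weights to zero
    b = 0.0                #    ... and the bias
    for epoch in range(max_epochs):
        mistakes = 0
        for x, target in zip(X, y):
            # 2. forward pass: weighted sum, then threshold
            prediction = step(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)
            # 3. error and update (the error is 0, so nothing changes, when correct)
            error = target - prediction
            if error != 0:
                mistakes += 1
                w = [w_i + alpha * error * x_i for w_i, x_i in zip(w, x)]
                b += alpha * error
        # 4. repeat epochs until one passes with no misclassifications
        if mistakes == 0:
            return w, b, epoch + 1
    return w, b, max_epochs


X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [0, 0, 0, 1]  # logical AND, which is linearly separable
w, b, epochs = train_perceptron(X, y, alpha=1.0)
print(w, b, epochs)  # → [2.0, 1.0] -3.0 6
print([step(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b) for x in X])  # → [0, 0, 0, 1]
```

The learning rate is set to 1.0 here so the arithmetic stays exact. With zero initial weights, any positive \(\alpha\) scales \(w\) and \(b\) by the same factor and produces the same predictions at every step, so the choice matters mainly when the weights start from random values.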

The perceptron training algorithm aims to adjust the weights and bias so that the perceptron correctly classifies as many training examples as possible. However, the perceptron is limited to linearly separable problems; it can only learn tasks where a linear decision boundary exists. For more complex tasks, multiple perceptrons or more advanced neural network architectures are required.

Activation Functions in Perceptrons

Activation functions play an important role in the behavior and learning capabilities of artificial neural networks, including perceptrons. These functions introduce non-linearity to the network, enabling it to capture and represent complex relationships in data. Several commonly used activation functions, including the step function, sigmoid function, and rectified linear unit (ReLU), have been pivotal in shaping the field of neural networks.

One of the simplest activation functions is the step function, also called the Heaviside step function. It maps any input to either 0 or 1, much like an on-off switch, and which value it produces depends on a fixed point called the "threshold." Here's how it works:

Imagine you have a number, let's call it \(x\). If \(x\) is smaller than the threshold, the output will be 0. But if \(x\) is equal to or bigger than the threshold, the output becomes 1.

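Mathematically, with threshold \(\theta\), it looks like this:

\[ f(x) = \begin{cases} 1 & \text{if } x \ge \theta \\ 0 & \text{if } x < \theta \end{cases} \]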

Even though the step function is simple, it has a limitation: it jumps abruptly from 0 to 1 and cannot express gradual changes or smooth transitions between values. Its slope is zero everywhere except at the threshold, where it is undefined, so it gives gradient-based training methods no signal to follow. This makes it a poor fit for many real-world problems. Early neural networks, such as the original perceptron, used it, but modern networks generally rely on smoother, more flexible activation functions. We'll learn about some of these later, especially the ones used in multi-layer perceptrons.
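
To compare the step function with the sigmoid and ReLU functions named earlier in this section, the plain-Python sketch below evaluates all three at a few points and estimates their slopes numerically. The helper `numerical_slope` and the sample points are hypothetical choices for illustration. Away from the threshold, the step function's estimated slope is 0, while the sigmoid's slope is positive everywhere.

```python
import math


def step(z, threshold=0.0):
    """Heaviside step: 0 below the threshold, 1 at or above it."""
    return 1.0 if z >= threshold else 0.0


def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^-z), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z):
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)


def numerical_slope(f, z, h=1e-4):
    """Central-difference estimate of f'(z) (hypothetical helper for illustration)."""
    return (f(z + h) - f(z - h)) / (2 * h)


# Sample points avoid z = 0, where the step function jumps
for z in [-2.0, -0.5, 0.5, 2.0]:
    print(
        f"z={z:+.1f}  step={step(z):.0f} (slope {numerical_slope(step, z):.2f})"
        f"  sigmoid={sigmoid(z):.3f} (slope {numerical_slope(sigmoid, z):.3f})"
        f"  relu={relu(z):.1f}"
    )
```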

Limitations of Single-Layer Perceptrons

Single-layer perceptrons, while valuable in specific contexts, exhibit certain limitations that must be considered when applying them in machine learning and pattern recognition tasks. These constraints include:

- Linear Separability Constraint: Single-layer perceptrons are restricted to handling linearly separable data, which limits their ability to model complex, non-linear relationships between input and output.

- Inability to Handle Complex Patterns: They struggle with capturing intricate patterns or recognizing non-linear associations in data, making them unsuitable for many real-world applications.

- Inefficient Function Approximation: Single-layer perceptrons struggle to efficiently approximate certain functions, especially those that require more intricate transformations or complex decision boundaries.

- Limited Representation Power: Compared to multi-layer neural networks, single-layer perceptrons have restricted representation capabilities, which can hinder their performance on intricate tasks.

- Convergence Issues: When the training data are not linearly separable, the perceptron learning rule never converges; the weights keep changing from epoch to epoch, so training has to be stopped after a fixed number of epochs without reaching a correct solution.

- Lack of Hierarchical Learning: Single-layer perceptrons lack the capacity to learn hierarchical features, limiting their ability to model high-level abstractions in data.
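
The linear separability and convergence limitations can be seen with two small truth tables. The plain-Python sketch below uses a compact version of the perceptron learning rule (zero initialization, step activation, learning rate 1.0, and a 100-epoch limit, all assumptions) through a hypothetical `train_perceptron` helper. On OR, which is linearly separable, training reaches an epoch with no mistakes; on XOR, which no single straight line can separate, it never does, so the loop ends only at the epoch limit.

```python
def step(z):
    """Step activation with threshold 0."""
    return 1 if z >= 0 else 0


def train_perceptron(X, y, alpha=1.0, max_epochs=100):
    """Perceptron learning rule; returns (w, b, converged)."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in zip(X, y):
            error = target - step(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)
            if error != 0:
                mistakes += 1
                w = [w_i + alpha * error * x_i for w_i, x_i in zip(w, x)]
                b += alpha * error
        if mistakes == 0:
            return w, b, True   # an epoch with no mistakes: converged
    return w, b, False          # epoch limit reached without converging


X = [[0, 0], [0, 1], [1, 0], [1, 1]]
print("OR converged: ", train_perceptron(X, [0, 1, 1, 1])[2])  # → True
print("XOR converged:", train_perceptron(X, [0, 1, 1, 0])[2])  # → False
```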

Applications of Single-Layer Perceptrons

Despite these limitations, single-layer perceptrons find their niche in practical applications where their simplicity matches the task requirements. Some of the key applications include:

- Basic Linear Classification: Single-layer perceptrons find utility in straightforward linear classification tasks where data can be separated by a single hyperplane.

- Logical Operations: They excel in performing elementary logical operations like AND, OR, and NOT, which are inherently linearly separable.

- Simple Pattern Recognition: For tasks involving simple and linear patterns, single-layer perceptrons can be effective.

- Binary Decision Problems: They are well-suited for binary decision problems where the goal is to categorize data into one of two classes based on linear criteria.

- Linear Regression: With a linear (identity) activation in place of the step function, a single-layer network can perform linear regression, approximating linear functions to predict continuous output values.
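
The logical-operations case can be checked by setting the weights by hand instead of training them. In this plain-Python sketch the weight and bias values are hypothetical, chosen for illustration (many other values work): AND fires only when both inputs are 1, OR fires when at least one input is 1, and NOT inverts a single input.

```python
def step(z):
    """Step activation with threshold 0."""
    return 1 if z >= 0 else 0


def perceptron(x, w, b):
    """Single perceptron: step(sum_i w_i * x_i + b)."""
    return step(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)


# Hypothetical hand-picked (weights, bias) pairs; many other values also work
AND = ([1, 1], -1.5)  # fires only when both inputs are 1
OR = ([1, 1], -0.5)   # fires when at least one input is 1
NOT = ([-1], 0.5)     # fires only when the input is 0

for a in (0, 1):
    for c in (0, 1):
        print(a, c, "AND:", perceptron([a, c], *AND), "OR:", perceptron([a, c], *OR))
for a in (0, 1):
    print(a, "NOT:", perceptron([a], *NOT))
```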

While single-layer perceptrons have limitations in handling complex data relationships, they remain valuable tools in scenarios where linear separability and simplicity are paramount, such as in basic classification tasks and logical operations. However, for more intricate and non-linear problems, multi-layer neural networks offer enhanced modeling capabilities.