Advanced NLP Techniques

Advanced Natural Language Processing (NLP) techniques refer to a set of methods and approaches that go beyond the basics of NLP to tackle more complex and nuanced language-related tasks. These techniques leverage machine learning, deep learning, and other AI methodologies to understand, generate, and manipulate human language in more sophisticated ways. Some advanced NLP techniques include:

Named Entity Recognition

Named Entity Recognition (NER) is a fundamental task in Natural Language Processing (NLP) that involves identifying and classifying named entities (NEs) within a text. Named entities are words or phrases that refer to specific people, organizations, locations, dates, monetary values, and similar real-world items. NER plays a crucial role in extracting structured information from unstructured text, enabling machines to work with real-world information.

Here's how NER works:

- Text Input: NER begins with a piece of text, which can be a sentence, paragraph, or entire document. This text can be in the form of news articles, social media posts, medical records, or any other textual data.

- Entity Recognition: The NER system processes the text and identifies words or phrases that represent named entities. These named entities can belong to predefined categories such as:

- PERSON: Names of people.

- ORGANIZATION: Names of companies, institutions, or organizations.

- LOCATION: Names of places, cities, countries, or geographic regions.

- DATE: References to specific dates or time expressions.

- MONEY: Monetary values, including currency symbols.

- PERCENT: Percentage values.

- PRODUCT: Names of products or goods.

- EVENT: Names of events, conferences, or gatherings.

- LANGUAGE: Names of languages.

- And more, depending on the application.

- Entity Classification: After identifying named entities, the NER system classifies them into the appropriate categories. For example:

- "John Smith" might be classified as a PERSON.

- "Apple Inc." might be classified as an ORGANIZATION.

- "New York" might be classified as a LOCATION.

- "January 1, 2023" might be classified as a DATE.

- Output: The NER system typically provides the recognized named entities along with their corresponding categories. This structured output allows applications to extract specific information from text, such as extracting names of people, organizations, or dates from news articles.

Example:

Consider the following sentence:

"Apple Inc. was founded by Steve Jobs, Steve Wozniak, and Ronald Wayne on April 1, 1976, in Cupertino, California."

In this sentence, a NER system would recognize and classify the following named entities:

- "Apple Inc.": ORGANIZATION

- "Steve Jobs": PERSON

- "Steve Wozniak": PERSON

- "Ronald Wayne": PERSON

- "April 1, 1976": DATE

- "Cupertino, California": LOCATION
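The recognition and classification steps can be illustrated with a minimal sketch. It assumes a tiny hand-written gazetteer (`GAZETTEER`) and a single date pattern, both hypothetical and chosen only to cover the example sentence; real NER systems usually learn these decisions from annotated data rather than from lookup tables.

```python
import re

# Hypothetical toy gazetteer covering only the example sentence.
GAZETTEER = {
    "Apple Inc.": "ORGANIZATION",
    "Steve Jobs": "PERSON",
    "Steve Wozniak": "PERSON",
    "Ronald Wayne": "PERSON",
    "Cupertino, California": "LOCATION",
}

# Matches dates written like "April 1, 1976".
DATE_PATTERN = re.compile(
    r"\b(?:January|February|March|April|May|June|July|August|"
    r"September|October|November|December) \d{1,2}, \d{4}\b"
)

def recognize_entities(text):
    """Return (start, entity_text, label) tuples sorted by position in the text."""
    found = []
    for phrase, label in GAZETTEER.items():
        for match in re.finditer(re.escape(phrase), text):
            found.append((match.start(), phrase, label))
    for match in DATE_PATTERN.finditer(text):
        found.append((match.start(), match.group(), "DATE"))
    return sorted(found)

sentence = ("Apple Inc. was founded by Steve Jobs, Steve Wozniak, and Ronald Wayne "
            "on April 1, 1976, in Cupertino, California.")
entities = [(text, label) for _, text, label in recognize_entities(sentence)]
for text, label in entities:
    print(f"{text}: {label}")
```

A lookup table like this cannot handle names it has never seen, which is the main reason practical systems rely on statistical or neural models.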

NER is widely used in various applications, including information retrieval, document indexing, question answering systems, chatbots, sentiment analysis, and more, where understanding and extracting specific information from unstructured text data is essential.

Sentiment Analysis

Introduction:

Sentiment analysis, also known as opinion mining, is a technique used in Natural Language Processing (NLP) to determine the sentiment or emotional tone expressed in a piece of text. It involves analyzing and classifying subjective information, such as opinions, attitudes, and emotions, expressed by individuals towards a particular topic, product, or service. Sentiment analysis plays a crucial role in understanding public opinion, customer feedback, social media trends, and market research.

Explanation:

Sentiment analysis utilizes various NLP techniques to extract and analyze sentiment from text data. It involves several steps, including text preprocessing, feature extraction, sentiment classification, and evaluation.

- Text Preprocessing: The first step is to clean and preprocess the text data by removing noise, such as punctuation, special characters, and stop words. Negation words such as "not" and "never" are usually kept, because removing them can reverse the apparent sentiment. Preprocessing reduces the complexity of the data and can improve the accuracy of sentiment analysis.

- Feature Extraction: After preprocessing, relevant features are extracted from the text, such as words, phrases, or n-grams. These features serve as inputs for sentiment classification algorithms.

- Sentiment Classification: Sentiment classification involves assigning a sentiment label to the text based on the extracted features. This can be done using various techniques, including rule-based approaches, machine learning algorithms, or deep learning models. Rule-based approaches use predefined rules and lexicons to classify sentiment, while machine learning and deep learning models learn patterns and relationships from labeled training data.

- Evaluation: The final step is to evaluate the performance of the sentiment analysis model. This is done by comparing the predicted sentiment labels with the ground truth labels. Evaluation metrics such as accuracy, precision, recall, and F1 score are commonly used to assess the model's performance.
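To make the evaluation metrics concrete, here is a small sketch that computes them for a binary sentiment task. The label lists are made-up illustrative data, and the function name `binary_metrics` is an assumption for this example.

```python
def binary_metrics(y_true, y_pred, positive="positive"):
    """Compute accuracy, precision, recall, and F1 for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Illustrative ground-truth and predicted labels (not real results).
y_true = ["positive", "positive", "negative", "negative", "positive", "negative"]
y_pred = ["positive", "negative", "negative", "positive", "positive", "negative"]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Precision answers "of the texts predicted positive, how many were truly positive", while recall answers "of the truly positive texts, how many were found"; F1 is their harmonic mean.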

Example:

Let's consider an example of sentiment analysis in the context of customer reviews for a restaurant. Suppose we have a customer review that says, "The food at XYZ restaurant was delicious, and the service was excellent. I highly recommend it!"

Using sentiment analysis, the text would be processed and classified as positive sentiment. The positive sentiment is inferred from the positive words like "delicious," "excellent," and "highly recommend." This analysis helps in understanding the customer's positive experience with the restaurant.

Similarly, if another review says, "The food at XYZ restaurant was terrible, and the service was slow. I would never go back!", sentiment analysis would classify it as negative sentiment. The negative sentiment is inferred from words like "terrible," "slow," and "never go back." This analysis helps in identifying areas of improvement for the restaurant.
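The two reviews can be classified with a minimal rule-based sketch. The word lists `POSITIVE` and `NEGATIVE` are hypothetical and cover only these examples; a practical lexicon would be far larger, and learned models would replace the simple counting used here.

```python
import re

# Hypothetical toy lexicons for illustration only.
POSITIVE = {"delicious", "excellent", "recommend"}
NEGATIVE = {"terrible", "slow", "never"}

def classify_sentiment(text):
    """Label text by counting positive and negative lexicon words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

review_1 = ("The food at XYZ restaurant was delicious, and the service was "
            "excellent. I highly recommend it!")
review_2 = ("The food at XYZ restaurant was terrible, and the service was slow. "
            "I would never go back!")
print(classify_sentiment(review_1))
print(classify_sentiment(review_2))
```

Word counting of this kind ignores context, so phrases like "not delicious" would be misread; that limitation is one motivation for machine learning approaches.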

By analyzing a large volume of customer reviews using sentiment analysis, businesses can gain insights into customer satisfaction, identify trends, and make data-driven decisions to enhance their products or services.

Overall, sentiment analysis is a powerful NLP technique that enables organizations to understand and leverage the sentiment expressed in text data, leading to improved customer experiences, better decision-making, and enhanced business outcomes.

Text Summarization

Introduction:

Text summarization is a crucial task in Natural Language Processing (NLP) that aims to condense a given piece of text while retaining its key information. It involves the extraction or generation of a concise summary that captures the main points and important details of the original text. Text summarization plays a vital role in various applications, such as news summarization, document summarization, and even in chatbots or virtual assistants.

Explanation:

There are two main approaches to text summarization: extractive and abstractive summarization.

- Extractive Summarization: Extractive summarization involves selecting and combining the most important sentences or phrases from the original text to create a summary. This approach relies on identifying salient information, such as keywords, named entities, or sentences with high information content. Extractive summarization techniques often use statistical or graph-based algorithms to rank sentences based on their relevance and importance. The selected sentences are then combined, unchanged, to form the summary.

- Abstractive Summarization: Abstractive summarization, on the other hand, aims to generate a summary by understanding the meaning of the original text and expressing it in a concise and coherent manner. This approach involves natural language generation techniques, typically neural language models. Abstractive summarization goes beyond simply extracting sentences and allows for more flexibility in summarizing the text. It can produce sentences and phrasing that do not appear in the original text but still capture its essence.

Example:

Consider the following news article:

"Scientists have discovered a new species of butterfly in the Amazon rainforest. The butterfly, named Amazonia Blue, has vibrant blue wings with intricate patterns. It is believed to be endemic to the region and plays a crucial role in pollination. The discovery of this species highlights the importance of preserving the Amazon rainforest and its unique biodiversity."

Extractive summary: "Scientists have discovered a new species of butterfly in the Amazon rainforest. The discovery of this species highlights the importance of preserving the Amazon rainforest and its unique biodiversity."

Abstractive summary: "Researchers have identified Amazonia Blue, a vividly patterned blue butterfly thought to live only in the Amazon rainforest, where it aids pollination; the find strengthens the case for protecting the region's biodiversity."

In this example, the extractive summary reuses two sentences from the original text verbatim, while the abstractive summary conveys the same content in newly written, more compact wording.
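A minimal sketch of extractive summarization follows. It uses a simple word-frequency score to rank sentences, which is only one of many possible statistical ranking schemes; the stop-word list and function names are assumptions made for this illustration.

```python
import re
from collections import Counter

# Small illustrative stop-word list (an assumption, not a standard resource).
STOP_WORDS = {"a", "an", "the", "of", "in", "to", "and", "is", "it", "its",
              "this", "has", "have", "with", "be"}

def split_sentences(text):
    """Split text into sentences at ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def content_words(text):
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]

def extractive_summary(text, num_sentences=2):
    """Keep the highest-scoring sentences, in their original order."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        # Average document frequency of the sentence's content words.
        return sum(freq[w] for w in words) / max(len(words), 1)

    top = sorted(sentences, key=score, reverse=True)[:num_sentences]
    return [s for s in sentences if s in top]

article = (
    "Scientists have discovered a new species of butterfly in the Amazon "
    "rainforest. The butterfly, named Amazonia Blue, has vibrant blue wings "
    "with intricate patterns. It is believed to be endemic to the region and "
    "plays a crucial role in pollination. The discovery of this species "
    "highlights the importance of preserving the Amazon rainforest and its "
    "unique biodiversity."
)
summary = extractive_summary(article)
print(" ".join(summary))
```

Because the output consists only of sentences copied from the input, the result is extractive by construction; an abstractive system would need a text generation model instead.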

Text summarization techniques continue to evolve, leveraging advancements in NLP and AI, and are essential in enabling efficient information retrieval and comprehension in various domains.

Language Generation

Introduction:

Language generation is a fundamental concept in Natural Language Processing (NLP) that focuses on the generation of human-like text or speech by machines. It involves the use of advanced algorithms and techniques to generate coherent and contextually relevant sentences, paragraphs, or even entire documents. Language generation plays a crucial role in various applications, such as chatbots, virtual assistants, machine translation, and content generation.

Explanation:

Language generation in NLP uses models trained to produce text that reads as fluent and human-like. It goes beyond simple rule-based systems and relies on machine learning techniques, particularly deep neural networks, to capture the complexities of human language.

One popular approach to language generation is the use of Recurrent Neural Networks (RNNs) and their variants, such as Long Short-Term Memory (LSTM) networks or Gated Recurrent Units (GRUs). These models are trained on large amounts of text data and learn to generate text by predicting the next word or character based on the context provided.
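The idea of generating text by repeatedly predicting the next word can be shown with a much simpler stand-in than an RNN: a bigram count model. This sketch is not a neural network; it only illustrates the predict-then-append loop, and the three-sentence corpus is made up for the example.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the corpus."""
    model = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for current, following in zip(tokens, tokens[1:]):
            model[current][following] += 1
    return model

def generate(model, start, max_words=5):
    """Greedily append the most frequent next word until max_words is reached."""
    words = [start]
    for _ in range(max_words - 1):
        followers = model.get(words[-1])
        if not followers:
            break  # no known continuation for this word
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

# Made-up miniature training corpus.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram_model(corpus)
generated = generate(model, "the")
print(generated)
```

An RNN replaces the count table with a learned hidden state that summarizes the whole preceding context, and sampling from the predicted distribution usually replaces the greedy choice.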

Another approach is the use of Generative Adversarial Networks (GANs), which consist of a generator network and a discriminator network. The generator network generates text samples, while the discriminator network tries to distinguish between real and generated text. Through an adversarial training process, the generator network learns to generate more realistic and coherent text.

Example:

Let's consider an example of language generation in the context of a chatbot. Suppose we have a chatbot designed to assist users with travel-related queries. When a user asks, "What are some popular tourist attractions in Paris?", the chatbot can generate a response like:

"Paris is known for its iconic landmarks and attractions. Some popular tourist spots include the Eiffel Tower, Louvre Museum, Notre-Dame Cathedral, and the Champs-Élysées. These places offer a glimpse into the rich history and culture of the city."

In this example, the chatbot generates a coherent and informative response by leveraging language generation techniques. It understands the user's query, retrieves relevant information from its knowledge base, and generates a response that provides useful information about popular tourist attractions in Paris.

Language generation in NLP enables machines to communicate effectively with humans, providing valuable information and assistance in various domains. It continues to evolve with advancements in deep learning and other AI techniques, making it an exciting area of research and development in the field of Artificial Intelligence.

Machine Translation

Introduction:

Machine Translation (MT) is a subfield of Natural Language Processing (NLP) that focuses on the automatic translation of text or speech from one language to another. It aims to bridge the language barrier by enabling communication and understanding between people who speak different languages. MT systems have evolved significantly over the years, thanks to advancements in computational power, linguistic resources, and machine learning techniques.

Explanation:

Machine Translation uses algorithms and statistical models to analyze the structure and meaning of sentences in one language and generate equivalent sentences in another. In the classical transfer-based approach, the process consists of three main steps: analysis, transfer, and generation.

- Analysis: In this step, the source language sentence is analyzed to identify its grammatical structure, parts of speech, and semantic meaning. This involves tokenization, morphological analysis, syntactic parsing, and semantic analysis. The goal is to extract as much linguistic information as possible from the source sentence.

- Transfer: Once the analysis is complete, the system applies rules or patterns to convert the analyzed information into a representation that can be easily transferred to the target language. This step involves mapping the source language structures and meanings to their corresponding structures and meanings in the target language.

- Generation: In the final step, the system generates the translated sentence in the target language based on the transferred information. This involves selecting appropriate words, arranging them in a grammatically correct manner, and ensuring the translated sentence conveys the intended meaning.
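The three steps can be sketched with a toy English-to-French pipeline. Everything here is a deliberately tiny assumption: the three-word `LEXICON`, the hard-coded feminine article, and the single reordering rule (adjective before noun becomes noun before adjective) stand in for the large rule sets a real transfer-based system would need.

```python
# Hypothetical toy lexicon: English word -> (French word, part of speech).
LEXICON = {
    "the": ("la", "DET"),      # simplification: assumes a feminine noun follows
    "red": ("rouge", "ADJ"),
    "car": ("voiture", "NOUN"),
}

def analyze(sentence):
    """Analysis: tokenize and tag each source word with its part of speech."""
    return [(word, LEXICON[word][1]) for word in sentence.lower().split()]

def transfer(tagged):
    """Transfer: map words to French and swap ADJ NOUN into NOUN ADJ."""
    target = [(LEXICON[word][0], pos) for word, pos in tagged]
    i = 0
    while i < len(target) - 1:
        if target[i][1] == "ADJ" and target[i + 1][1] == "NOUN":
            target[i], target[i + 1] = target[i + 1], target[i]
            i += 2
        else:
            i += 1
    return target

def generate(target):
    """Generation: produce the surface string in the target language."""
    return " ".join(word for word, _ in target)

def translate(sentence):
    return generate(transfer(analyze(sentence)))

translation = translate("the red car")
print(translation)  # la voiture rouge
```

Neural machine translation systems learn the equivalent of all three steps jointly from parallel text instead of relying on hand-written rules.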

Example:

Let's consider an example to illustrate machine translation. Suppose we have the following sentence in English:

"Can you please pass me the salt?"

If we want to translate this sentence into French, a machine translation system would go through the analysis, transfer, and generation steps to produce the translated sentence:

"Peux-tu s'il te plaît me passer le sel ?"

In this example, the machine translation system analyzes the English sentence and identifies the verb "pass," the indirect object "me," and the direct object "salt." It then transfers this information to the French structure, selecting the verb "passer," the pronoun "me," and the noun "sel," and placing the pronoun before the verb as French grammar requires. Finally, it generates the translated sentence, which conveys the same meaning as the original sentence in English.

It's important to note that machine translation is a challenging task due to the complexities of language, including idiomatic expressions, cultural nuances, and ambiguity. While machine translation systems have made significant progress, they still face limitations and may produce translations that are not always accurate or natural-sounding. Ongoing research and advancements in NLP techniques, such as neural machine translation, aim to improve the quality and fluency of machine translations.

Coreference Resolution

Introduction:

In the field of Natural Language Processing (NLP), coreference resolution is a crucial task that aims to identify and link expressions within a text that refer to the same entity. It plays a vital role in understanding the relationships between different parts of a text and is essential for various NLP applications such as information extraction, question answering, and text summarization. Coreference resolution helps in creating a coherent representation of the text by resolving pronouns, definite noun phrases, and other referring expressions to their corresponding antecedents.

Explanation:

Coreference resolution involves two main steps: mention detection and mention clustering. Mention detection involves identifying all the expressions in a text that refer to an entity. These expressions can be pronouns (e.g., he, she, it), definite noun phrases (e.g., the car, this book), or other referring expressions. Mention clustering, on the other hand, aims to group together all the mentions that refer to the same entity.

To perform coreference resolution, various techniques and algorithms have been developed. These techniques can be rule-based, statistical, or based on machine learning approaches. Rule-based approaches rely on handcrafted linguistic rules to identify and link mentions. Statistical approaches utilize features such as gender, number, and syntactic patterns to determine coreference. Machine learning approaches involve training models on annotated datasets to learn patterns and make predictions.

Example:

Consider the following example:

"John went to the store. He bought some groceries."

In this example, the pronoun "He" refers to the entity mentioned earlier, which is "John." Coreference resolution would identify the pronoun "He" as a mention and link it to the antecedent "John." This linking helps in understanding that both mentions refer to the same entity.

Coreference resolution becomes more challenging when dealing with ambiguous references or complex sentences. For instance:

"John saw Mary, and he told her that he loves her."

In this example, there are multiple pronouns ("he" and "her") that need to be resolved to their respective antecedents. Coreference resolution algorithms need to consider the context and syntactic structure to correctly link the pronouns to the appropriate entities.
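A minimal rule-based sketch shows how gender agreement can drive pronoun resolution in both examples. The name and pronoun tables are hypothetical, and the single rule used here (link each pronoun to the most recent name of matching gender) is far simpler than what real systems apply.

```python
import re

# Hypothetical lookup tables covering only the example sentences.
NAME_GENDER = {"John": "male", "Mary": "female"}
PRONOUN_GENDER = {"he": "male", "him": "male", "his": "male",
                  "she": "female", "her": "female"}

def resolve_pronouns(text):
    """Link each pronoun to the most recent preceding name of matching gender."""
    last_seen = {}  # gender -> most recently mentioned name
    links = []
    for token in re.findall(r"[A-Za-z]+", text):
        if token in NAME_GENDER:
            last_seen[NAME_GENDER[token]] = token
        elif token.lower() in PRONOUN_GENDER:
            antecedent = last_seen.get(PRONOUN_GENDER[token.lower()])
            links.append((token, antecedent))
    return links

simple = resolve_pronouns("John went to the store. He bought some groceries.")
harder = resolve_pronouns("John saw Mary, and he told her that he loves her.")
print(simple)
print(harder)
```

The rule breaks down as soon as two candidates share a gender (for example, "John saw Peter, and he waved"), which is where syntactic and contextual cues become necessary.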

Overall, coreference resolution is a fundamental task in NLP that enables machines to understand the relationships between different parts of a text and create a coherent representation. It is an active area of research, and advancements in this field continue to improve the performance of NLP systems.

Dependency Parsing

Introduction:

Dependency parsing is a fundamental technique in Natural Language Processing (NLP) that aims to analyze the grammatical structure of a sentence by identifying the relationships between words. It involves determining the syntactic dependencies between words, where each word is assigned a specific role or function in the sentence. Dependency parsing plays a crucial role in various NLP tasks, such as information extraction, machine translation, sentiment analysis, and question answering.

Explanation:

Dependency parsing represents the relationships between words in a sentence using directed edges, known as dependencies. These dependencies form a tree-like structure called a dependency tree or a syntactic parse tree. The nodes of the tree represent the words in the sentence, and the edges represent the grammatical relationships between them.

In dependency parsing, each word in the sentence is assigned a specific role or function, such as a subject, object, modifier, or conjunction. The dependencies between words are typically represented using labels that describe the relationship between the head word (governing word) and the dependent word.

There are different algorithms and models used for dependency parsing, including rule-based approaches, statistical models, and neural network-based models. These models learn from annotated data to predict the most likely dependency structure for a given sentence.

Example:

Consider the following sentence: "John eats an apple."

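The dependency parse tree for this sentence can be written as a list of words, each with its part-of-speech tag and its relation to its head word:

- eats (VERB): root of the sentence

- John (NOUN): subject of "eats"

- apple (NOUN): object of "eats"

- an (DET): determiner of "apple"

In this example, "eats" is the main verb and acts as the root of the tree. "John" and "apple" depend directly on it, with "John" being the subject and "apple" being the object, while "an" depends on "apple." The labels in parentheses, such as NOUN, are the part-of-speech tags for each word.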

By analyzing the dependency parse tree, we can understand the grammatical structure of the sentence and the relationships between the words. This information can be used for various NLP tasks, such as extracting information about who is performing the action and what is being acted upon.
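As a sketch of how such a tree can be used, the parse of "John eats an apple." is written out by hand below as a list of tokens with head indices. The data structure, the relation names, and the helper functions are assumptions for this illustration, not the output format of any particular parser.

```python
# Hand-annotated parse: "head" is the index of the governing word (None for root).
parse = [
    {"word": "John", "pos": "NOUN", "head": 1, "relation": "subject"},
    {"word": "eats", "pos": "VERB", "head": None, "relation": "root"},
    {"word": "an", "pos": "DET", "head": 3, "relation": "determiner"},
    {"word": "apple", "pos": "NOUN", "head": 1, "relation": "object"},
]

def find_root(parse):
    """Return the index of the token that has no head."""
    return next(i for i, token in enumerate(parse) if token["head"] is None)

def find_dependent(parse, head_index, relation):
    """Return the word attached to head_index with the given relation, if any."""
    for token in parse:
        if token["head"] == head_index and token["relation"] == relation:
            return token["word"]
    return None

root = find_root(parse)
action = parse[root]["word"]
who = find_dependent(parse, root, "subject")
what = find_dependent(parse, root, "object")
print(f"{who} -> {action} -> {what}")
```

Following the subject and object edges from the root verb yields a simple who-did-what triple, which is a common starting point for information extraction.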

Dependency parsing is a powerful technique in NLP that enables machines to understand the syntactic structure of sentences, facilitating more advanced language processing and analysis.

Question Answering

Question Answering (QA) focuses on developing systems capable of understanding and responding to questions posed in natural language. The goal of QA is to enable machines to comprehend human queries and provide accurate and relevant answers, similar to how a human expert would respond.

QA systems employ various techniques from NLP, including information retrieval, text mining, and machine learning, to process and analyze large amounts of textual data in order to generate appropriate responses. These systems typically consist of three main components: a question parser, an information retrieval module, and an answer generation module.

The question parser is responsible for understanding the structure and semantics of the question. It breaks down the question into its constituent parts, such as the subject, object, and any specific keywords or entities mentioned. This step is crucial for identifying the type of question being asked and determining the appropriate approach for finding the answer.

The information retrieval module retrieves relevant information from a knowledge base or a large corpus of text. It uses techniques such as keyword matching, semantic similarity, and entity recognition to identify relevant documents or passages that may contain the answer. This module aims to narrow down the search space and retrieve the most relevant information to improve the accuracy of the answer.

The answer generation module takes the retrieved information and generates a concise and accurate response. It may involve techniques such as summarization, paraphrasing, or even generating a new sentence based on the retrieved information. The answer generation module also considers the context of the question and the user's intent to provide a more meaningful and relevant response.

Example:

Let's consider an example to illustrate how a QA system works. Suppose we have a QA system designed to answer questions about famous historical figures. A user poses the following question: "Who was the first President of the United States?"

The question parser identifies the question type as a "who" question and recognizes the keywords "first," "President," and "United States." The information retrieval module then searches through a database of historical figures and retrieves relevant information about the first President of the United States, which is George Washington.

Finally, the answer generation module takes the retrieved information and generates the response: "The first President of the United States was George Washington."

In this example, the QA system successfully understands the question, retrieves the relevant information, and generates an accurate answer. This demonstrates the power of QA systems in understanding and responding to natural language queries.
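The three components can be sketched as a small pipeline. The three-sentence knowledge base, the stop-word list, and the keyword-overlap retrieval are assumptions chosen for brevity; the answer step simply returns the best passage rather than composing a new sentence.

```python
import re

# Tiny illustrative knowledge base.
KNOWLEDGE_BASE = [
    "George Washington was the first President of the United States.",
    "Abraham Lincoln was the 16th President of the United States.",
    "Paris is the capital of France.",
]
STOP_WORDS = {"who", "what", "was", "is", "the", "of", "a", "an"}

def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())

def parse_question(question):
    """Question parser: return the question word and the content keywords."""
    tokens = tokenize(question)
    question_type = tokens[0] if tokens else ""
    keywords = {t for t in tokens if t not in STOP_WORDS}
    return question_type, keywords

def retrieve(keywords):
    """Information retrieval: pick the passage sharing the most keywords."""
    return max(KNOWLEDGE_BASE, key=lambda p: len(keywords & set(tokenize(p))))

def answer(question):
    """Answer generation (simplified): return the retrieved passage as-is."""
    _, keywords = parse_question(question)
    return retrieve(keywords)

question = "Who was the first President of the United States?"
question_type, keywords = parse_question(question)
result = answer(question)
print(question_type, sorted(keywords))
print(result)
```

The word "first" is what separates the two president passages here, which shows why keeping informative keywords matters for retrieval.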

Topic Modeling

Introduction:

In the field of Natural Language Processing (NLP), Topic Modeling is a powerful technique used to uncover hidden themes or topics within a collection of documents. It is widely employed in various applications such as information retrieval, text mining, and recommendation systems. By automatically identifying and extracting the underlying topics, Topic Modeling enables us to gain insights into large volumes of text data and organize it in a meaningful way.

Explanation:

Topic Modeling is a statistical modeling technique that aims to discover the latent semantic structure of a corpus. It assumes that each document in the corpus is a mixture of different topics, and each topic is characterized by a distribution of words. The goal is to uncover these topics and their associated word distributions without any prior knowledge or supervision.

One of the most popular algorithms for Topic Modeling is Latent Dirichlet Allocation (LDA). LDA assumes that each document is generated through a two-step process: first, a distribution over topics is drawn for the document from a Dirichlet prior; then, for each word position, a topic is drawn from that distribution and a word is drawn from the chosen topic's word distribution. Fitting the model reverses this process: an inference algorithm, such as Gibbs sampling or variational inference, iteratively updates the topic assignments and word distributions until they stabilize.
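The generative process that LDA assumes can be simulated in a few lines. This sketch only generates a document from fixed, hand-written topics; it does not perform inference, and the two topics, their word probabilities, and the `alpha` value are all made-up assumptions.

```python
import random

# Hypothetical topics with hand-set word probabilities.
TOPICS = {
    "ai": {"neural": 0.4, "learning": 0.4, "algorithms": 0.2},
    "security": {"encryption": 0.5, "hacking": 0.3, "privacy": 0.2},
}

def sample_dirichlet(alpha, size, rng):
    """Draw from a symmetric Dirichlet by normalizing gamma samples."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(size)]
    total = sum(draws)
    return [d / total for d in draws]

def generate_document(num_words, alpha=0.5, seed=0):
    """Generate one document following the two-step LDA story."""
    rng = random.Random(seed)
    topic_names = list(TOPICS)
    # Step 1: draw this document's mixture over topics.
    theta = sample_dirichlet(alpha, len(topic_names), rng)
    words = []
    for _ in range(num_words):
        # Step 2: draw a topic for this position, then a word from that topic.
        topic = rng.choices(topic_names, weights=theta)[0]
        vocabulary = TOPICS[topic]
        word = rng.choices(list(vocabulary), weights=list(vocabulary.values()))[0]
        words.append(word)
    return theta, words

theta, words = generate_document(num_words=8)
print(theta)
print(words)
```

Topic modeling works in the opposite direction: given only the words of many documents, it estimates topic mixtures and topic word distributions that could plausibly have produced them.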

Example:

Let's consider a collection of news articles related to technology. We want to uncover the underlying topics within this corpus using Topic Modeling. After applying LDA, we might discover the following topics:

- Topic 1: Artificial Intelligence and Machine Learning

- Words: AI, machine learning, deep learning, neural networks, algorithms

- Topic 2: Data Science and Analytics

- Words: data analysis, data visualization, predictive modeling, statistics, big data

- Topic 3: Cybersecurity and Privacy

- Words: hacking, encryption, network security, privacy, data breaches

- Topic 4: Internet of Things (IoT)

- Words: smart devices, sensors, connectivity, automation, IoT platforms

By analyzing the word distributions of these topics, we can understand the main themes present in the collection of news articles. This information can be used for various purposes, such as organizing the articles into relevant categories, recommending related articles to users, or extracting key insights from the data.

Topic Modeling provides a valuable tool for understanding and organizing large volumes of text data, enabling us to uncover hidden patterns and gain meaningful insights. Its applications extend beyond news articles and can be applied to various domains, making it an essential technique in the field of Natural Language Processing.

Conversational AI

Introduction:

Conversational AI is a branch of Natural Language Processing (NLP) that focuses on developing intelligent systems capable of engaging in human-like conversations. It aims to enable machines to understand, interpret, and respond to natural language inputs, allowing for more interactive and dynamic interactions between humans and machines. Conversational AI has gained significant attention in recent years due to its potential applications in chatbots, virtual assistants, customer service, and other areas where human-like conversation is desired.

Explanation:

Conversational AI systems utilize various NLP techniques to understand and generate human-like responses. These techniques include natural language understanding (NLU), natural language generation (NLG), dialogue management, and sentiment analysis, among others.

NLU is responsible for extracting meaning and intent from user inputs. It involves techniques such as named entity recognition, part-of-speech tagging, and syntactic parsing to understand the structure and semantics of the user's message. NLU helps the system comprehend the user's intent and extract relevant information from their input.

NLG, on the other hand, focuses on generating human-like responses. It involves techniques such as text generation, summarization, and paraphrasing to produce coherent and contextually appropriate responses. NLG ensures that the system's output is not only accurate but also natural-sounding and engaging.

Dialogue management plays a crucial role in Conversational AI by maintaining the flow of conversation. It involves techniques such as state tracking, context management, and response selection. Dialogue management ensures that the system can maintain context, handle multi-turn conversations, and generate appropriate responses based on the current dialogue state.

Sentiment analysis is another important aspect of Conversational AI. It involves analyzing the sentiment or emotion expressed in user inputs or system responses. Sentiment analysis helps the system understand the user's emotional state and respond accordingly, enhancing the overall conversational experience.

Example:

Let's consider an example of Conversational AI in action. Imagine a virtual assistant designed to help users book flights. A user interacts with the assistant through a chat interface, providing their travel details and preferences. The Conversational AI system utilizes NLU techniques to understand the user's intent and extract relevant information such as the departure city, destination, travel dates, and preferred airlines.

Once the system has gathered the necessary information, it uses dialogue management techniques to maintain the conversation flow. It may ask follow-up questions to clarify ambiguous inputs or provide suggestions based on the user's preferences. The system then utilizes NLG techniques to generate responses that inform the user about available flights, prices, and other relevant details.

During the conversation, the system also employs sentiment analysis to gauge the user's satisfaction or frustration. If the user expresses dissatisfaction or confusion, the system can adapt its responses accordingly, providing additional assistance or clarifications.

Overall, Conversational AI in NLP enables the virtual assistant to engage in a dynamic and interactive conversation with the user, mimicking human-like interactions and providing a personalized and efficient experience.
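The interplay of NLU, dialogue management, and NLG in the flight-booking scenario can be sketched as follows. The regular expressions, slot names, and prompt texts are hypothetical, and sentiment analysis is left out to keep the example short.

```python
import re

REQUIRED_SLOTS = ["origin", "destination", "date"]
# Hypothetical follow-up questions, one per missing slot.
PROMPTS = {
    "origin": "Where are you flying from?",
    "destination": "Where would you like to go?",
    "date": "What date would you like to travel?",
}

def understand(message):
    """NLU: extract slot values from one user message with simple patterns."""
    slots = {}
    route = re.search(r"\bfrom (\w+) to (\w+)", message, re.IGNORECASE)
    if route:
        slots["origin"], slots["destination"] = route.group(1), route.group(2)
    date = re.search(r"\bon (\w+ \d{1,2})\b", message, re.IGNORECASE)
    if date:
        slots["date"] = date.group(1)
    return slots

def respond(state):
    """Dialogue management plus template NLG: ask for what is still missing."""
    for slot in REQUIRED_SLOTS:
        if slot not in state:
            return PROMPTS[slot]
    return (f"Searching for flights from {state['origin']} to "
            f"{state['destination']} on {state['date']}.")

state = {}  # dialogue state carried across turns
state.update(understand("I need a flight from Boston to Paris"))
first_reply = respond(state)
state.update(understand("I want to leave on June 3"))
second_reply = respond(state)
print(first_reply)
print(second_reply)
```

Keeping the extracted slots in a state dictionary is what lets the system handle the request across multiple turns instead of requiring everything in one message.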

Emotion Detection

Introduction:

Emotion detection in Natural Language Processing (NLP) is a technique that aims to identify and understand the emotions expressed in text data. It involves analyzing the linguistic and contextual cues present in the text to determine the underlying emotional state of the author. Emotion detection has gained significant attention in recent years due to its potential applications in various fields, including sentiment analysis, customer feedback analysis, mental health monitoring, and chatbot development.

Explanation:

Emotion detection in NLP typically involves two main steps: feature extraction and emotion classification. In the feature extraction phase, various linguistic and contextual features are extracted from the text, such as word choice, sentence structure, punctuation, and the presence of emoticons or emojis. These features provide valuable insights into the emotional content of the text.

Once the features are extracted, the next step is emotion classification. This involves training a machine learning model using labeled data, where each text sample is associated with a specific emotion category. The model learns to recognize patterns and relationships between the extracted features and the corresponding emotions. Common emotion categories include happiness, sadness, anger, fear, surprise, and disgust.

Example:

Let's consider an example to illustrate how emotion detection works in NLP. Suppose we have the following text:

"I am so excited about my upcoming vacation! I can't wait to relax on the beach and enjoy the beautiful scenery."

In this example, the emotion detection model would analyze the text and extract features such as the use of positive words ("excited," "enjoy," "beautiful"), exclamation marks, and the mention of a vacation. Based on these features, the model would classify the text as expressing the emotion of happiness or excitement.

Another example:

"I am devastated by the news of the natural disaster. My thoughts and prayers go out to all the affected families."

In this case, the model would identify features such as the use of negative words ("devastated," "disaster"), the expression of sympathy, and the mention of affected families. Based on these features, the model would classify the text as expressing the emotion of sadness or empathy.
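The two phases can be sketched with a lexicon lookup standing in for a trained classifier. The `EMOTION_LEXICON` below is hypothetical and covers only the two example texts; a learned model would weigh many more features than these word counts.

```python
import re
from collections import Counter

# Hypothetical toy lexicon mapping words to emotion categories.
EMOTION_LEXICON = {
    "excited": "happiness",
    "enjoy": "happiness",
    "beautiful": "happiness",
    "devastated": "sadness",
    "disaster": "sadness",
}

def extract_features(text):
    """Feature extraction: count emotion words and exclamation marks."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(EMOTION_LEXICON[t] for t in tokens if t in EMOTION_LEXICON)
    return {"emotion_word_counts": counts, "exclamation_marks": text.count("!")}

def classify_emotion(text):
    """Classification (rule-based stand-in): pick the most frequent emotion."""
    counts = extract_features(text)["emotion_word_counts"]
    return counts.most_common(1)[0][0] if counts else "neutral"

text_1 = ("I am so excited about my upcoming vacation! I can't wait to relax "
          "on the beach and enjoy the beautiful scenery.")
text_2 = ("I am devastated by the news of the natural disaster. My thoughts and "
          "prayers go out to all the affected families.")
print(classify_emotion(text_1), extract_features(text_1)["exclamation_marks"])
print(classify_emotion(text_2))
```

In a trained system, the feature dictionary would be turned into a numeric vector and passed to a classifier fitted on labeled examples.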

Emotion detection in NLP can be further enhanced by considering the context, such as the overall sentiment of the text or the author's historical data. This allows for a more nuanced understanding of emotions and can provide valuable insights into the emotional state of individuals or groups.

In conclusion, emotion detection in NLP is a powerful technique that enables machines to understand and interpret the emotional content of text data. By accurately identifying emotions, it opens up possibilities for developing more empathetic AI systems, improving customer experiences, and gaining deeper insights into human behavior and sentiment.

Style Transfer

Advanced style transfer techniques modify the writing style of a given text while retaining its original meaning, which can be useful for adapting content to different audiences or purposes.

These advanced NLP techniques often involve the use of deep learning models such as Recurrent Neural Networks (RNNs) and Transformer-based models like BERT, among others. They enable more sophisticated and human-like interactions with text data, opening up possibilities for applications in various domains such as customer service, content generation, healthcare, finance, and more.

Please note that the categorization of techniques as basic or advanced can vary depending on the context and the level of expertise of the practitioners. Basic techniques are often fundamental for most NLP tasks and are generally introduced at the beginning stages of learning NLP. Advanced techniques typically involve more complex concepts and are commonly used for more specialized or in-depth NLP tasks.

Introduction:

In the field of Natural Language Processing (NLP), style transfer refers to the process of modifying the style or tone of a given text while preserving its original content. This technique has gained significant attention in recent years due to its potential applications in various domains, including creative writing, content generation, and personalized communication. Style transfer in NLP involves leveraging advanced machine learning algorithms to transform the linguistic style of a text, allowing users to customize the tone, formality, or sentiment of their written content.

Explanation:

Style transfer in NLP typically involves two main steps: style extraction and style application. In the style extraction phase, the underlying style or attributes of a given text are identified and separated from its content. This is achieved by training machine learning models on large datasets that contain texts with different styles. These models learn to recognize and extract specific stylistic features such as sentiment, formality, or even the writing style of a particular author.

Once the style has been extracted, it can be applied to another text during the style application phase. This is done by using the extracted style information to modify the target text while preserving its original content. Various techniques can be employed for style transfer, including rule-based methods, statistical models, and more recently, deep learning approaches.

Example:

Let's consider an example to illustrate style transfer in NLP. Suppose we have a formal email that needs to be transformed into a more casual and friendly tone. The original email might look like this:

Dear Mr. Johnson,

I am writing to inquire about the status of my job application.

I would appreciate it if you could provide me with an update regarding the hiring process.

Thank you for your attention to this matter.

Sincerely,

John Smith

To transfer the style of this email to a more casual tone, the style extraction phase would identify the formal attributes such as the use of "Dear Mr. Johnson" and "Sincerely." The style application phase would then modify the text to match the desired style, resulting in something like:

Hey Mr. Johnson,

Just wanted to check in on my job application.

Any chance you could give me an update on the hiring process?

Thanks a bunch for your help!

Cheers,

John

In this example, the style transfer technique has successfully transformed the formal email into a more casual and friendly tone while preserving the original content and intent of the message.
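Of the approaches mentioned earlier, the rule-based one is the simplest to sketch. The snippet below is an illustrative assumption rather than a production method: the `RULES` list and the `to_casual` function are hypothetical names, and the rewrite rules are hand-written to cover only the phrases in the email above. Learned models would generalize far beyond such fixed patterns.

```python
import re

# Hypothetical formal -> casual rewrite rules (hand-written for this example).
# Each entry pairs a regular expression with its casual replacement.
RULES = [
    (r"\bDear\b", "Hey"),
    (r"\bI am writing to inquire about\b", "Just wanted to check in on"),
    (r"\bThank you for your attention to this matter\.",
     "Thanks a bunch for your help!"),
    (r"\bSincerely\b", "Cheers"),
]


def to_casual(text):
    """Apply each rewrite rule in order; unmatched text is left unchanged."""
    for pattern, replacement in RULES:
        text = re.sub(pattern, replacement, text)
    return text


formal_lines = [
    "Dear Mr. Johnson,",
    "I am writing to inquire about the status of my job application.",
    "Thank you for your attention to this matter.",
    "Sincerely,",
]
for line in formal_lines:
    print(to_casual(line))
```

Because the rules only touch stylistic markers (greeting, opening phrase, closing), the content of each sentence is preserved, which mirrors the separation of style from content described above.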

Overall, style transfer in NLP offers a powerful tool for customizing the style and tone of written content, enabling users to adapt their communication to different contexts and audiences.

Parsing

To process a natural-language sentence by machine, it must be represented in an appropriate form in the computer. Parsing converts a sentence into this form.

The main goal of parsing is to discover the syntactic structure of a text by examining its constituent words. This is done in the context of the underlying grammar (Huawei, 2019).

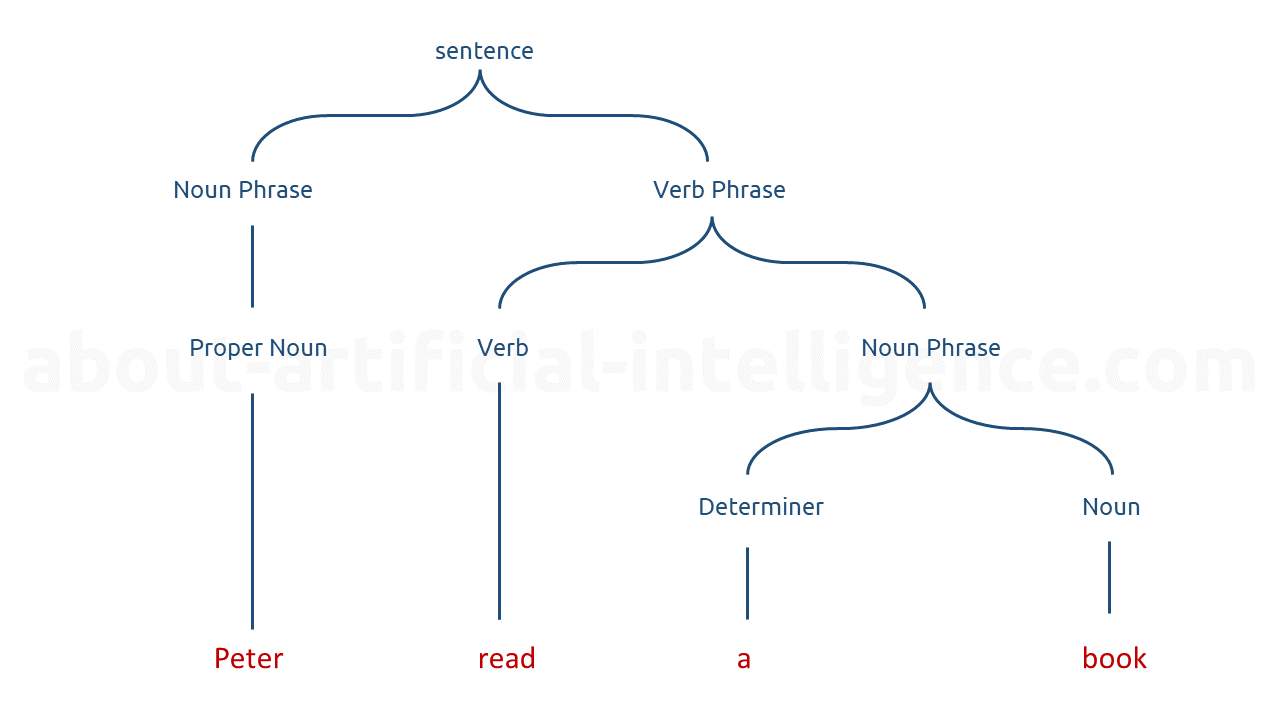

To show how the parsing process works, consider the sentence "Peter read a book." When parsing is completed, the result is a parse tree. The root of the tree is the sentence; the intermediate level contains nodes such as noun phrase and verb phrase, which are called non-terminals. Finally, the leaves of the tree, which are called terminals, are the words themselves: "Peter", "read", "a", and "book".

The following diagram shows the parsing result:

Figure: An example of a parse tree.

The majority of existing parsing algorithms are based on statistical, probabilistic, and machine learning principles.
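For the small "Peter read a book" example, a hand-written grammar is enough to make the idea concrete. The following is a minimal sketch of a top-down parser, not a statistical one: the `GRAMMAR` rules, the `LEXICON`, and the `PropN` (proper noun) category are assumptions introduced for illustration, and the parser simply returns the first derivation it finds.

```python
# Hypothetical toy grammar: each non-terminal maps to its possible expansions.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["Det", "N"], ["PropN"]],
    "VP": [["V", "NP"]],
}

# Hypothetical lexicon: each word maps to its part-of-speech category.
LEXICON = {"Peter": "PropN", "read": "V", "a": "Det", "book": "N"}


def parse(symbol, tokens, pos):
    """Try to derive `symbol` starting at tokens[pos].

    Returns (tree, next_pos) on success, or None on failure.
    """
    if symbol not in GRAMMAR:  # part-of-speech category: match one word
        if pos < len(tokens) and LEXICON.get(tokens[pos]) == symbol:
            return (symbol, tokens[pos]), pos + 1
        return None
    for production in GRAMMAR[symbol]:
        children, cur = [], pos
        for child_symbol in production:
            result = parse(child_symbol, tokens, cur)
            if result is None:
                break  # this production fails; try the next one
            subtree, cur = result
            children.append(subtree)
        else:
            return (symbol, *children), cur
    return None


def parse_sentence(sentence):
    """Parse a whole sentence; return the tree, or None if no parse covers it."""
    tokens = sentence.rstrip(".").split()
    result = parse("S", tokens, 0)
    if result is None or result[1] != len(tokens):
        return None
    return result[0]


print(parse_sentence("Peter read a book."))
```

The nested tuples correspond to the tree described above: `S` is the root, `NP` and `VP` are non-terminals, and the words are the leaves. A statistical parser would additionally score competing derivations instead of accepting the first one.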


Shallow Parsing

A shallow parser, often referred to as a "chunker", is a type of parser that lies between POS taggers and full parsers. A simple POS tagger is very fast but does not provide enough syntactic structure, whereas a full parser provides complete structure but is slow and often returns more information than is needed.

A POS tagger can be thought of as a parser that only provides the bottom level of the parse tree. A shallow parser can be thought of as a parser that returns one more level of the parse tree than a POS tagger.

In some instances, it is sufficient to know that a group of words forms a noun phrase, and you are not interested in the substructure of the tree contained in those words (i.e., which words are adjectives, determiners, nouns, etc., and how they are combined). In such cases, you can use a shallow parser to extract only the information you need, rather than spending time building the entire parse tree for the phrase.
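Noun-phrase chunking of this kind can be sketched as a single pass over POS-tagged tokens. The example below is a simplified assumption: it takes Penn Treebank style tags as input (as if produced by an upstream POS tagger), and the pattern "optional determiner, any adjectives, one or more nouns" and the function name `chunk_noun_phrases` are illustrative choices rather than a standard definition.

```python
# Noun tags assumed to follow the Penn Treebank convention.
NOUN_TAGS = {"NN", "NNS", "NNP", "NNPS"}


def chunk_noun_phrases(tagged):
    """Group tokens into noun-phrase chunks matching: DT? JJ* NOUN+.

    `tagged` is a list of (word, tag) pairs from a POS tagger.
    Only the flat chunks are returned; no internal structure is built.
    """
    chunks = []
    i, n = 0, len(tagged)
    while i < n:
        j = i
        if j < n and tagged[j][1] == "DT":  # optional determiner
            j += 1
        while j < n and tagged[j][1] == "JJ":  # any number of adjectives
            j += 1
        k = j
        while k < n and tagged[k][1] in NOUN_TAGS:  # one or more nouns
            k += 1
        if k > j:  # at least one noun was found, so tokens i..k-1 form a chunk
            chunks.append(" ".join(word for word, _ in tagged[i:k]))
            i = k
        else:
            i += 1
    return chunks


tagged_sentence = [("Peter", "NNP"), ("read", "VBD"), ("a", "DT"), ("book", "NN")]
print(chunk_noun_phrases(tagged_sentence))
```

The chunker reports that "Peter" and "a book" are noun phrases without saying how their words combine, which is exactly the extra level above POS tags that a shallow parser provides.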