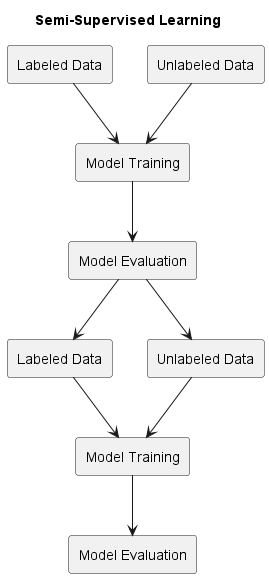

Semi-Supervised Learning

@fig:semi-supervised-learning

Semi-supervised learning falls between supervised learning and unsupervised learning. It has been shown that unlabeled data, when used in conjunction with a small amount of labeled data, can considerably improve learning accuracy. Labeled data is often generated by a skilled human agent who manually classifies training examples. The cost of the labeling process is sometimes prohibitive, whereas generating unlabeled data is relatively inexpensive. In such situations, semi-supervised learning can be a better approach.

The following semi-supervised learning methods are among the most commonly applied:

- Self-training

- Co-training

Self-Training

Self-Training (also known as Bootstrapping) is probably the oldest semi-supervised learning method. The idea behind Self-Training is simple: train a classifier on a seed set of labeled data, use it to label a set of unlabeled data, and add part of the newly labeled data to the labeled set for retraining. The process may be continued until a stopping condition is reached.

procedure \(SelfTrain(L_0,U)\)

1. \(L_0\) is seed labeled data, \(L\) is labeled data

2. \(U\) is unlabeled data

3. \(classifier \leftarrow train(L_0)\)

4. \(L \leftarrow L_0 + select(label(U,classifier))\)

5. \(classifier \leftarrow train(L)\)

6. if no stopping criterion is met, go to step 4

7. return \(classifier\)

The above procedure shows a basic form of Self-Training. The \(train\) function is a supervised classifier called the base learner. It is assumed that the base learner produces confidence-weighted predictions. The \(select\) function selects the instances whose predicted labels have the highest confidence.

One simple stopping criterion is to repeat steps 4-6 for a fixed, arbitrary number of rounds. Another is to keep running until convergence, i.e. until the labeled data and the classifier stop changing.
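The procedure can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: the nearest-centroid base learner on 1-D points, the margin-based confidence score, the `threshold` of 0.45, and the toy data are all assumptions made for the example. Both stopping criteria described above appear in `self_train`.

```python
from statistics import mean

def train(labeled):
    """Base learner (assumed): nearest-centroid classifier, one centroid per class."""
    by_class = {}
    for x, y in labeled:
        by_class.setdefault(y, []).append(x)
    return {y: mean(xs) for y, xs in by_class.items()}

def label(unlabeled, classifier):
    """Predict a label and a confidence in [0, 1] for every unlabeled point."""
    predictions = []
    for x in unlabeled:
        # Distances to the two closest centroids (assumes at least two classes).
        (d1, y1), (d2, _) = sorted((abs(x - c), y) for y, c in classifier.items())[:2]
        confidence = (d2 - d1) / (d1 + d2) if d1 + d2 else 0.0
        predictions.append((x, y1, confidence))
    return predictions

def select(predictions, threshold=0.45):
    """Keep only the predictions the classifier is confident about."""
    return [(x, y) for x, y, confidence in predictions if confidence >= threshold]

def self_train(seed, unlabeled, max_rounds=10):
    classifier = train(seed)                                   # step 3
    labeled = list(seed)
    for _ in range(max_rounds):                                # fixed-round criterion
        candidate = list(seed) + select(label(unlabeled, classifier))  # step 4
        if sorted(candidate) == sorted(labeled):               # convergence criterion
            break
        labeled = candidate
        classifier = train(labeled)                            # step 5
    return classifier

# Toy data: two seed points and seven unlabeled points on a line.
seed = [(0.0, 0), (10.0, 1)]
unlabeled = [1.0, 2.0, 3.0, 7.0, 8.0, 9.0, 5.0]
print(self_train(seed, unlabeled))
```

In this toy run the points at 3.0 and 7.0 are not confident enough in the first round and are only added after the centroids have moved, while the ambiguous point at 5.0 is never added.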

Co-Training

Co-training is a semi-supervised method that requires two views of the data. In other words, it assumes that each instance is described using two different feature sets. The two feature sets should provide different but complementary information about the instances.

procedure \(CoTrain(L,U)\)

1. \(L\) is labeled data

2. \(U\) is unlabeled data

3. \(P \leftarrow\) random selection from \(U\)

4. \(f_1 \leftarrow train(view_1(L))\)

5. \(f_2 \leftarrow train(view_2(L))\)

6. \(L \leftarrow L + select(label(P,f_1)) + select(label(P,f_2))\)

7. Remove the labeled instances from \(P\)

8. \(P \leftarrow P\) + random selection from \(U\)

9. if no stopping criterion is met, go to step 4

The above procedure shows a basic form of Co-Training. As in Self-Training, the \(train\) function is a supervised classifier that is assumed to produce confidence-weighted predictions, and the \(select\) function selects the instances whose predicted labels have the highest confidence. The stopping criteria discussed for Self-Training apply to Co-Training without modification.

The SelfTrain and CoTrain procedures above show strong similarities between Co-Training and Self-Training. If the classifier pair \((f_1, f_2)\) is considered a single classifier with an internal structure, then Co-Training can be seen as a special case of Self-Training.
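A minimal Python sketch of the CoTrain procedure follows. It rests on several assumptions that are not part of the procedure itself: each instance is a pair `(view_1_value, view_2_value)` of numbers, the base learner is a nearest-centroid classifier, confidence is a distance margin, and when both views are confident about the same instance the first view's label is kept. The names `pool_size`, `threshold`, and `train_views` are hypothetical.

```python
import random
from statistics import mean

def train(points):
    """Nearest-centroid base learner on 1-D points [(value, label), ...]."""
    classes = {y for _, y in points}
    return {c: mean(v for v, y in points if y == c) for c in classes}

def predict(model, value):
    """Return (label, confidence); confidence is a margin in [0, 1]."""
    (d1, y1), (d2, _) = sorted((abs(value - c), y) for y, c in model.items())[:2]
    confidence = (d2 - d1) / (d1 + d2) if d1 + d2 else 0.0
    return y1, confidence

def train_views(labeled):
    """Train one classifier per view of the labeled data."""
    f1 = train([(x[0], y) for x, y in labeled])
    f2 = train([(x[1], y) for x, y in labeled])
    return f1, f2

def co_train(labeled, unlabeled, pool_size=4, max_rounds=10, threshold=0.5, seed=0):
    rng = random.Random(seed)
    labeled, unlabeled = list(labeled), list(unlabeled)
    rng.shuffle(unlabeled)
    pool = [unlabeled.pop() for _ in range(min(pool_size, len(unlabeled)))]  # step 3
    for _ in range(max_rounds):
        f1, f2 = train_views(labeled)                    # steps 4 and 5
        newly = {}
        for x in pool:                                   # step 6
            for model, value in ((f1, x[0]), (f2, x[1])):
                y, confidence = predict(model, value)
                if confidence >= threshold:
                    newly.setdefault(x, y)               # first confident view wins
        if not newly:                                    # nothing changed: converged
            break
        labeled += newly.items()
        pool = [x for x in pool if x not in newly]       # step 7
        while unlabeled and len(pool) < pool_size:       # step 8
            pool.append(unlabeled.pop())
    return train_views(labeled)
```

The point of the two views shows up when an instance is ambiguous in one view but clear in the other: for example, `(4.5, 5.0)` sits near the decision boundary of a first view with centroids at 0 and 10, but close to the class-0 centroid of a second view with centroids at 0 and 100, so the second classifier supplies the label.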

Bootstrapping

Bootstrapping is a semi-supervised machine learning technique that combines the strengths of both supervised and unsupervised learning methods. In bootstrapping, a small amount of labeled data is used to generate predictions for the remaining unlabeled data. These predictions are then used to add additional labeled data to the original set, and the process is repeated until the desired accuracy is achieved or a stopping criterion is met.

The mathematical formulation of bootstrapping can be described as follows:

Given a set of \(N\) instances \((x_1, y_1), ..., (x_N, y_N)\), where \(x_i\) is a feature vector and \(y_i\) is the corresponding label, the goal of bootstrapping is to predict the labels for the remaining unlabeled instances \(x_j, j = 1, ..., M\).

1. Initialization: Select a small number of instances \((k \ll N)\) at random and use these instances as the labeled set. Train a classifier using these instances.

2. Prediction: Use the trained classifier to predict the labels for the remaining instances.

3. Update: Select the instances whose predictions the classifier is most confident about and add them, with their predicted labels, to the labeled set.

4. Repeat: Retrain the classifier on the enlarged labeled set and repeat steps 2 and 3 until the desired accuracy is achieved or a stopping criterion is met.

In essence, bootstrapping iteratively refines the labeled set, gradually increasing the size of the labeled set and the accuracy of the classifier until a satisfactory level of performance is achieved. This semi-supervised approach can be particularly useful when labeled data is scarce, as it allows the classifier to leverage the information contained in the unlabeled data.

Tri-training

Tri-training is a semi-supervised machine learning approach that leverages unlabeled data to improve the accuracy of classification models. Tri-training works by training three classifiers, each of which is trained on a different subset of the data. The outputs of these three classifiers are then combined to form a prediction for each data point.

Mathematically, Tri-training works by first randomly splitting the dataset into three distinct datasets, \(A\), \(B\) and \(C\). A classifier is then trained on each of these datasets. These classifiers are denoted by \(C_A\), \(C_B\), and \(C_C\), respectively. Once the training is complete, the outputs of each classifier are combined to form a prediction for each data point. This is done by assigning a label to each data point based on the majority vote of the three classifiers (i.e. the label with the highest frequency of occurrence among the three classifiers is chosen).

Finally, the labeled data generated in this manner is used to retrain each of the three classifiers. This process is repeated iteratively until the accuracy of the classifiers converges.

Formally, Tri-training can be expressed as follows:

Let \(S\) be the dataset of input data points, each with a corresponding label \(y\).

Randomly split \(S\) into three datasets \(A\), \(B\), and \(C\).

- Train a classifier \(C_A\) on dataset \(A\).

- Train a classifier \(C_B\) on dataset \(B\).

- Train a classifier \(C_C\) on dataset \(C\).

For each data point in \(S\), obtain the predictions from \(C_A\), \(C_B\), and \(C_C\).

Assign a label to each data point based on the majority vote of the predictions of \(C_A\), \(C_B\), and \(C_C\).

Retrain each of the three classifiers with the labeled data.

Repeat the process until the accuracy of the classifiers converges.
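The loop described above can be sketched as follows. This is an illustration under stated assumptions, not a definitive implementation: the base learner is a nearest-centroid classifier on 1-D points, each classifier is retrained on its own subset plus the majority-voted points, and "the votes stop changing" stands in for convergence of accuracy, which would need held-out labels to measure. The function names are hypothetical.

```python
from collections import Counter
from statistics import mean

def train(points):
    """Nearest-centroid base learner on 1-D points [(value, label), ...]."""
    classes = {y for _, y in points}
    return {c: mean(v for v, y in points if y == c) for c in classes}

def predict(model, value):
    """Label of the closest class centroid."""
    return min(model, key=lambda c: abs(value - model[c]))

def majority_vote(models, value):
    """Most frequent label among the classifiers' predictions."""
    votes = Counter(predict(m, value) for m in models)
    return votes.most_common(1)[0][0]

def tri_train(subset_a, subset_b, subset_c, points, max_rounds=10):
    subsets = [subset_a, subset_b, subset_c]
    models = [train(s) for s in subsets]               # C_A, C_B, C_C
    labels = None
    for _ in range(max_rounds):
        new_labels = [majority_vote(models, x) for x in points]
        if new_labels == labels:                       # votes stopped changing
            break
        labels = new_labels
        voted = list(zip(points, labels))
        # Retrain each classifier on its own subset plus the voted labels.
        models = [train(s + voted) for s in subsets]
    return models, labels

A = [(0.0, 0), (9.0, 1)]
B = [(1.0, 0), (10.0, 1)]
C = [(2.0, 0), (11.0, 1)]
models, labels = tri_train(A, B, C, [0.5, 3.0, 8.0, 10.5, 6.0])
print(labels)
```

With three classifiers and two classes a majority always exists. With more classes, all three may disagree, in which case `most_common` simply returns the first label it saw; a real implementation would need an explicit tie-breaking rule.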

Label Propagation

Label Propagation is a semi-supervised machine learning method that uses graph-based techniques to propagate labels from a small number of labeled instances to a larger set of unlabeled instances. The main idea behind Label Propagation is to construct a graph that represents the similarity between instances, and then use the labeled instances to guide the labeling of the unlabeled instances.

The mathematical formulation of Label Propagation can be described as follows:

Given a set of \(N\) instances \((x_1, y_1), ..., (x_N, y_N)\), where \(x_i\) is a feature vector and \(y_i\) is the corresponding label, the goal is to predict the labels for the remaining unlabeled instances \(x_j, j = 1, ..., M\).

1. Similarity Matrix: Construct a similarity matrix \(W\) that represents the similarity between instances. The elements \(W_{i,j}\) can be calculated using a distance metric such as Euclidean distance, cosine similarity, or another appropriate similarity measure.

2. Label Propagation: Initialize the labels for the unlabeled instances with a prior distribution, such as a uniform distribution. Then, iteratively update the labels for the unlabeled instances using the following update rule: \(y_j = (1 - \lambda)\, y_j + \lambda \sum_{i=1}^{N} W_{j,i}\, y_i\), where \(\lambda\) is a damping factor that controls the rate of convergence.

3. Repeat: Repeat step 2 until the labels converge or a stopping criterion is met.

In essence, Label Propagation uses graph-based techniques to propagate labels from the labeled instances to the unlabeled instances. This semi-supervised approach can be particularly useful when labeled data is scarce, as it allows the classifier to leverage the information contained in the unlabeled data. The similarity matrix \(W\) provides a measure of the relationships between instances, which can be used to guide the labeling process.
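The update rule can be tried out with a short Python sketch. Several choices here are assumptions made for illustration: similarities come from a Gaussian kernel on 1-D points, each row of \(W\) is normalised to sum to 1 so that every \(y_j\) stays a probability distribution, the sum runs over all instances, and labeled instances keep their labels fixed. The names `sigma`, `lam`, `tol`, and `known` are hypothetical.

```python
import math

def similarity_matrix(xs, sigma=1.0):
    """Row-normalised Gaussian similarities between 1-D points (zero diagonal)."""
    W = [[0.0 if i == j else math.exp(-((a - b) ** 2) / (2 * sigma ** 2))
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    return [[w / sum(row) for w in row] for row in W]

def propagate(xs, known, n_classes, lam=0.5, tol=1e-6, max_iter=1000):
    """known maps instance index -> class; returns one label distribution per point."""
    W = similarity_matrix(xs)
    n = len(xs)
    # Unlabeled instances start from a uniform prior.
    Y = [[1.0 / n_classes] * n_classes for _ in range(n)]
    for i, c in known.items():
        Y[i] = [1.0 if k == c else 0.0 for k in range(n_classes)]
    for _ in range(max_iter):
        new_Y = []
        for j in range(n):
            if j in known:                     # labeled instances are not updated
                new_Y.append(Y[j])
                continue
            neighbours = [sum(W[j][i] * Y[i][k] for i in range(n))
                          for k in range(n_classes)]
            # y_j = (1 - lam) * y_j + lam * sum_i W[j][i] * y_i
            new_Y.append([(1 - lam) * Y[j][k] + lam * neighbours[k]
                          for k in range(n_classes)])
        change = max(abs(a - b) for r1, r2 in zip(Y, new_Y) for a, b in zip(r1, r2))
        Y = new_Y
        if change < tol:                       # labels have converged
            break
    return Y

xs = [0.0, 1.0, 2.0, 5.0, 6.0, 7.0]
Y = propagate(xs, known={0: 0, 5: 1}, n_classes=2)
print([row.index(max(row)) for row in Y])
```

Only the first and last points are labeled in this example; the labels spread along the chain of nearby points, so each cluster ends up with the label of its one labeled member.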

MixMatch

MixMatch is a semi-supervised learning technique that aims to improve the accuracy of supervised learning models using a combination of labeled and unlabeled data. The basic idea behind MixMatch is to generate augmented versions of the labeled and unlabeled data samples, and then match them to produce a more diverse and informative training set.

The following is an overview of the MixMatch algorithm:

- Data Augmentation: MixMatch begins by applying data augmentation techniques to both labeled and unlabeled data samples to create a set of augmented samples.

- Pseudo-Labeling: Next, the algorithm uses the labeled samples to train a supervised model. This model is then used to predict the labels for the unlabeled samples. These predicted labels are known as pseudo-labels.

- Mix-Up: The labeled and unlabeled samples are mixed together in a process called Mix-Up. In Mix-Up, pairs of samples are randomly selected from both labeled and unlabeled samples, and the features and labels of these pairs are linearly combined to create new samples. This process encourages the model to learn from the unlabeled data while preventing overfitting to the labeled data.

- Consistency Regularization: To further encourage the model to learn from the unlabeled data, MixMatch applies consistency regularization. This involves computing the loss for the original and augmented versions of each unlabeled sample and encouraging the model to produce similar predictions for both versions. This helps to reduce the noise in the pseudo-labels and improves the accuracy of the model.

- Final Training: Finally, the model is trained using the augmented samples generated through Mix-Up and consistency regularization. This process helps to improve the generalization of the model, and the resulting model can be used for classification tasks.

In essence, MixMatch combines several techniques to leverage the power of both labeled and unlabeled data, allowing for improved accuracy in semi-supervised learning tasks.
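Two of the ingredients above, the linear combination used in Mix-Up and the consistency penalty, are simple enough to sketch directly. The code below is an illustration only: it assumes samples are `(features, label_distribution)` pairs of plain lists, draws the mixing weight from a Beta distribution with a hypothetical `alpha` of 0.75, keeps the larger of the weight and its complement so the result stays closer to the first sample (as MixMatch is commonly described), and uses a mean squared difference as the consistency loss. It does not include a model, augmentation, or a training loop.

```python
import random

def mix_up(sample_a, sample_b, alpha=0.75, rng=random):
    """Linearly combine two (features, label_distribution) pairs."""
    lam = rng.betavariate(alpha, alpha)
    lam = max(lam, 1.0 - lam)          # keep the mix closer to sample_a
    (xa, ya), (xb, yb) = sample_a, sample_b
    x = [lam * a + (1 - lam) * b for a, b in zip(xa, xb)]
    y = [lam * a + (1 - lam) * b for a, b in zip(ya, yb)]
    return x, y

def consistency_loss(pred_original, pred_augmented):
    """Mean squared difference between two predicted label distributions."""
    diffs = [(p - q) ** 2 for p, q in zip(pred_original, pred_augmented)]
    return sum(diffs) / len(diffs)

rng = random.Random(0)
labeled_sample = ([1.0, 0.0], [1.0, 0.0])      # features, one-hot label
pseudo_labeled = ([0.0, 1.0], [0.3, 0.7])      # features, pseudo-label
print(mix_up(labeled_sample, pseudo_labeled, rng=rng))
print(consistency_loss([0.9, 0.1], [0.6, 0.4]))
```

Because the same weight is applied to features and labels, the mixed label remains a valid probability distribution, and the consistency loss is zero exactly when the model predicts the same distribution for the original and the augmented sample.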