Basic NLP Techniques

Modern Natural Language Processing (NLP) algorithms are designed to handle a variety of tasks when processing natural-language text. The initial phase often involves segmenting the text into individual sentences and then breaking those sentences down into separate words. This segmentation is comparatively straightforward in languages like English, German, or French, where words are separated by spaces and sentence boundaries are usually marked by punctuation.

However, as the text proceeds through the processing pipeline, more intricate tasks become essential. A notable example is part-of-speech tagging, which entails assigning tags, such as Noun, Pronoun, or Verb, to each of the individual terms. This process involves associating the appropriate grammatical categories with the words, enabling the algorithm to understand the syntactic structure of the text.

Given the complexity of natural language, it is not surprising that a large variety of NLP approaches is available. Most NLP problems can nevertheless be tackled with a systematic workflow, known as an NLP pipeline, that consists of a sequence of processing steps.

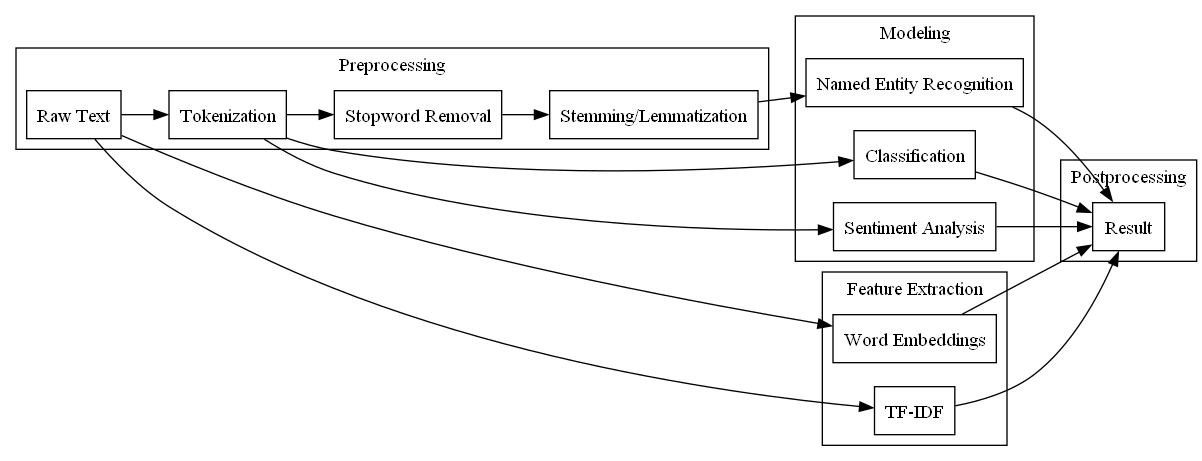

The figure above (natural-language-processing-pipeline) shows a simplified example of an NLP pipeline. It illustrates the stages that text data passes through (preprocessing, feature extraction, modeling, and postprocessing) in order to perform different NLP tasks. Here is an explanation of each component in the diagram:

Preprocessing:

- Raw Text: This is the input text data, which is the starting point of the NLP pipeline.

- Tokenization: The raw text is split into individual words or tokens, making it easier for further analysis.

- Stopword Removal: Common words that don't carry significant meaning (such as "the," "and," "is") are removed from the tokenized text.

- Stemming/Lemmatization: Words are reduced to their base or root form, helping to normalize variations of words (e.g., "running" to "run").

Feature Extraction:

- Word Embeddings: The tokenized text is transformed into numerical vectors using word embedding techniques, capturing semantic relationships between words.

- TF-IDF: Term Frequency-Inverse Document Frequency is calculated to represent the importance of words in the context of a document collection.

Modeling:

- Classification: Tokenized and preprocessed text is used to train and apply classification models that assign labels or categories to text (e.g., classifying emails as spam or not spam).

- Sentiment Analysis: Analyzing the sentiment expressed in text to determine whether it's positive, negative, or neutral.

- Named Entity Recognition: Identifying and classifying named entities (such as names, locations, dates) within the text.

Postprocessing:

- Result: The outcomes of various NLP tasks are obtained, such as classification labels, sentiment scores, and identified named entities.

The arrows between components indicate the flow of data and the sequence of processing. For instance:

- Raw text is first tokenized, then goes through stopword removal and stemming/lemmatization.

- Tokenized text can be used for both classification and sentiment analysis tasks.

- The stemmed/lemmatized text is used for named entity recognition.

- The raw text is also used for word embeddings and TF-IDF calculations.

- The results from the modeling stage contribute to the final results.

Keep in mind that real-world NLP pipelines can be more complex and involve additional steps, especially in advanced applications. This diagram provides a high-level overview of the stages involved in processing text data and extracting valuable information using NLP techniques.
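To make the idea of a pipeline as a chain of processing steps concrete, here is a minimal Python sketch that composes three preprocessing steps from the diagram. The tiny stop word list and the one-rule "stemmer" are hypothetical simplifications chosen for illustration, not the behavior of any particular library.

```python
import re
from functools import reduce

# Hypothetical, deliberately tiny stop word list (real lists are much longer)
STOP_WORDS = {"the", "a", "an", "is", "and", "in", "to"}


def tokenize(text: str) -> list[str]:
    # Lowercase and keep runs of word characters; punctuation is dropped
    return re.findall(r"\w+", text.lower())


def remove_stop_words(tokens: list[str]) -> list[str]:
    return [t for t in tokens if t not in STOP_WORDS]


def strip_ing(tokens: list[str]) -> list[str]:
    # Toy "stemmer" with a single rule: drop a trailing "-ing" from longer words
    return [t[:-3] if t.endswith("ing") and len(t) > 5 else t for t in tokens]


# Each step receives the previous step's output as its input
PIPELINE = [tokenize, remove_stop_words, strip_ing]


def run_pipeline(text, steps=PIPELINE):
    return reduce(lambda data, step: step(data), steps, text)


print(run_pipeline("The fox is jumping over the lazy dog."))
# ['fox', 'jump', 'over', 'lazy', 'dog']
```

Because each step depends only on the output of the previous one, steps can be added, removed, or reordered by editing the list.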

Several basic NLP techniques are commonly used for pre- and postprocessing in NLP pipelines, which are usually chains of data-processing components. This section reviews some of these techniques.

Tokenization

Tokenization is a fundamental preprocessing step in NLP that involves breaking down a text or a sequence of characters into smaller units, typically words or subword units, referred to as "tokens." These tokens serve as the basic building blocks for subsequent NLP tasks, such as text analysis, sentiment analysis, machine translation, and more. Tokenization is crucial because it converts unstructured text data into a structured format that can be easily processed by algorithms.

Here's how tokenization works:

- Input Text: Tokenization begins with an input text, which can be a sentence, paragraph, or an entire document. For example, consider the sentence: "I love natural language processing."

- Tokenization Process: The text is then divided into tokens based on specific rules or patterns. In English, these rules often involve splitting text at spaces and punctuation marks while preserving meaningful units. Applying tokenization to our example sentence would result in the following tokens:

  - "I"

  - "love"

  - "natural"

  - "language"

  - "processing"

- Token List: The output of tokenization is typically a list or an array of tokens. In this case, the list of tokens would be: ["I", "love", "natural", "language", "processing"]. Here the final period is discarded; many tokenizers instead keep punctuation marks as separate tokens.
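The splitting rule described above can be sketched with a single regular expression in Python. This is a simplified illustration, not the tokenizer of any specific library; the `keep_punctuation` switch is a hypothetical parameter added to show both conventions.

```python
import re


def tokenize(text: str, keep_punctuation: bool = False) -> list[str]:
    # \w+ matches a run of letters, digits, or underscores;
    # [^\w\s] matches a single character that is neither a word character nor whitespace
    tokens = re.findall(r"\w+|[^\w\s]", text)
    if not keep_punctuation:
        tokens = [t for t in tokens if not re.fullmatch(r"[^\w\s]", t)]
    return tokens


sentence = "I love natural language processing."
print(tokenize(sentence))
# ['I', 'love', 'natural', 'language', 'processing']
print(tokenize(sentence, keep_punctuation=True))
# ['I', 'love', 'natural', 'language', 'processing', '.']
```

A rule this simple splits contractions such as "don't" into pieces, which is one reason production tokenizers use more elaborate rules.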

Tokenization can be more complex in morphologically rich languages, such as agglutinative Finnish or German with its long compound words. In these languages, a single word can consist of multiple meaningful units (morphemes), and tokenizers often need to handle such cases, for example by splitting words into subword units, to provide meaningful tokens.

Tokenization is a critical step because it allows NLP models to work with text in a way that captures the semantic and syntactic structures of language. For instance, in text classification, each token can be treated as a feature, making it possible to analyze the presence or absence of specific words or phrases. Tokenization also enables the creation of word embeddings, which are dense vector representations of words, used in various NLP tasks.

Tokenization in NLP is the process of splitting text into individual units (tokens) that serve as the basis for further analysis. It helps convert unstructured text into structured data, making it accessible for various computational tasks.

Stop Word Removal

Stop word removal is a common preprocessing step in Natural Language Processing (NLP) that involves the elimination of words that are considered to be of little or no value in text analysis. These words, known as "stop words," are typically very common words in a language, such as articles (e.g., "the," "an," "a"), prepositions (e.g., "in," "on," "at"), conjunctions (e.g., "and," "but," "or"), and pronouns (e.g., "he," "she," "it").

The primary purpose of removing stop words is to reduce the dimensionality of text data and improve the efficiency and effectiveness of downstream NLP tasks. Stop words often occur frequently in text but carry minimal semantic meaning. By removing them, the remaining words become more meaningful, and the computational resources required for processing and storage are reduced.

Here's how stop word removal works:

- Input Text: Start with a piece of text that you want to process, such as a sentence, document, or a collection of text.

- Stop Word List: Utilize a predefined list of stop words for the language you are working with. Common libraries and NLP tools provide these lists. For English, this list typically includes words like "the," "and," "is," "in," "to," etc.

- Stop Word Removal: Compare each word in the input text to the stop word list. If a word matches any of the stop words, it is removed from the text. Words that do not match stop words are retained. The result is a text with stop words removed.

Example

Input Text: "The quick brown fox jumps over the lazy dog."

Stop Word List (for English): ["the", "a", "an", "in", "on", "to", "over", "and"]

After Stop Word Removal: quick brown fox jumps lazy dog.

In this example, the stop words "the" (both occurrences; the comparison is case-insensitive, so the capitalized "The" is removed as well) and "over" have been removed from the input text. The remaining words are considered more meaningful in terms of conveying the essence of the text.
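The example can be reproduced with a short Python sketch. The stop word list is the one given above; the regular-expression tokenization is an assumption made to keep the sketch self-contained.

```python
import re

# The stop word list from the example
STOP_WORDS = {"the", "a", "an", "in", "on", "to", "over", "and"}


def remove_stop_words(tokens: list[str], stop_words: set[str] = STOP_WORDS) -> list[str]:
    # Compare in lowercase so that "The" matches the list entry "the"
    return [t for t in tokens if t.lower() not in stop_words]


tokens = re.findall(r"\w+", "The quick brown fox jumps over the lazy dog.")
print(remove_stop_words(tokens))
# ['quick', 'brown', 'fox', 'jumps', 'lazy', 'dog']
```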

Stop word removal is especially useful in tasks like text classification, sentiment analysis, and information retrieval, where the focus is on identifying important terms or features in the text. However, it's essential to note that the list of stop words may vary depending on the specific NLP task and domain, and some applications may choose to retain certain stop words if they are relevant to the analysis.

Stemming

Stemming is a text normalization technique in Natural Language Processing (NLP) that aims to reduce words to their base or root form, known as the "stem." The primary goal of stemming is to simplify word variations and capture the core meaning of words, which can improve text analysis and information retrieval in various NLP applications.

In English and many other languages, words take different forms through inflection (for example, tense and number) and derivation, usually by adding prefixes or suffixes. For instance, "jumps," "jumped," and "jumping" are all forms of the word "jump." Stemming algorithms remove these affixes, in practice mostly suffixes, to map such variations to a common stem. Stemming does not always produce valid words; its focus is on reducing related words to a common form.

Here's how stemming works:

- Input Word: Start with an input word, which can be any word from a text document or sentence.

- Stemming Algorithm: Apply a stemming algorithm, typically a rule-based process that identifies and removes common affixes (mostly suffixes) from the word. These rules are designed to map variations of a word to a common stem.

- Stemmed Word: The result of the stemming process is the stemmed or normalized word, which represents the core meaning of the original word.

Example:

Input Word: "jumping"

After Stemming: "jump"

In this example, the word "jumping" has been stemmed to its base form "jump" by removing the "-ing" suffix.

It's important to note that stemming algorithms may not always produce perfect stems, and the resulting stems may not be valid words. However, stemming is effective in reducing word variations, which can be valuable in information retrieval tasks, search engines, and text analysis where the focus is on identifying common themes and keywords.

There are various stemming algorithms available, with the Porter stemming algorithm being one of the most well-known for the English language. Other languages may have their specific stemming algorithms tailored to their linguistic rules and structures.
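The suffix-stripping idea can be sketched in a few lines of Python. This is a deliberately naive stemmer written for illustration, not the Porter algorithm: its suffix list and the minimum stem length of three letters are assumptions chosen for the example.

```python
# Suffixes to try, in order; the first matching rule wins
SUFFIXES = ("ing", "ed", "es", "s")
MIN_STEM_LENGTH = 3  # never cut a word down to fewer than 3 letters


def naive_stem(word: str) -> str:
    w = word.lower()
    for suffix in SUFFIXES:
        if w.endswith(suffix) and len(w) - len(suffix) >= MIN_STEM_LENGTH:
            return w[: -len(suffix)]
    return w


for word in ["jumping", "jumped", "jumps", "studies", "running"]:
    print(word, "->", naive_stem(word))
# jumping -> jump
# jumped -> jump
# jumps -> jump
# studies -> studi
# running -> runn
```

The last two outputs show the weaknesses discussed above: "studi" is not a valid word, and "runn" keeps a doubled consonant that the Porter algorithm would remove to yield "run."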

Stemming is particularly useful in cases where word variations can lead to increased data sparsity and complexity. However, it's not always appropriate for all NLP tasks, as it can sometimes oversimplify words and lose context. In some cases, lemmatization, which aims to reduce words to their base or dictionary form (lemma), may be a more suitable text normalization technique.

Lemmatization

Lemmatization is a text normalization technique in Natural Language Processing (NLP) that focuses on reducing words to their base or dictionary form, known as the "lemma." Unlike stemming, which uses heuristic rules to cut off prefixes or suffixes from words, lemmatization employs a more sophisticated approach that considers a word's meaning and context to transform it to its most basic form. The primary goal of lemmatization is to ensure that the resulting word, or lemma, is a valid word found in the language's lexicon.

Here's how lemmatization works:

- Input Word: Start with an input word, which can be any word from a text document or sentence.

- Lemmatization Algorithm: Apply a lemmatization algorithm or dictionary-based process that identifies the word's lemma based on linguistic rules and a vocabulary database (lexicon). These algorithms consider part-of-speech (POS) tags to determine the word's grammatical category (e.g., noun, verb, adjective) and then map the word to its corresponding lemma.

- Lemmatized Word: The result of the lemmatization process is the lemmatized word, which represents the canonical or dictionary form of the original word.

Example:

Input Word: "running" (verb)

After Lemmatization: "run" (verb)

In this example, the word "running" has been lemmatized to its base form "run" as a verb.

Lemmatization is more precise and context-aware compared to stemming, as it ensures that the resulting words are valid and maintain their grammatical and semantic integrity. This makes lemmatization particularly useful in tasks where word meanings and context are crucial, such as information retrieval, question answering, and sentiment analysis.

Furthermore, lemmatization provides a clearer picture of word relationships and can be helpful for tasks like language understanding and machine translation, where preserving the original word's meaning is essential.

Lemmatization algorithms often rely on linguistic databases or resources to map words to their lemmas accurately. Libraries and tools for NLP provide lemmatization capabilities; NLTK (Natural Language Toolkit) in Python, for example, includes a lemmatizer based on the English WordNet lexical database.
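The role of the part-of-speech tag can be illustrated with a lookup-based Python sketch. The lexicon below is a hypothetical handful of entries, and the simplified tag names (VERB, NOUN, ADJ) are an assumption for this example; a real lemmatizer draws on a full lexical database.

```python
# Hypothetical miniature lexicon mapping (word, part of speech) to a lemma
LEXICON = {
    ("running", "VERB"): "run",
    ("ran", "VERB"): "run",
    ("mice", "NOUN"): "mouse",
    ("better", "ADJ"): "good",
    ("meeting", "VERB"): "meet",
    ("meeting", "NOUN"): "meeting",
}


def lemmatize(word: str, pos: str) -> str:
    # The POS tag selects the matching entry; unknown words are returned lowercased
    return LEXICON.get((word.lower(), pos), word.lower())


print(lemmatize("running", "VERB"))  # run
print(lemmatize("meeting", "VERB"))  # meet
print(lemmatize("meeting", "NOUN"))  # meeting
print(lemmatize("better", "ADJ"))    # good
```

The two "meeting" lookups show why the grammatical category matters: the same surface form has different lemmas as a verb and as a noun, something a suffix-stripping stemmer cannot distinguish.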

Spell Checking

Spell checking is a crucial component of Natural Language Processing (NLP) that focuses on identifying and correcting misspelled words in text. The primary objective of spell checking is to improve the accuracy and readability of text, ensuring that words are correctly spelled and, when possible, suggesting valid replacements for misspelled words.

Here's how spell checking works:

- Input Text: Begin with a piece of text that may contain one or more misspelled words. These misspellings can occur due to typographical errors, unintentional keystrokes, or a lack of familiarity with the correct spelling.

- Dictionary or Lexicon: Spell checking relies on a dictionary or lexicon that contains a comprehensive list of correctly spelled words in the language being used. This lexicon serves as a reference for determining whether a word in the input text is spelled correctly.

- Word Comparison: Each word in the input text is compared to the words in the dictionary. If a word from the input text is not found in the dictionary, it is flagged as a potential misspelling.

- Correction Suggestions: For each misspelled word, the spell checker may provide a list of suggested corrections based on the similarity between the misspelled word and words in the dictionary. This similarity can be measured using techniques like edit distance or phonetic similarity.

- User Intervention: In some cases, the spell checker may require user intervention to select the correct replacement word from the list of suggestions. Users can review and choose the most appropriate correction.

Example:

Input Text: "I have an appple."

In this example, "appple" is a misspelled word. The spell checker identifies it as a potential misspelling and suggests a correction:

Suggested Correction: "I have an apple."
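The dictionary lookup and edit-distance ranking described above can be sketched in Python as follows. The distance function is the standard Levenshtein distance; the tiny dictionary, the cutoff of two edits, and the alphabetical tie-breaking are assumptions made for illustration.

```python
def edit_distance(a: str, b: str) -> int:
    # Levenshtein distance: minimum number of insertions, deletions, and
    # substitutions needed to turn a into b (dynamic programming, row by row)
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,           # deletion
                               current[j - 1] + 1,        # insertion
                               previous[j - 1] + cost))   # substitution or match
        previous = current
    return previous[-1]


# Hypothetical mini dictionary for the example
DICTIONARY = {"i", "have", "an", "apple", "ample", "apply"}


def suggest(word: str, dictionary=DICTIONARY, max_distance: int = 2) -> list[str]:
    word = word.lower()
    if word in dictionary:
        return [word]  # correctly spelled, nothing to fix
    candidates = [(edit_distance(word, w), w) for w in dictionary]
    # Keep close candidates, nearest first (ties broken alphabetically)
    return [w for d, w in sorted(candidates) if d <= max_distance]


print(suggest("appple"))  # ['apple', 'ample', 'apply']
```

"apple" ranks first because a single deletion turns "appple" into it, while "ample" and "apply" each need two edits.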

Spell checkers can be implemented as standalone applications, integrated into word processors, or used as part of larger NLP pipelines. They are essential for ensuring the correctness of text in various applications, including document editing, web forms, email clients, and more.

Advanced spell checkers also take context into account. For instance, they may consider adjacent words or grammatical structures to provide more accurate suggestions. Additionally, spell checkers often support multiple languages and can be customized to include domain-specific terms and jargon.

Spell checking plays a vital role in enhancing the quality and professionalism of written communication by identifying and rectifying spelling errors, thereby improving the overall user experience and text reliability.

Sentence Recognition

Sentence recognition, also known as sentence boundary detection or sentence segmentation, is a fundamental task in Natural Language Processing (NLP) that involves identifying and splitting a continuous text into individual sentences. The goal is to determine the boundaries that separate one sentence from another within a given text or document.

Sentence recognition is important because it enables downstream NLP tasks to operate on individual sentences, making it easier to analyze and process textual data. Without accurate sentence recognition, it would be challenging to perform tasks like text summarization, sentiment analysis, machine translation, or named entity recognition effectively.

Here's how sentence recognition works:

- Input Text: Begin with a block of text or a document that may contain one or more sentences. Sentences are typically separated by punctuation marks such as periods, exclamation marks, and question marks.

- Boundary Detection: The sentence recognition algorithm scans through the input text and identifies potential sentence boundaries based on punctuation marks. It also considers context to avoid splitting text inappropriately.

- Segmentation: Once potential sentence boundaries are detected, the algorithm segments the text into individual sentences, creating a list of sentences as output. Each sentence is considered a distinct unit of text.

Example:

Input Text: "Natural language processing (NLP) is a fascinating field. It involves the development of algorithms for understanding and generating human language. NLP has applications in machine translation, sentiment analysis, and chatbots."

In this example, sentence recognition would identify the sentence boundaries as follows:

- "Natural language processing (NLP) is a fascinating field."

- "It involves the development of algorithms for understanding and generating human language."

- "NLP has applications in machine translation, sentiment analysis, and chatbots."

Sentence recognition algorithms need to account for various linguistic complexities, such as abbreviations, acronyms, numbers, and punctuation marks within sentences. They also consider context, ensuring that abbreviations like "Mr." and "Dr." do not trigger false sentence boundaries.
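A rule-based splitter following this description might look like the Python sketch below. It treats ".", "!", or "?" followed by whitespace or the end of the text as a candidate boundary and skips candidates that end a known abbreviation. The abbreviation list is a hypothetical sample, and the rule is a simplification: a sentence that genuinely ends with "etc." would not be split.

```python
import re

# Hypothetical sample of abbreviations that should not end a sentence
ABBREVIATIONS = {"mr.", "mrs.", "dr.", "prof.", "e.g.", "i.e.", "etc."}

# Candidate boundary: one or more of . ! ? followed by whitespace or end of text
BOUNDARY = re.compile(r"[.!?]+(?=\s|$)")


def split_sentences(text: str) -> list[str]:
    sentences = []
    start = 0
    for match in BOUNDARY.finditer(text):
        end = match.end()
        # The whitespace-delimited word that ends at this punctuation mark
        last_word = text[start:end].split()[-1].lower()
        if last_word in ABBREVIATIONS:
            continue  # e.g. "Dr." does not end a sentence
        sentences.append(text[start:end].strip())
        start = end
    remainder = text[start:].strip()
    if remainder:
        sentences.append(remainder)  # trailing text without final punctuation
    return sentences


print(split_sentences("Dr. Smith arrived. He sat down."))
# ['Dr. Smith arrived.', 'He sat down.']
```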

Sentence recognition is typically one of the initial preprocessing steps in many NLP pipelines. It simplifies subsequent text analysis tasks by breaking down text into manageable units, allowing NLP models to focus on individual sentences and extract meaningful information or insights from them.

Part-of-Speech Tagging

In part-of-speech tagging (POS tagging), each word in a text is assigned a part of speech by considering, on the one hand, the word's possible categories as listed in a lexicon and, on the other hand, the words immediately surrounding it (its context). Several tag sets are available for this kind of annotation; each label in a tag set is called a tag. Depending on the language and on the level of detail the description requires, different classifications can be used. The main categories include noun, verb, adjective, adverb, and preposition, and these are further subdivided; verbs, for example, can be split into forms such as imperative, infinitive, or participle. The most widely used tag set for English is the Penn Treebank tag set with 36 part-of-speech tags (punctuation marks receive separate tags not shown here), summarized in the following table (Liberman, 2003), (sketchengine, 2021).

| Tag | Description | Example |
|---|---|---|
| CC | Coordinating conjunction | and, but, or |
| CD | Cardinal number | one, two, three |
| DT | Determiner | a, the |
| EX | Existential there | there |
| FW | Foreign word | mea culpa |
| IN | Preposition, subordinating conjunction | of, in, by |
| JJ | Adjective | yellow |
| JJR | Adjective, comparative | bigger |
| JJS | Adjective, superlative | wildest |
| LS | List item marker | 1, 2, One |
| MD | Modal | can, should |
| NN | Noun, singular or mass | house |
| NNS | Noun, plural | houses |
| NNP | Proper noun, singular | IBM |
| NNPS | Proper noun, plural | Carolinas |
| PDT | Predeterminer | all, both |
| POS | Possessive ending | 's |
| PRP | Personal pronoun | I, you, he |
| PRP$ | Possessive pronoun | your, one's |
| RB | Adverb | quickly, never |
| RBR | Adverb, comparative | faster |
| RBS | Adverb, superlative | fastest |
| RP | Particle | up, off |
| SYM | Symbol | +, %, & |
| TO | to | to |
| UH | Interjection | ah, oops |
| VB | Verb, base form | eat |
| VBD | Verb, past tense | ate |
| VBG | Verb, gerund or present participle | eating |
| VBN | Verb, past participle | eaten |
| VBP | Verb, non-3rd person singular present | eat |
| VBZ | Verb, 3rd person singular present | eats |
| WDT | Wh-determiner | which, that |
| WP | Wh-pronoun | what, who |
| WP$ | Possessive wh-pronoun | whose |
| WRB | Wh-adverb | how, where |

Table: Penn Treebank Tag Set.

There are many different types of taggers, each with its own manner of operation, and many combine several methodologies.

Most taggers work in a few stages. First, the text is tokenized, that is, broken down into distinct tokens. Next, the possible tags for each token are looked up in a lexicon. Finally, where a token is ambiguous, the appropriate tag is chosen using rules or probability models together with the surrounding context, and each token is tagged accordingly. A statistical tagger obtains the occurrence probabilities of words and their associated POS tags from a previously annotated training corpus on which it was trained before use. It thus stores the information contained in the corpus in the form of rules, probabilities, and so on, and bases its tagging decisions on them.
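The statistical idea can be illustrated with its simplest variant, a most-frequent-tag (unigram) tagger, which assigns each word the tag it received most often in the training corpus and ignores context entirely. The four-sentence training corpus below is hypothetical, and tagging unknown words as NN is an assumed fallback.

```python
from collections import Counter, defaultdict

# Hypothetical hand-annotated training corpus using Penn Treebank tags
TRAINING_CORPUS = [
    [("The", "DT"), ("dog", "NN"), ("barks", "VBZ")],
    [("The", "DT"), ("dogs", "NNS"), ("bark", "VBP")],
    [("A", "DT"), ("bark", "NN"), ("is", "VBZ"), ("loud", "JJ")],
    [("They", "PRP"), ("bark", "VBP"), ("loudly", "RB")],
]


def train_unigram_tagger(corpus):
    # Count how often each (lowercased) word occurs with each tag
    counts = defaultdict(Counter)
    for sentence in corpus:
        for word, pos_tag in sentence:
            counts[word.lower()][pos_tag] += 1
    # Keep only the most frequent tag per word
    return {word: tag_counts.most_common(1)[0][0] for word, tag_counts in counts.items()}


def tag(tokens, model, default_tag="NN"):
    # Words never seen in training fall back to default_tag (an assumption)
    return [(token, model.get(token.lower(), default_tag)) for token in tokens]


model = train_unigram_tagger(TRAINING_CORPUS)
print(tag(["The", "dog", "barks"], model))
# [('The', 'DT'), ('dog', 'NN'), ('barks', 'VBZ')]
print(tag(["A", "bark"], model))
# [('A', 'DT'), ('bark', 'VBP')]
```

The second result is wrong: after the determiner "A," "bark" is a noun. Because "bark" was seen twice as VBP and once as NN, a tagger that ignores context always picks VBP, which is exactly why practical taggers also weigh the neighboring words.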

N-grams

N-grams are contiguous sequences of n items, typically words or characters, extracted from a text or speech. In Natural Language Processing (NLP), n-grams are used to represent and analyze the linguistic structure and patterns of text. The "n" in n-grams represents the number of items (words, characters, or tokens) in each sequence.

Here's how n-grams work:

- Tokenization: Begin with the process of tokenization, where the text is split into individual units, which can be words, characters, or subword units (subtokens).

- Creation of N-grams: N-grams are created by sliding a window of fixed size "n" over the tokenized text. At each position of the window, a new n-gram is formed by capturing the next "n" items in the sequence.

- Analysis and Application: N-grams are used for various NLP tasks, including text analysis, language modeling, and feature extraction. They can capture patterns, relationships between words, and context within the text.

Common types of n-grams include:

- Unigrams (1-grams): These are single words. For example, in the sentence "I love NLP," the unigrams are "I," "love," and "NLP."

- Bigrams (2-grams): These consist of pairs of consecutive words. In the same sentence, the bigrams would be "I love" and "love NLP."

- Trigrams (3-grams): These are sequences of three consecutive words. Since the sentence has only three words, its only trigram is "I love NLP."

- 4-grams, 5-grams, and so on: These consist of sequences of four, five, or more consecutive words, capturing longer patterns in the text.

N-grams are valuable in NLP for several reasons:

- Language Modeling: N-grams are used to build language models that estimate the likelihood of a sequence of words. This is essential in tasks like machine translation, speech recognition, and auto-completion.

- Text Classification: N-grams can be used as features in text classification tasks. For example, in sentiment analysis, bigrams or trigrams might reveal important sentiment-carrying phrases.

- Information Retrieval: In information retrieval systems and search engines, n-grams can help find documents or passages that match a given query by considering the sequence of words.

- Text Generation: N-grams are used in text generation models such as Markov chains, where the next word is predicted from the preceding \(n-1\) words.
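For language modeling, a bigram model estimates the probability of a word given the preceding word from counts, \(P(w_i \mid w_{i-1}) = \frac{\text{count}(w_{i-1}, w_i)}{\text{count}(w_{i-1})}\). The Python sketch below computes these maximum-likelihood estimates on a toy corpus (an assumption for illustration) without any smoothing, so word pairs never seen in training receive no probability at all.

```python
from collections import Counter


def bigram_probabilities(tokens: list[str]) -> dict[tuple[str, str], float]:
    # Count every word that has a successor, and every adjacent word pair
    context_counts = Counter(tokens[:-1])
    pair_counts = Counter(zip(tokens, tokens[1:]))
    # Maximum-likelihood estimate: count(prev, word) / count(prev)
    return {pair: n / context_counts[pair[0]] for pair, n in pair_counts.items()}


def predict_next(previous: str, probabilities):
    # Most probable successor of `previous`, or None if it never appeared as a context
    candidates = {w: p for (prev, w), p in probabilities.items() if prev == previous}
    return max(candidates, key=candidates.get) if candidates else None


tokens = "the cat sat on the mat the cat ran".split()
probs = bigram_probabilities(tokens)
print(round(probs[("the", "cat")], 3))  # 0.667
print(predict_next("the", probs))       # cat
```

"the" is followed twice by "cat" and once by "mat," so \(P(\text{cat} \mid \text{the}) = 2/3\); repeatedly calling `predict_next` is the simplest form of the Markov-chain text generation mentioned above.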

Example:

Consider the sentence: "The quick brown fox jumps over the lazy dog."

- Unigrams: ["The", "quick", "brown", "fox", "jumps", "over", "the", "lazy", "dog"]

- Bigrams: ["The quick", "quick brown", "brown fox", "fox jumps", "jumps over", "over the", "the lazy", "lazy dog"]

- Trigrams: ["The quick brown", "quick brown fox", "brown fox jumps", "fox jumps over", "jumps over the", "over the lazy", "the lazy dog"]
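The sliding-window construction takes one line of Python. In this sketch the n-grams are joined into strings to match the lists above, and the regular-expression tokenizer (which drops the final period) is an assumption.

```python
import re


def ngrams(tokens: list[str], n: int) -> list[str]:
    # Slide a window of size n over the tokens, one position at a time
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


tokens = re.findall(r"\w+", "The quick brown fox jumps over the lazy dog.")
print(ngrams(tokens, 2))
# ['The quick', 'quick brown', 'brown fox', 'fox jumps', 'jumps over', 'over the', 'the lazy', 'lazy dog']
print(len(ngrams(tokens, 3)))  # 7
```

A sequence of \(k\) tokens yields \(k - n + 1\) n-grams, so the nine tokens here produce eight bigrams and seven trigrams.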

N-grams help capture different levels of linguistic information. While unigrams are useful for basic analysis, bigrams and trigrams can reveal more complex patterns and relationships within the text, making them a versatile tool in NLP.

Bag of Words (BoW)

The Bag of Words (BoW) is a simple and widely used technique in Natural Language Processing (NLP) for representing text data as numerical features. It treats text as an unordered collection or "bag" of words, disregarding grammar, word order, and context. BoW represents the frequency of each word in a document, allowing text data to be used in machine learning models.

Here's how Bag of Words works:

- Text Preprocessing: Begin by preprocessing the text data, which typically includes tokenization (splitting text into words or tokens), removing stop words, and other text cleaning steps.

- Vocabulary Creation: Create a vocabulary or dictionary containing all unique words (or tokens) across the entire corpus of documents. Each unique word in the vocabulary is assigned a unique numerical index.

- Feature Extraction: For each document in the corpus, create a vector representing the frequency of each word in the vocabulary. This vector is typically of fixed length, with each element corresponding to a word in the vocabulary. The value in each element indicates the number of times the corresponding word appears in the document.

- Sparse Matrix: Since most documents only contain a subset of the words from the vocabulary, the resulting matrix is often sparse (i.e., it contains mostly zeros). This is a characteristic of BoW representations.

Example:

Consider a small corpus with three documents:

- Document 1: "I love natural language processing."

- Document 2: "NLP is fascinating."

- Document 3: "Text analysis and NLP are important."

Steps 1 and 2: Text Preprocessing and Vocabulary Creation

Tokenization (for simplicity, stop words are kept in this example) results in a vocabulary:

- ["I", "love", "natural", "language", "processing", "NLP", "is", "fascinating", "text", "analysis", "and", "are", "important"].

Steps 3 and 4: Feature Extraction and Sparse Matrix

Create a BoW representation for each document:

- Document 1: [1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]

- Document 2: [0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0]

- Document 3: [0, 0, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1]

Each vector represents the frequency of words in the corresponding document. For example, in Document 1, "I" appears once, "love" appears once, "natural" appears once, and so on, while "NLP" does not appear at all, so its position holds a 0.
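The BoW steps can be reproduced with a short Python sketch. It lowercases all tokens (so the vocabulary reads "i" and "nlp" rather than "I" and "NLP"), keeps stop words as in the example, and assigns indices in order of first appearance, which yields the vectors shown above.

```python
import re


def tokenize(text: str) -> list[str]:
    # Lowercased word tokens; punctuation is dropped and stop words are kept
    return re.findall(r"\w+", text.lower())


def build_vocabulary(documents: list[str]) -> dict[str, int]:
    vocabulary = {}
    for document in documents:
        for token in tokenize(document):
            if token not in vocabulary:
                vocabulary[token] = len(vocabulary)  # next free index
    return vocabulary


def bag_of_words(document: str, vocabulary: dict[str, int]) -> list[int]:
    vector = [0] * len(vocabulary)
    for token in tokenize(document):
        if token in vocabulary:  # words outside the vocabulary are ignored
            vector[vocabulary[token]] += 1
    return vector


corpus = [
    "I love natural language processing.",
    "NLP is fascinating.",
    "Text analysis and NLP are important.",
]
vocabulary = build_vocabulary(corpus)
print(list(vocabulary))
# ['i', 'love', 'natural', 'language', 'processing', 'nlp', 'is',
#  'fascinating', 'text', 'analysis', 'and', 'are', 'important']
for document in corpus:
    print(bag_of_words(document, vocabulary))
# [1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
# [0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0]
# [0, 0, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1]
```

For realistic vocabularies with tens of thousands of entries, these vectors would normally be stored in a sparse format that records only the non-zero positions.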

BoW representations are straightforward to create and are used in various NLP tasks like text classification, sentiment analysis, and document retrieval. However, they do not capture word order or semantics, making them less suitable for tasks that require understanding the meaning and context of words in sentences.

TF-IDF

TF-IDF, which stands for Term Frequency-Inverse Document Frequency, is a statistical measure used in Natural Language Processing (NLP) to evaluate the importance of a word or term within a document relative to a collection of documents, often referred to as a "corpus." TF-IDF is widely used for text analysis, information retrieval, and document ranking. It helps identify words or terms that are distinctive and relevant within a specific document compared to the entire corpus.

Here's how TF-IDF works:

- Term Frequency (TF): This component measures how frequently a term occurs in a document. It reflects the importance of a term within a specific document. TF is calculated using the formula:

\[TF(t, d) = \frac{\text{Number of times term } t \text{ appears in document } d}{\text{Total number of terms in document } d}\]

For example, if the term "apple" appears 5 times in a document with a total of 100 terms, the TF for "apple" in that document is 0.05.

- Inverse Document Frequency (IDF): IDF evaluates the importance of a term in the entire corpus by considering how frequently the term appears across all documents. The idea is to give higher weight to terms that are rare across the corpus. IDF is calculated using the formula:

\[IDF(t) = \log\left(\frac{\text{Total number of documents in the corpus}}{\text{Number of documents containing term } t}\right)\]

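The logarithm dampens the IDF values so that very rare terms do not receive disproportionately large weights. The base of the logarithm is a convention: changing it multiplies all IDF values by the same constant, so the relative ranking of terms is unaffected.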

For example, if the term "apple" appears in 100 out of 10,000 documents, the IDF for "apple" (using the natural logarithm) is \(\ln\left(\frac{10{,}000}{100}\right) = \ln(100) \approx 4.61\).

- TF-IDF Score: The TF-IDF score for a term in a document combines both the term frequency (TF) and the inverse document frequency (IDF) to measure the importance of the term within that document and across the corpus. It is calculated as:

\[TF\text{-IDF}(t, d) = TF(t, d) \times IDF(t)\]

For example, if the TF for "apple" in a document is 0.05 and the IDF for "apple" is 4.61, the TF-IDF score for "apple" in that document is approximately 0.23.
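The three formulas translate directly into Python. In this sketch the function names are illustrative, the natural logarithm is the assumed default base, and the IDF value of 4.61 is taken as given from the text rather than recomputed.

```python
import math


def tf(term_count: int, total_terms: int) -> float:
    # Share of the document's terms that are this term
    return term_count / total_terms


def idf(total_documents: int, documents_with_term: int, base: float = math.e) -> float:
    # log(N / df); natural logarithm unless another base is given
    return math.log(total_documents / documents_with_term, base)


def tf_idf(tf_value: float, idf_value: float) -> float:
    return tf_value * idf_value


tf_apple = tf(5, 100)                      # "apple" occurs 5 times among 100 terms
print(tf_apple)                            # 0.05
print(round(tf_idf(tf_apple, 4.61), 2))    # 0.23, using the IDF value quoted above
print(idf(100, 10, base=10))               # 1.0 for a term found in 10 of 100 documents
```

Note that `idf` raises `ZeroDivisionError` for a term that occurs in no document; practical implementations usually add a smoothing constant to avoid this.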

TF-IDF helps in several NLP tasks:

- Information Retrieval: TF-IDF is used to rank documents in search engines. Documents with higher TF-IDF scores for query terms are considered more relevant.

- Text Summarization: TF-IDF can help identify and extract important sentences or phrases in a document to create summaries.

- Document Clustering: TF-IDF is used to represent documents numerically, making it possible to group similar documents together.

- Topic Modeling: TF-IDF matrices can serve as input to some topic modeling algorithms, such as non-negative matrix factorization (NMF), to discover themes in a corpus.

- Keyword Extraction: TF-IDF is used to identify important keywords or terms within documents.

Example:

Consider two documents from a larger corpus:

- Document 1: "I love apples."

- Document 2: "Apples are delicious fruits. I eat apples every day."

Let's calculate the TF-IDF scores for the term "apples" in both documents (matching is case-insensitive, so "Apples" counts as an occurrence):

- TF for "apples" in Document 1: \(\frac{1}{3} \approx 0.33\) (1 occurrence among 3 terms)

- TF for "apples" in Document 2: \(\frac{2}{9} \approx 0.22\) (2 occurrences among 9 terms)

- IDF for "apples": assume the corpus contains 100 documents and "apples" appears in 10 of them. Using a base-10 logarithm, \(\log_{10}\left(\frac{100}{10}\right) = 1\)

Now, calculate the TF-IDF scores:

- TF-IDF for "apples" in Document 1: \(0.33 \times 1 = 0.33\)

- TF-IDF for "apples" in Document 2: \(0.22 \times 1 = 0.22\)

In this example, "apples" has a higher TF-IDF score in Document 1, indicating that it is relatively more important in Document 1 compared to Document 2. Document 2 contains the term more often, but it is also much longer, so the term makes up a smaller share of it.
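Counting terms directly from the text is easy to automate. The Python sketch below tokenizes the two documents case-insensitively, computes term frequencies, and multiplies them by an IDF computed under the example's assumption (100 documents, 10 of which contain "apples") with a base-10 logarithm, which gives the IDF of 1 used in the example.

```python
import math
import re
from collections import Counter


def term_frequencies(document: str) -> dict[str, float]:
    # Case-insensitive word tokens; punctuation is ignored
    tokens = re.findall(r"\w+", document.lower())
    counts = Counter(tokens)
    return {term: n / len(tokens) for term, n in counts.items()}


documents = [
    "I love apples.",
    "Apples are delicious fruits. I eat apples every day.",
]
# Assumption from the example: 100 documents in the corpus, 10 containing "apples"
idf_apples = math.log10(100 / 10)

for i, doc in enumerate(documents, start=1):
    tf_apples = term_frequencies(doc)["apples"]
    print(f"Document {i}: TF = {tf_apples:.2f}, TF-IDF = {tf_apples * idf_apples:.2f}")
# Document 1: TF = 0.33, TF-IDF = 0.33
# Document 2: TF = 0.22, TF-IDF = 0.22
```

If the IDF were computed from these two documents alone, "apples" would occur in every document and its IDF would be \(\log(2/2) = 0\), which is why the example assumes a larger corpus.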

Word Embeddings

Word embeddings are a representation of words in a continuous vector space, where words with similar meanings or contexts are closer to each other in the vector space. Word embeddings are a fundamental concept in Natural Language Processing (NLP) and are used to convert words into numerical vectors, making it possible for computers to understand and work with text data.

Here's how word embeddings work:

- Word Representation: Each word in a vocabulary is represented as a dense vector, often with hundreds of dimensions. These vectors capture semantic and syntactic relationships between words.

- Learning from Context: Word embeddings are learned from large text corpora, for example with shallow neural network models (Word2Vec, FastText), with models fitted to global word co-occurrence statistics (GloVe), or with transformer-based models (BERT, GPT), which produce a different vector for each context in which a word occurs. Prediction-based models such as Word2Vec consider the surrounding context words of each target word during training and adjust the word vectors to minimize the error of a context prediction task.

- Vector Space: The word vectors are placed in a continuous vector space in which words with similar meanings or contexts have vectors that lie closer together. Individual dimensions usually have no direct linguistic interpretation; the useful information lies in the distances and directions between vectors.

- Applications: Word embeddings have a wide range of applications in NLP. They are used for text classification, sentiment analysis, machine translation, named entity recognition, text generation, and more. They provide a foundation for various downstream NLP tasks by representing words in a meaningful way.

Example:

Let's consider a simplified example of word embeddings with a small vocabulary and a two-dimensional vector space:

Vocabulary: ["king", "queen", "man", "woman"]

After training a word embedding model on a large corpus of text, the word vectors might look like this (in a simplified two-dimensional space):

- "king": [0.9, 0.8]

- "queen": [0.85, 0.75]

- "man": [0.1, 0.2]

- "woman": [0.05, 0.15]

In this example, we can observe that words with similar meanings, such as "king" and "queen," have vectors that are close together in the vector space. Similarly, "man" and "woman" are close to each other, indicating that they share semantic similarity.

Word embeddings allow NLP models to capture relationships between words, understand analogies (e.g., "king" is to "man" as "queen" is to...), and perform tasks that require semantic understanding of language. They are an essential tool for representing and processing text data efficiently and effectively in various NLP applications.
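To make "close together" concrete, the sketch below measures cosine similarity between the toy vectors from the example and solves the analogy "king" - "man" + "woman" by picking the most similar remaining word. Cosine similarity is a common choice for comparing embeddings but is an assumption here, and the vectors are the illustrative values above, not the output of a trained model.

```python
import math

# Toy 2-D vectors from the example (illustrative, not trained).
embeddings = {
    "king": [0.9, 0.8],
    "queen": [0.85, 0.75],
    "man": [0.1, 0.2],
    "woman": [0.05, 0.15],
}

def cosine(u, v):
    """Cosine similarity: dot product divided by the product of the vector lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))

def analogy(a, b, c, vectors):
    """Return the word most similar to vec(a) - vec(b) + vec(c), excluding a, b and c."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine(vectors[w], target))

print(cosine(embeddings["king"], embeddings["queen"]))
print(cosine(embeddings["king"], embeddings["man"]))
print(analogy("king", "man", "woman", embeddings))
```

With these toy values, "king" - "man" + "woman" works out to [0.85, 0.75], which is exactly the vector for "queen". Excluding the query words, as embedding libraries commonly do, matters on a real vocabulary; with only four words it leaves "queen" as the sole candidate.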

Word2Vec

Word2Vec is a popular and influential word embedding technique in Natural Language Processing (NLP). It was introduced by Tomas Mikolov and his team at Google in 2013. Word2Vec is designed to learn word embeddings by considering the context of words in a large corpus of text. It is known for its ability to capture semantic relationships between words and has become a fundamental tool in NLP applications.

Here's how Word2Vec works:

- Word Context: Word2Vec operates on the assumption that words with similar meanings appear in similar contexts. It slides a window over each sentence and focuses on the target word at the center of the window and the context words around it.

- Learning Word Vectors: Word2Vec learns word vectors by training a shallow neural network with a single linear hidden layer on a large corpus of text. In the Continuous Bag of Words (CBOW) variant, it predicts the target word from its context words; in the Skip-gram variant, it predicts the context words from the target word. Training minimizes the prediction error, which is usually approximated with negative sampling or hierarchical softmax for efficiency.

- Vector Space: The result of Word2Vec training is a set of word vectors in a continuous vector space. These vectors typically have 100 to 300 dimensions, and words with similar meanings end up closer together in the vector space.

- Semantic Relationships: Word2Vec captures semantic relationships between words. For example, in the learned vector space, the vector "king" - "man" + "woman" lies closest to "queen." This shows that Word2Vec can capture analogies such as "king" is to "man" as "queen" is to "woman."

- Applications: Word2Vec word embeddings are used in many NLP applications, including text classification, sentiment analysis, machine translation, and recommendation systems. They provide a meaningful way to represent words for downstream tasks.
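The two training setups differ only in which side of the window serves as the input. The sketch below generates skip-gram (target, context) pairs and CBOW (context words, target) examples from one lowercased, punctuation-free sentence. The window size of 2 and the function names are illustrative choices; the reference implementation additionally varies the effective window size at random and subsamples frequent words.

```python
def skipgram_pairs(tokens, window=2):
    """Skip-gram: each target word is paired with every word within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """CBOW: the surrounding words jointly predict the center word."""
    examples = []
    for i, target in enumerate(tokens):
        context = [tokens[j]
                   for j in range(max(0, i - window), min(len(tokens), i + window + 1))
                   if j != i]
        examples.append((context, target))
    return examples

sentence = ["cats", "and", "dogs", "are", "pets"]
for target, context in skipgram_pairs(sentence)[:4]:
    print(f"skip-gram: {target} -> {context}")
for context, target in cbow_examples(sentence)[:2]:
    print(f"CBOW: {context} -> {target}")
```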

Example:

Consider a small corpus with the following sentences:

- "I love cats."

- "Dogs are friendly animals."

- "Cats and dogs are pets."

Let's use Word2Vec to create word embeddings for the words "cats," "dogs," and "pets." In this simplified example, we'll represent the vectors in a two-dimensional space.

After training, the word vectors might look like this (illustrative values):

- "cats": [0.9, 0.8]

- "dogs": [0.85, 0.75]

- "pets": [0.7, 0.6]

In this vector space, "cats" and "dogs" are close to each other because they appear in similar contexts. The word "pets" is also close to both, since it appears in the same sentence as "cats" and "dogs."
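The sketch below trains 2-dimensional skip-gram vectors on the three example sentences. It uses negative sampling, the training shortcut introduced with Word2Vec, instead of a full softmax. Negatives are drawn uniformly here, whereas the reference implementation draws them from the unigram distribution raised to the 0.75 power. All hyperparameters (window, learning rate, epochs, seed) are arbitrary assumptions, and on a corpus this small the resulting coordinates will not match the illustrative values above.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_skipgram_ns(sentences, dim=2, window=2, negatives=2, lr=0.05, epochs=200, seed=0):
    """Skip-gram with negative sampling (SGNS), trained with plain SGD in pure Python."""
    rng = random.Random(seed)
    vocab = sorted({w for s in sentences for w in s})
    # Input ("word") vectors start small and random; output ("context") vectors start at zero.
    w_in = {w: [rng.uniform(-0.5, 0.5) / dim for _ in range(dim)] for w in vocab}
    w_out = {w: [0.0] * dim for w in vocab}
    for _ in range(epochs):
        for sent in sentences:
            for i, target in enumerate(sent):
                for j in range(max(0, i - window), min(len(sent), i + window + 1)):
                    if j == i:
                        continue
                    # One observed context word (label 1) plus random negatives (label 0).
                    # Unlike the reference code, a negative equal to the positive is not skipped.
                    samples = [(sent[j], 1.0)]
                    samples += [(rng.choice(vocab), 0.0) for _ in range(negatives)]
                    v = w_in[target]
                    grad_v = [0.0] * dim
                    for word, label in samples:
                        u = w_out[word]
                        score = sigmoid(sum(a * b for a, b in zip(v, u)))
                        g = lr * (label - score)  # scaled negative gradient of the logistic loss
                        for k in range(dim):
                            grad_v[k] += g * u[k]  # uses u before it is updated
                            u[k] += g * v[k]
                    for k in range(dim):
                        v[k] += grad_v[k]
    return w_in

corpus = [
    ["i", "love", "cats"],
    ["dogs", "are", "friendly", "animals"],
    ["cats", "and", "dogs", "are", "pets"],
]
vectors = train_skipgram_ns(corpus)
for word in ("cats", "dogs", "pets"):
    print(word, [round(x, 2) for x in vectors[word]])
```

Only the input vectors are returned, as is conventional; the output vectors are a training by-product.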

Word2Vec embeddings allow NLP models to understand and leverage the semantic relationships between words in textual data, making them valuable for various language understanding tasks.

FastText

FastText is an extension of the Word2Vec word embedding model, introduced by Facebook AI Research in 2016. While Word2Vec generates word embeddings at the word level, FastText operates at the subword level, making it capable of handling out-of-vocabulary words and capturing morphological information more effectively. It's particularly useful for languages with rich inflection and agglutination, where words can be composed of multiple meaningful subword units.

Here's how FastText works:

- Subword Representation: Instead of treating words as atomic units, FastText breaks each word into character n-grams. The word is first wrapped in the boundary symbols "<" and ">", so with n = 3 "cat" is represented by "<ca", "cat", and "at>", plus the special sequence "<cat>" for the whole word (the reference implementation uses all n-grams of length 3 to 6 by default). FastText learns an embedding for each of these subword units, which lets it capture information about word morphology, prefixes, and suffixes, and build vectors even for rare or previously unseen words.

- Learning Word Vectors: Like Word2Vec, FastText trains a shallow neural network to predict context words from target words or vice versa, but it represents each word through its subword units. It learns embeddings for both subword units and complete words during training.

- Vector Aggregation: A word's vector is obtained by summing or averaging the vectors of its subword units (and of the word itself, if it is in the vocabulary). For an out-of-vocabulary word, the same aggregation over its n-grams still produces a vector that can be used for downstream NLP tasks.

- Applications: FastText word embeddings can be used in the same way as traditional word embeddings. They are valuable for text classification, sentiment analysis, document retrieval, and other NLP tasks, and are especially beneficial for languages with complex word forms and a high degree of inflection.
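The subword scheme can be sketched as follows: wrap the word in boundary symbols, extract its character n-grams, hash each n-gram into a fixed number of buckets, and average the bucket vectors. The 3 to 6 n-gram range mirrors fastText's defaults; the bucket count of 1,000, the tiny dimension, the random (untrained) table, and this FNV-1a hash (similar in spirit to the one fastText uses) are simplifying assumptions. The real library also adds a separate vector for in-vocabulary words.

```python
import random

MINN, MAXN = 3, 6   # n-gram lengths (fastText's defaults)
DIM = 4             # tiny dimension for illustration
BUCKETS = 1000      # assumption; fastText defaults to 2,000,000 buckets

def char_ngrams(word, minn=MINN, maxn=MAXN):
    """Character n-grams of the word wrapped in '<' and '>' boundary symbols."""
    token = f"<{word}>"
    return [token[i:i + n]
            for n in range(minn, maxn + 1)
            for i in range(len(token) - n + 1)]

def fnv1a(text):
    """32-bit FNV-1a hash, used here to map n-grams to bucket indices."""
    h = 2166136261
    for byte in text.encode("utf-8"):
        h = ((h ^ byte) * 16777619) & 0xFFFFFFFF
    return h

# Untrained, randomly initialized n-gram vectors; training would update these rows.
rng = random.Random(0)
ngram_table = [[rng.uniform(-0.1, 0.1) for _ in range(DIM)] for _ in range(BUCKETS)]

def word_vector(word):
    """Average the bucket vectors of the word's n-grams."""
    rows = [ngram_table[fnv1a(g) % BUCKETS] for g in char_ngrams(word)]
    return [sum(col) / len(rows) for col in zip(*rows)]

print(char_ngrams("cat", 3, 3))
print(word_vector("catlike"))  # an unseen word still gets a vector from its n-grams
```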

Example:

Let's consider a small corpus with the following sentences:

- "I love cats."

- "Dogs are friendly animals."

- "Cats and dogs are pets."

After training FastText on this corpus, we can build vectors for words like "cats," "dogs," and "pets" by summing the embeddings of their subword units. In this simplified example, we'll represent the vectors in a two-dimensional space.

With boundary symbols and n = 3, the subword units of "cats" are "<ca", "cat", "ats", and "ts>", plus the whole-word sequence "<cats>". Summing their vectors might give:

- "cats": [0.3, 0.2]

The subword units of "dogs" are "<do", "dog", "ogs", and "gs>", plus "<dogs>":

- "dogs": [0.4, 0.1]

The subword units of "pets" are "<pe", "pet", "ets", and "ts>", plus "<pets>":

- "pets": [0.25, 0.3]

In this vector space, "cats" and "dogs" are close to each other because of the contexts they appear in; they share no subword units, so spelling does not explain their similarity. The word "pets" is also close to both, and "cats" and "pets" additionally share the subword "ts>", which can pull their vectors together.
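Which subword units do the three example words actually share? The short check below applies the boundary-symbol scheme with fastText's default n-gram lengths of 3 to 6 (an assumption for this example). It finds that "cats" and "pets" share only "ts>", while "dogs" shares no n-gram with either word, so any closeness between "cats" and "dogs" has to come from their contexts rather than their spelling.

```python
from itertools import combinations

def char_ngrams(word, minn=3, maxn=6):
    """Set of character n-grams of '<word>' (boundary symbols included)."""
    token = f"<{word}>"
    return {token[i:i + n]
            for n in range(minn, maxn + 1)
            for i in range(len(token) - n + 1)}

words = ["cats", "dogs", "pets"]
for a, b in combinations(words, 2):
    shared = sorted(char_ngrams(a) & char_ngrams(b))
    print(f"{a} / {b}: {shared}")
```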

FastText's ability to handle subword units makes it robust in capturing word meanings and morphological variations, making it a powerful tool for various NLP tasks across languages with diverse word structures.

GloVe

GloVe, which stands for Global Vectors for Word Representation, is a word embedding model introduced by researchers at Stanford University in 2014. It is a popular technique in Natural Language Processing (NLP) used to learn vector representations of words based on their co-occurrence statistics in a large corpus of text. GloVe embeddings are designed to capture both the syntactic and semantic meaning of words, making them useful for various NLP tasks.

Here's how GloVe works:

- Word Co-Occurrence Matrix: GloVe starts by constructing a word co-occurrence matrix from the input corpus. Each element \(X_{ij}\) counts how often word \(j\) appears in the context of word \(i\), where the context is usually a symmetric window of a fixed number of words around word \(i\).

- Objective Function: GloVe learns word vectors whose dot products reflect co-occurrence statistics. Concretely, it learns word vectors \(w_i\), context vectors \(\tilde{w}_j\), and biases \(b_i\), \(\tilde{b}_j\) such that \(w_i^\top \tilde{w}_j + b_i + \tilde{b}_j \approx \log X_{ij}\); the bias terms absorb the normalization that turns counts into probabilities. The fit uses a weighted least-squares objective in which a weighting function limits the influence of very rare and very frequent word pairs.

- Training: GloVe minimizes this objective with AdaGrad, a variant of stochastic gradient descent, iterating over the nonzero entries of the co-occurrence matrix and adjusting the vectors to better fit the co-occurrence data.

- Vector Space: The result of training is a set of word vectors in a continuous vector space. Individual dimensions are usually not interpretable on their own; linguistic properties and relationships appear as distances and directions between vectors. Words with similar meanings or contexts tend to have similar vectors in this space.

- Applications: GloVe embeddings can be used in a wide range of NLP applications. They provide a dense, fixed-size representation of words, making them suitable for text classification, sentiment analysis, machine translation, information retrieval, and more.
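For reference, the weighted least-squares objective from the GloVe paper, with word vectors \(w_i\), context vectors \(\tilde{w}_j\), biases \(b_i\) and \(\tilde{b}_j\), co-occurrence counts \(X_{ij}\), and vocabulary size \(V\), is:

\[
J = \sum_{i,j=1}^{V} f(X_{ij})\left(w_i^\top \tilde{w}_j + b_i + \tilde{b}_j - \log X_{ij}\right)^2,
\qquad
f(x) = \begin{cases} (x/x_{\max})^{\alpha} & \text{if } x < x_{\max} \\ 1 & \text{otherwise} \end{cases}
\]

The paper uses \(x_{\max} = 100\) and \(\alpha = 3/4\). Because \(f(0) = 0\), pairs that never co-occur contribute nothing, so the sum only needs the nonzero entries. The sketch below evaluates \(J\) over a sparse dictionary of nonzero counts; the data layout and the toy numbers are assumptions for illustration.

```python
import math

def glove_weight(x, x_max=100.0, alpha=0.75):
    """GloVe weighting f(x): down-weights rare pairs and caps frequent ones at 1."""
    return (x / x_max) ** alpha if x < x_max else 1.0

def glove_loss(cooc, w, w_ctx, b, b_ctx):
    """Weighted least-squares loss J summed over the nonzero entries X_ij in `cooc`."""
    total = 0.0
    for (i, j), x_ij in cooc.items():
        pred = sum(a * c for a, c in zip(w[i], w_ctx[j])) + b[i] + b_ctx[j]
        total += glove_weight(x_ij) * (pred - math.log(x_ij)) ** 2
    return total

# Hypothetical toy data: two words, 2-D vectors, symmetric co-occurrence counts.
cooc = {(0, 1): 4.0, (1, 0): 4.0}
w = [[0.1, 0.2], [0.3, -0.1]]
w_ctx = [[0.2, 0.0], [0.1, 0.4]]
b, b_ctx = [0.0, 0.0], [0.0, 0.0]
print(glove_loss(cooc, w, w_ctx, b, b_ctx))
```

Training would adjust `w`, `w_ctx`, `b`, and `b_ctx` to drive this loss down; the loss is zero only when every prediction matches \(\log X_{ij}\).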

Example:

Consider a simplified corpus with the following sentences:

- "I love natural language processing."

- "NLP is fascinating."

- "Text analysis and NLP are important."

After training GloVe on this corpus, we obtain word embeddings. In practice, these embeddings have hundreds of dimensions, but for simplicity, let's assume a two-dimensional space:

- "natural": [0.9, 0.8]

- "language": [0.7, 0.6]

- "processing": [0.6, 0.9]

In this vector space, "natural" and "language" are close to each other because they co-occur in the corpus (they are adjacent in the first sentence), indicating that they are related. Similarly, "language" and "processing" are close due to their co-occurrence patterns.
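The co-occurrence counts behind this example can be computed directly. This sketch uses a symmetric window of 2 words and weights each pair by \(1/d\), where \(d\) is the distance between the two words, in the manner of the GloVe reference implementation's default distance weighting. The lowercase, punctuation-free tokenization and the window size are assumptions. Within this corpus, "natural" and "language" co-occur exactly once, at distance 1.

```python
from collections import defaultdict

def cooccurrence(sentences, window=2):
    """Symmetric co-occurrence counts X[(word, context)], each pair weighted by 1 / distance."""
    X = defaultdict(float)
    for tokens in sentences:
        for i, word in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    X[(word, tokens[j])] += 1.0 / abs(i - j)
    return X

corpus = [
    ["i", "love", "natural", "language", "processing"],
    ["nlp", "is", "fascinating"],
    ["text", "analysis", "and", "nlp", "are", "important"],
]
X = cooccurrence(corpus)
for pair in [("natural", "language"), ("language", "processing"), ("natural", "processing")]:
    print(pair, X[pair])
```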

GloVe embeddings are known for their ability to capture nuanced semantic relationships between words, and they have become a fundamental tool in many NLP tasks, contributing to the advancement of language understanding and text analysis.