Knowledge Representation and Reasoning

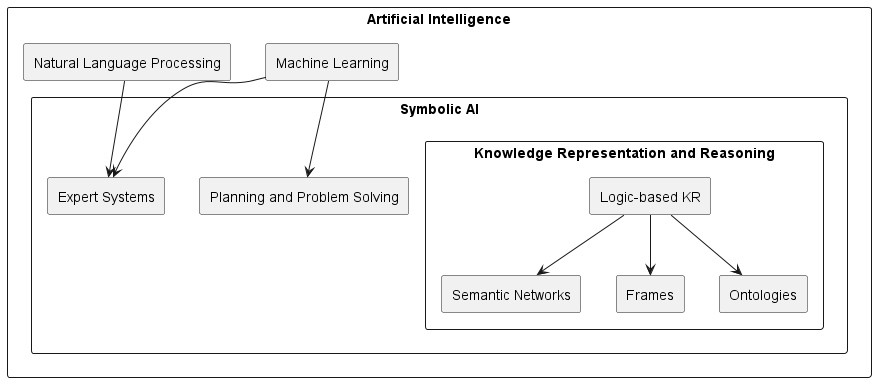

@fig:knowledge-representation-and-reasoning

Knowledge Representation (KR) refers to the process of encoding information in a manner that can be understood and processed by AI systems. It involves selecting appropriate structures and formalisms to represent knowledge, enabling machines to reason and make intelligent decisions. KR plays a vital role in bridging the gap between raw data and meaningful information, allowing AI systems to comprehend and utilize knowledge effectively.

Reasoning, on the other hand, is the process of drawing logical inferences from the represented knowledge. It involves using rules, algorithms, and logical frameworks to derive new information or make informed decisions based on existing knowledge. Reasoning mechanisms enable AI systems to perform tasks such as problem-solving, planning, decision-making, and even natural language understanding.

Knowledge Representation

As we delve into the world of knowledge representation, we're about to explore different ways to organize information. Think of it like putting puzzle pieces together to make sense of things. We'll start by looking at "Ontologies," which are like family trees for ideas, showing how they're connected. Then, we'll dive into "Logic-based representations," where we use rules to figure out new things from what we already know. "Semantic networks" will be our next stop, showing how ideas are linked like friends on social media. After that, we'll check out "Frames and Scripts," which are like templates for common situations and events. Lastly, we'll peek into "Probabilistic representations," which help us understand how likely something is to be true.

While some knowledge representation approaches like ontologies, logic-based representations, semantic networks, and frames are commonly used in Symbolic AI for explicit knowledge representation and rule-based reasoning, probabilistic representations are central to Machine Learning and Deep Learning for handling uncertainty and learning from data. The choice of representation depends on the problem's nature and the AI paradigm employed.

Ontologies

Ontologies are a distinguishing feature of Symbolic AI. They are formal representations of knowledge that capture the concepts, relationships, and properties within a specific domain. They provide a structured and organized way to represent knowledge, allowing AI systems to reason and infer new information. Ontologies are widely used in various applications, including semantic web, information retrieval, and knowledge-based systems.

Imagine you're building a digital library for all the books ever written. Each book talks about different topics, characters, and places. To make sense of all this information, you create an "ontology," which is like a giant map that shows how everything is related. In this case, your ontology would have categories for genres like mystery, romance, and science fiction. It would also connect characters to their roles, like detective or villain, and link places to their descriptions. This structured map helps your library's AI system organize, search, and understand the books better. So, when a user asks for books with detectives in a city setting, the AI can quickly find the right ones because it knows the relationships between concepts. Ontologies make your library smarter, just like they do for the semantic web, search engines, and other systems that need to manage complex information.
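The library example can be sketched as a handful of subject-predicate-object facts plus a query that follows the relationships. This is only an illustrative sketch: the book titles, character names, and predicate names (`has_character`, `has_role`, `set_in`, `is_a`) are made up for the example and do not come from any particular ontology language or library.

```python
# Hypothetical facts stored as (subject, predicate, object) triples.
TRIPLES = {
    ("The Midnight Case", "has_character", "Inspector Vale"),
    ("Inspector Vale", "has_role", "detective"),
    ("The Midnight Case", "set_in", "Harbor City"),
    ("Harbor City", "is_a", "city"),
    ("Harvest Hearts", "has_character", "Ana"),
    ("Ana", "has_role", "farmer"),
    ("Harvest Hearts", "set_in", "Elm Village"),
    ("Elm Village", "is_a", "village"),
}


def objects(subject, predicate):
    """Return every object linked to `subject` by `predicate`."""
    return {o for s, p, o in TRIPLES if s == subject and p == predicate}


def books_with(role, place_type):
    """Find books that have a character with `role` and a setting of `place_type`."""
    books = {s for s, p, _ in TRIPLES if p == "has_character"}
    result = []
    for book in books:
        has_role = any(role in objects(c, "has_role")
                       for c in objects(book, "has_character"))
        in_place = any(place_type in objects(pl, "is_a")
                       for pl in objects(book, "set_in"))
        if has_role and in_place:
            result.append(book)
    return sorted(result)


print(books_with("detective", "city"))  # ['The Midnight Case']
```

The query never mentions a specific book or place; it succeeds because the relationships between concepts are stored explicitly.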

Ontologies act as detailed roadmaps that guide AI systems through the intricate landscape of knowledge. They not only define the individual concepts within a domain but also map out how these concepts relate to each other. Imagine an ontology as a family tree, where each concept is a family member and their connections illustrate relationships such as "is-a," "part-of," or "has-property." This rich web of connections empowers AI to navigate complex associations, making it more adept at answering questions and drawing insights.

One of the key strengths of ontologies lies in their ability to foster consistent understanding among both humans and machines. By setting up a common vocabulary and clear definitions, ontologies bridge the gap between different perspectives and interpretations. This standardized representation is particularly useful in scenarios where multiple parties collaborate or where data from diverse sources needs to be harmonized. Additionally, ontologies can accommodate the evolution of knowledge over time, allowing for updates and adjustments without disrupting the overall structure.

The applications of ontologies are far-reaching. In the realm of the semantic web, they serve as the backbone for sharing and linking data across various platforms. Information retrieval systems leverage ontologies to improve the accuracy and relevance of search results by understanding the context and relationships behind keywords. Knowledge-based systems, on the other hand, utilize ontologies to enable intelligent reasoning. When an AI system encounters new information, it can use the ontology to deduce logical conclusions and even suggest insights that might not be explicitly stated.

In essence, ontologies provide a powerful framework for encoding and navigating knowledge. Their ability to capture complex relationships and guide AI systems' reasoning makes them a cornerstone of modern knowledge representation, shaping the way machines understand and interact with the world's ever-expanding pool of information.

Logic-based Representations

Logic-based representations are a fundamental part of Symbolic AI. They use specific languages like propositional logic, first-order logic, or higher-order logic to express knowledge. These representations allow for precise and clear reasoning because they follow well-defined rules of inference. They are particularly valuable in areas where reasoning and deduction are crucial, like expert systems and automated theorem proving. Think of these languages as special codes that computers can understand to organize and interpret information. These representations act like building blocks, where each piece of information fits perfectly into its designated place. This approach ensures that there is no confusion or ambiguity, making the conclusions drawn from these representations reliable and solid. When we need to analyze complex situations and reach logical conclusions, logic-based representations prove to be extremely useful.

For instance, in expert systems, which are like digital experts in specific fields, logic-based representations play a crucial role. Imagine you're designing a medical expert system. You'd use logic-based representations to capture medical knowledge, like symptoms, diseases, and treatment options. By applying logical rules, the system can analyze the symptoms provided by a patient and deduce potential diseases, making the diagnostic process more efficient and accurate.

Another area where logic-based representations shine is in automated theorem proving. This might sound like something out of a sci-fi movie, but it's actually a fascinating field of computer science. Essentially, it involves computers using logic-based representations to prove mathematical theorems or validate complex mathematical statements. This has far-reaching implications, from ensuring the accuracy of software and hardware designs to advancing our understanding of mathematical concepts.

Imagine you're planning a vacation, and you want to use a logic-based representation to organize your travel preferences. You could use a formal language like propositional logic to break down your choices into clear statements. Let's say you want a vacation with both sunny weather and access to museums. You could represent "sunny weather" as the proposition A and "access to museums" as the proposition B. Now, you want a vacation that includes both A and B. In formal logic, this is expressed as A AND B, usually written A ∧ B, which is true only when both A and B are true. This logical representation gives your vacation plan a precise test that every destination must pass. If a travel option doesn't have both sunny weather (A) and access to museums (B), it doesn't fit the logical criteria you've set.

Using logic-based representations helps you avoid any mix-ups or misunderstandings. You're essentially creating a system where your vacation choices are like pieces of a puzzle, fitting together perfectly if they meet the logical conditions you've defined. This way, you can confidently choose your vacation spot based on logical conclusions drawn from your representation. Whether you're planning a trip or solving complex problems, logic-based representations provide a clear and dependable way to make sense of information and reach solid conclusions.
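As a minimal sketch of the vacation example, the proposition A AND B can be evaluated directly against candidate destinations. The destination names and their truth values below are invented for illustration.

```python
def fits_plan(sunny: bool, museums: bool) -> bool:
    """Evaluate the proposition A AND B (A = sunny weather, B = access to museums)."""
    return sunny and museums


# Hypothetical destinations with made-up truth values for A and B.
destinations = {
    "Coastville": {"sunny": True, "museums": True},
    "Rainford": {"sunny": False, "museums": True},
    "Dune Camp": {"sunny": True, "museums": False},
}

matches = [name for name, d in destinations.items()
           if fits_plan(d["sunny"], d["museums"])]
print(matches)  # ['Coastville']
```

Only the destination for which both propositions hold satisfies the conjunction; the other two each fail one half of it.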

So, in a nutshell, logic-based representations are like the building blocks of clear and sound reasoning. They help us make sense of intricate information, making them an indispensable tool in various fields where precision and accuracy are paramount.

Semantic Networks

Semantic networks are another representation commonly used in Symbolic AI. They represent knowledge as a network of interconnected nodes, where each node represents a concept or an entity, and the edges represent relationships between them. Semantic networks provide a graphical and intuitive way to represent knowledge, making it easier for AI systems to reason and navigate through the knowledge graph. They are commonly used in natural language processing, knowledge-based systems, and cognitive modeling.

Imagine you're planning a trip to a new city, and you want to explore its attractions, transportation, and local cuisine. Now, think of a semantic network as a giant mind map that helps you organize all this information. In this network, each node represents a concept like "Tourist Attractions," "Public Transportation," and "Restaurants." The lines connecting these nodes are like paths that show how these concepts are related. For example, there would be edges connecting "Tourist Attractions" to more specific categories like "Historical Landmarks," "Museums," and "Parks." These edges represent the relationships between the concepts, helping you understand that historical landmarks are a type of tourist attraction.

In this travel scenario, the semantic network becomes your mental guide. It's like having a visual map of the city's knowledge, where you can quickly see how different ideas are linked. If you're looking for the best way to get around, you can follow the edges from "Public Transportation" to "Subway," "Buses," and "Taxis." This way, you're using the semantic network to navigate through the web of knowledge, just like an AI system does.

Going a bit deeper, let's unravel the intricacies of semantic networks. These networks work like a web of interconnected ideas, where each "node" is like a puzzle piece holding a specific concept or thing. These nodes could be as simple as "cat" or as complex as "space exploration." What makes them powerful is how they're linked together by "edges," like lines connecting puzzle pieces. These edges symbolize various relationships – things like "is a," "part of," "causes," and more. For instance, an edge might connect "cat" to "animal," showing the relationship that a cat is a type of animal. This web of nodes and edges creates a visual representation of how knowledge is related, and AI systems can follow these connections to gather insights.
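A semantic network of this kind can be sketched as a list of labeled edges. The sketch below assumes a tiny hand-written network; the "living thing" and "whiskers" nodes and the relation names are illustrative additions, not part of any standard vocabulary.

```python
# Each edge is (source node, relation label, target node).
EDGES = [
    ("cat", "is_a", "animal"),
    ("cat", "has_property", "cute"),
    ("whiskers", "part_of", "cat"),
    ("animal", "is_a", "living thing"),
]


def neighbors(node, relation):
    """Return the nodes reachable from `node` over one edge labeled `relation`."""
    return [t for s, r, t in EDGES if s == node and r == relation]


def is_a(node, category):
    """Follow "is_a" edges upward to test whether `node` falls under `category`."""
    frontier = [node]
    seen = set()
    while frontier:
        current = frontier.pop()
        if current == category:
            return True
        if current in seen:
            continue
        seen.add(current)
        frontier.extend(neighbors(current, "is_a"))
    return False


print(is_a("cat", "living thing"))  # True
print(is_a("animal", "cat"))        # False
```

The network never states that a cat is a living thing; that conclusion comes from chaining two "is_a" edges together.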

Imagine using this network of ideas to understand text. When an AI reads a sentence like "Cats are cute pets," it can link "cats" to "pets" and to the property "cute," and then follow an existing "is a" edge from "cats" to "animals." This helps the AI grasp that cats are pets, animals, and adorable all at once. These networks are like roadmaps for AI reasoning: they help systems find their way through large amounts of information, making logical connections along the way.

Semantic networks find their place in various applications. In natural language processing, they assist machines in understanding the context of words and sentences, which is crucial for accurate language understanding and translation. Knowledge-based systems, which are like digital experts, use these networks to solve complex problems by navigating the web of relationships between concepts. Cognitive modeling, a field that aims to replicate human thought processes, employs semantic networks to simulate how humans think and make decisions based on interconnected ideas.

In essence, semantic networks are bridges that span the gap between human-like understanding and machine reasoning. They provide a tangible way for AI to grasp the intricate web of knowledge, opening doors to more sophisticated and context-aware interactions between humans and machines.

Frames and Scripts

Frames and scripts are used to represent structured knowledge in Symbolic AI. They break down knowledge into frames or scripts, with slots and fillers to capture information. Frames represent objects or concepts along with their attributes and relationships, while scripts represent sequences of events or actions.

Frames and scripts let us represent specific situations and events in more detail. Let's start with frames: think of them as detailed templates that encapsulate information about particular objects, concepts, or scenarios. Each frame consists of slots that hold attributes or properties related to the concept. For instance, if we have a "car" frame, it might include slots for attributes like "color," "model," and "year." This structured framework enables us to quickly comprehend and infer details about the object.
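The "car" frame can be sketched as a small class with named slots. The `Frame` class here is hypothetical, and two details go beyond the text: the `wheels` slot and the idea that a specific frame falls back on default values from a more general parent frame.

```python
class Frame:
    """A frame with named slots and an optional parent frame for defaults."""

    def __init__(self, name, parent=None, **slots):
        self.name = name
        self.parent = parent
        self.slots = dict(slots)

    def get(self, slot):
        """Look up a slot here first, then in parent frames; None if unfilled."""
        frame = self
        while frame is not None:
            if slot in frame.slots:
                return frame.slots[slot]
            frame = frame.parent
        return None


# Generic "car" frame with an assumed default, and one specific instance.
car = Frame("car", wheels=4)
my_car = Frame("my_car", parent=car, color="red", model="hatchback", year=2015)

print(my_car.get("color"))   # red
print(my_car.get("wheels"))  # 4
print(my_car.get("owner"))   # None
```

The lookup for `wheels` shows how a frame can fill in details that were never stated about the specific object.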

Scripts, on the other hand, take us even further by capturing the dynamic aspect of knowledge – sequences of events or actions that typically unfold in a particular order. Picture scripts as mental blueprints for familiar scenarios, like going to a restaurant or watching a movie. Each script encompasses the steps involved and the roles of various participants. When we encounter a situation that matches a script, we effortlessly anticipate the next steps and expectations. Think of it like following a recipe – we know the steps to take and what to expect at each stage.
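A script can be sketched as an ordered list of steps that supports two simple operations: anticipating the next step and filling in steps nobody mentioned. The step names below are an assumed, simplified version of a restaurant visit.

```python
# Hypothetical restaurant script: the typical order of events.
RESTAURANT_SCRIPT = ["enter", "be seated", "order", "eat", "pay", "leave"]


def expected_next(script, observed):
    """Return the step that normally follows `observed`, or None at the end."""
    i = script.index(observed)
    return script[i + 1] if i + 1 < len(script) else None


def missing_steps(script, observed_steps):
    """Return script steps that were not observed, in script order."""
    return [step for step in script if step not in observed_steps]


print(expected_next(RESTAURANT_SCRIPT, "order"))
# eat
print(missing_steps(RESTAURANT_SCRIPT, ["enter", "eat", "leave"]))
# ['be seated', 'order', 'pay']
```

If a story only says that someone entered a restaurant, ate, and left, the script lets a system assume that they were also seated, ordered, and paid.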

The strength of frames and scripts lies in their ability to model common-sense knowledge and reasoning about everyday situations. They help us make predictions, fill in missing information, and understand the relationships between various elements in a scenario. These techniques are vital for artificial intelligence systems aiming to grasp human-like comprehension of the world, as they capture the nuanced intricacies of how we interpret and navigate our surroundings. As we continue our journey through knowledge representation, keep in mind that frames and scripts are like the storytellers of the knowledge world, weaving together information to form cohesive narratives of reality.

Probabilistic Representations

Probabilistic representations are a cornerstone of Machine Learning and Deep Learning. They deal with uncertain or incomplete knowledge by assigning probabilities to different propositions. These representations enable AI systems to reason under uncertainty and make probabilistic inferences. Bayesian networks and Markov models are commonly used probabilistic representations in AI applications such as decision-making, pattern recognition, and machine learning.

Probabilistic representations take us into the realm of uncertainty and incomplete information, offering a way to handle situations where we're not completely sure about things. Imagine you're predicting the weather – you might not be 100% sure if it'll rain, but you can estimate the chances. That's what probabilistic representations do. They attach probabilities to different ideas or statements, showing how likely they are to be true. This is super useful for AI systems because it allows them to think and make decisions when things are a bit fuzzy.

One popular tool in this area is the "Bayesian network." It's like a map that shows how different pieces of information connect and influence each other. Let's say you're diagnosing a medical condition; you might not have all the test results, but you can use a Bayesian network to calculate the chances of different diseases based on the information you do have. Another tool, the "Markov model," is all about understanding patterns in sequences. Think of it like predicting the next word in a sentence: you look at the word (or the last few words) that came just before and make an educated guess.
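The next-word idea can be sketched as a first-order Markov model built from word-pair counts. The toy corpus and function names are invented for this example, and a realistic model would need far more text and some smoothing for unseen words.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts


def next_word_probs(counts, word):
    """Estimate P(next word | current word) from the counts."""
    total = sum(counts[word].values())
    if total == 0:
        return {}
    return {w: c / total for w, c in counts[word].items()}


corpus = "the cat sat on the mat the cat ran"  # made-up toy corpus
model = train_bigrams(corpus)
probs = next_word_probs(model, "the")
print(max(probs, key=probs.get))  # cat
```

In this corpus "the" is followed by "cat" twice and "mat" once, so the model estimates a probability of 2/3 for "cat" and 1/3 for "mat".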

These probabilistic representations play a significant role in AI applications like decision-making. Imagine a self-driving car trying to figure out if it's safe to change lanes on a busy highway – it uses probabilities to make a smart choice. Pattern recognition is another area where these representations shine. Let's say you're teaching a computer to recognize handwritten numbers; probabilistic models help it learn which features are more likely for each number. And in machine learning, these representations are the backbone of algorithms that learn from data, adapting and improving as they gather more information.

Reasoning

Reasoning is the process of drawing inferences, making decisions, and solving problems from represented knowledge. In Symbolic AI it operates on explicit symbols and rules, while probabilistic approaches work with degrees of belief. Each of the knowledge representation approaches described above is paired with a reasoning mechanism suited to its structure.

Description Logic Reasoning

Description logic reasoning, a form of deductive reasoning, is well suited to ontologies because of its precision and its grounding in formal logic. It helps keep the represented knowledge consistent and accurate. Within ontologies, reasoning covers essential tasks such as subsumption (determining whether one class is a subclass of another), consistency checking (ensuring the ontology contains no logical contradictions), and instance classification (assigning individuals to specific classes). For example, in a medical ontology where "Diabetes" is defined as a subclass of "Metabolic Disorder," a reasoner can infer that a patient classified under "Diabetes" also has a "Metabolic Disorder."
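Subsumption and instance classification can be sketched over a simple subclass table. This is a deliberately reduced sketch: it assumes each class has at most one parent and that the hierarchy has no cycles, whereas real description logic reasoners handle much richer class definitions. The "Type 2 Diabetes" and "Disease" classes are added for illustration.

```python
# Hypothetical class hierarchy: child class -> direct parent class.
SUBCLASS_OF = {
    "Type 2 Diabetes": "Diabetes",
    "Diabetes": "Metabolic Disorder",
    "Metabolic Disorder": "Disease",
}


def ancestors(cls):
    """Return all superclasses of `cls`, nearest first."""
    result = []
    while cls in SUBCLASS_OF:
        cls = SUBCLASS_OF[cls]
        result.append(cls)
    return result


def is_subsumed_by(sub, sup):
    """Subsumption check: is every member of `sub` also a member of `sup`?"""
    return sub == sup or sup in ancestors(sub)


def classify(asserted_class):
    """Instance classification: every class an individual belongs to."""
    return [asserted_class] + ancestors(asserted_class)


print(is_subsumed_by("Diabetes", "Metabolic Disorder"))  # True
print(classify("Diabetes"))
# ['Diabetes', 'Metabolic Disorder', 'Disease']
```

A patient asserted to be in "Diabetes" is placed in "Metabolic Disorder" without anyone stating that fact directly.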

Deductive Reasoning

Deductive reasoning pairs naturally with logic-based representations, using formal logic to work with explicit, rule-based knowledge. In logic-based systems, it applies inference rules such as modus ponens (from "P" and "if P then Q," conclude "Q") or resolution to derive new facts or validate hypotheses. Consider, for instance, a logic-based expert system for legal advice, which uses deductive reasoning to determine the legality of a specific action based on established legal principles.
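Repeated application of modus ponens can be sketched as forward chaining over if-then rules. The two rules below are invented placeholders in the spirit of the legal example and are not real legal principles.

```python
# Each rule is (set of premises, conclusion). Rule contents are hypothetical.
RULES = [
    ({"signed_contract", "parties_of_legal_age"}, "contract_valid"),
    ({"contract_valid", "payment_missed"}, "breach_of_contract"),
]


def forward_chain(facts, rules):
    """Apply modus ponens until no rule can add a new fact."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # If all premises are known, the conclusion follows.
            if premises <= derived and conclusion not in derived:
                derived.add(conclusion)
                changed = True
    return derived


known = {"signed_contract", "parties_of_legal_age", "payment_missed"}
print("breach_of_contract" in forward_chain(known, RULES))  # True
```

The second rule only fires after the first has derived `contract_valid`, which is why the loop keeps running until nothing changes.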

Graph-based Reasoning

Graph-based reasoning pairs naturally with semantic networks, offering an intuitive way to uncover relationships and patterns in the represented knowledge. Here, reasoning means traversing the graph with algorithms such as depth-first search or breadth-first search. The traversal retrieves relevant information and infers relationships between concept nodes. For example, a semantic network that models a social network could use graph-based reasoning to find the connections two individuals share.
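The social network example can be sketched with an adjacency map, a set intersection for shared connections, and a breadth-first search for the shortest chain between two people. The names and friendships are made up for the example.

```python
from collections import deque

# Hypothetical undirected friendship graph: person -> set of direct connections.
GRAPH = {
    "Ana": {"Ben", "Chen"},
    "Ben": {"Ana", "Dara"},
    "Chen": {"Ana", "Dara"},
    "Dara": {"Ben", "Chen", "Eli"},
    "Eli": {"Dara"},
}


def shared_connections(a, b):
    """People directly connected to both `a` and `b`."""
    return sorted(GRAPH[a] & GRAPH[b])


def shortest_path(start, goal):
    """Breadth-first search; returns one shortest path or None if unreachable."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbor in sorted(GRAPH[node]):  # sorted for a repeatable result
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


print(shared_connections("Ana", "Dara"))  # ['Ben', 'Chen']
print(shortest_path("Ana", "Eli"))        # ['Ana', 'Ben', 'Dara', 'Eli']
```

Breadth-first search explores the graph level by level, so the first path that reaches the goal is guaranteed to be among the shortest.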

Template-based Reasoning

Template-based reasoning suits frames and scripts because both rely on a structured, predefined shape for knowledge. Reasoning centers on matching incoming data against template structures and then triggering the actions or inferences attached to the matched template. As an example, consider a script describing a restaurant visit: template-based reasoning lets the system work out the expected sequence of events during the dining experience.
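Matching incoming data to a template can be sketched as checking whether all of a template's required cues appear in what was observed. The template names, cues, and expectations below are hypothetical and kept deliberately small.

```python
# Hypothetical templates: cues that must be present, and what to expect next.
TEMPLATES = {
    "restaurant_visit": {
        "required": {"menu", "waiter", "bill"},
        "expect": ["order", "eat", "pay"],
    },
    "movie_night": {
        "required": {"ticket", "screen"},
        "expect": ["find seat", "watch"],
    },
}


def match_templates(observed):
    """Return names of templates whose required cues all appear in `observed`."""
    seen = set(observed)
    return sorted(name for name, t in TEMPLATES.items()
                  if t["required"] <= seen)


matched = match_templates(["menu", "waiter", "bill", "table"])
print(matched)                          # ['restaurant_visit']
print(TEMPLATES[matched[0]]["expect"])  # ['order', 'eat', 'pay']
```

Once a template matches, the system gains the expectations attached to it, even though none of them appeared in the input.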

Probabilistic Reasoning

Probabilistic reasoning emerges as the natural choice for handling probabilistic representations, adeptly modeling uncertainty and calculating probabilities. This reasoning method is ideally suited for scenarios where uncertainty or probabilistic knowledge prevails. Within probabilistic representations, reasoning encompasses tasks such as updating probabilities when new evidence surfaces, executing Bayesian inference, and ascertaining the most probable outcomes given observed data. For instance, in a medical diagnosis system, probabilistic reasoning quantifies the likelihood of a particular disease based on observed symptoms and prior probability distributions.
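Updating a probability when new evidence arrives can be sketched with Bayes' rule for a single disease and a single symptom. All the numbers below are invented for illustration and are not clinical figures.

```python
def posterior(prior, p_symptom_given_disease, p_symptom_given_healthy):
    """Bayes' rule: P(disease | symptom) from a prior and two likelihoods."""
    evidence = (p_symptom_given_disease * prior
                + p_symptom_given_healthy * (1 - prior))
    return p_symptom_given_disease * prior / evidence


# Assumed values: 1% of patients have the disease; the symptom appears in
# 90% of patients with it and in 5% of patients without it.
p = posterior(prior=0.01,
              p_symptom_given_disease=0.9,
              p_symptom_given_healthy=0.05)
print(round(p, 3))  # 0.154
```

Even with a symptom that is common among patients with the disease, the posterior stays near 15% because the disease itself is rare. When further evidence arrives, this posterior can serve as the prior for the next update, provided the new evidence is conditionally independent of the earlier evidence given the disease status.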