Image Segmentation

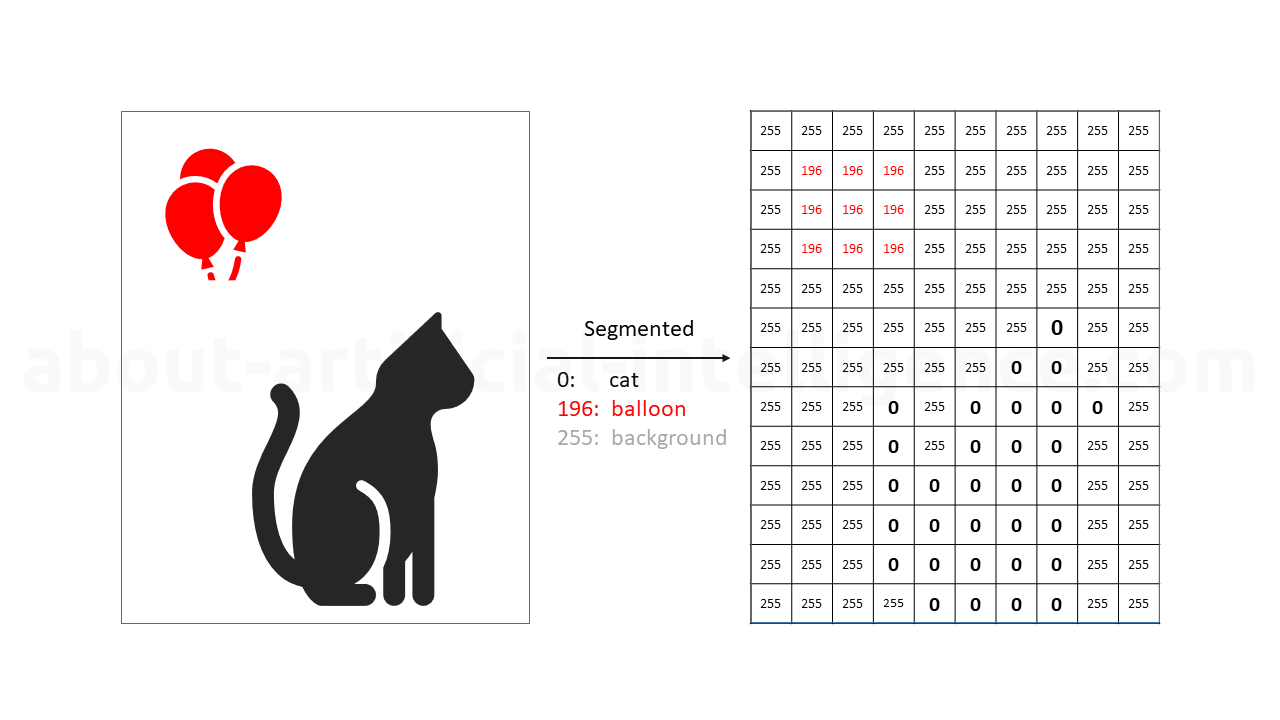

Image segmentation is the process of examining each pixel of an image and deciding whether it belongs to an object of interest. Segmentation thus groups related pixels together. The goal is to assign pixels to objects in a meaningful way, which requires dividing the image into regions: separating the objects from the background and delimiting the objects from each other.

When we talk about semantic segmentation, we are talking about understanding an image at the pixel level, that is, assigning a label or category to each pixel of an image. For example, if the image shows a woman riding a bicycle in front of a building on the street, each pixel would be labeled as belonging to the person, the bicycle, the building, or the street.

In the following picture, we see an example showing segmentation of a simple image:

@fig:image-segmentation

Semantic segmentation is beneficial for a wide range of tasks and applications. For example, self-driving vehicles need to perceive and understand their surroundings before they can be integrated safely into our existing road infrastructure. Another example is medical imaging, where the goal is to analyze and detect cancer-related anomalies in cells.

Semantic segmentation has become a fundamental research area in computer vision as the number of applications increases. Semantic segmentation algorithms can be classified into two categories based on the underlying feature extraction technique: classic feature-based classification methods and deep neural network-based methods, which have recently attracted a lot of attention because of their accuracy.

Thresholding

Thresholding is one of the simplest segmentation techniques. It involves selecting a threshold value and classifying each pixel in the image as either foreground or background based on whether its intensity is above or below the threshold. This approach works well for images with clear intensity differences between objects and background.

The threshold value is the critical parameter in this method and is usually determined by analyzing the histogram of the image, which represents the distribution of pixel intensities. Peaks in the histogram often correspond to different objects or to the background. By placing the threshold in the valley between two peaks, we can separate the objects from the background.
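One common way to pick a threshold from the histogram automatically is Otsu's method, which the text above does not name; it is used here only as an illustration. The sketch below assumes 8-bit integer intensities in a flat list, and the sample values are hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t that maximizes between-class variance.

    Pixels with intensity <= t form one class, pixels > t the other.
    """
    # Build the intensity histogram.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    total = len(pixels)
    total_sum = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0    # number of pixels in the lower class
    sum0 = 0  # sum of intensities in the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, no valid split here
        mean0 = sum0 / w0
        mean1 = (total_sum - sum0) / w1
        # Between-class variance, up to a constant factor.
        between_var = w0 * w1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


# Hypothetical bimodal data: dark pixels near 50, bright pixels near 200.
sample = [48, 50, 52, 49, 51, 198, 200, 202, 199, 201]
t = otsu_threshold(sample)
```

For this sample the returned threshold falls between the dark group and the bright group, which is the valley between the two histogram peaks.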

The process of thresholding is quite straightforward. For each pixel in the image, we compare its intensity value with the threshold. If the pixel's intensity is greater than the threshold, we classify it as a foreground pixel. Otherwise, it is classified as a background pixel. This results in a binary image where the foreground pixels are typically represented by white (intensity value of 255) and the background pixels by black (intensity value of 0).

Let's consider a simple example. Suppose we have a grayscale image of a dark letter 'A' on a white background. The pixel intensity values for the letter 'A' are around 50, and for the white background, they are around 200. Here the object of interest is darker than the background, so we apply the rule in the opposite direction (inverted thresholding): with a threshold value of 100, all the pixels with intensity values less than 100 (i.e., the letter 'A') are classified as foreground, and the rest as background. Thus, we can effectively segment the letter 'A' from the background.
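The letter example can be sketched in a few lines of plain Python. The function name `threshold`, its `invert` flag, and the small patch of intensities are illustrative assumptions rather than part of any particular library.

```python
def threshold(image, t, invert=False):
    """Return a binary image: 255 for foreground, 0 for background.

    By default pixels brighter than t are foreground; with invert=True,
    pixels darker than t are foreground instead.
    """
    result = []
    for row in image:
        out_row = []
        for value in row:
            is_foreground = value < t if invert else value > t
            out_row.append(255 if is_foreground else 0)
        result.append(out_row)
    return result


# Hypothetical 3x4 patch: dark stroke (about 50) on a light background (about 200).
patch = [
    [200, 52, 198, 201],
    [199, 49, 50, 200],
    [202, 51, 197, 199],
]
mask = threshold(patch, t=100, invert=True)
```

Because the stroke is darker than the background, `invert=True` is needed for the stroke to come out white (255) in the mask.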

However, it's important to note that thresholding is not always effective. It works best when there is a clear distinction in intensity values between the objects of interest and the background. In cases where this distinction is not clear, or where the lighting conditions vary across the image, thresholding may not yield satisfactory results. In such scenarios, more advanced segmentation techniques may be required.

Region Growing

Region growing is a region-based segmentation method. It starts with seed pixels and grows regions by adding neighboring pixels that have similar properties (e.g., intensity, color, texture) until a stopping criterion is met. It is effective for segmenting objects with homogeneous properties.

Region growing works at the level of individual pixels but produces regions, which is why it is classified as a region-based method. The primary idea behind this technique is to select a seed, or a starting point, and then examine the neighboring pixels. If these pixels are similar based on a predefined criterion, they are added to the region and the process continues. The region keeps growing until no more pixels can be added, which is determined by the stopping criterion.

The stopping criterion can be defined in various ways. It could be a predefined number of pixels, a certain statistical measure (like mean or variance), or a specific intensity value. The criterion is usually set based on the specific application and the nature of the images being processed.

The region growing method is particularly effective in situations where the objects of interest have homogeneous properties. For instance, in a grayscale image, a region of interest might be a group of pixels with similar intensity values. In a color image, a region might be defined by similar color values.

Let's consider a simple example to illustrate the concept of region growing. Suppose we have a grayscale image of a sky with clouds. We want to segment the image into two regions: sky and clouds. We could start by selecting a seed pixel in a cloud. This pixel has a certain intensity value. We then look at the neighboring pixels. If a neighboring pixel has an intensity value within a certain range of the seed pixel, we add it to the cloud region. We continue this process, adding pixels to the cloud region until no more neighboring pixels meet the criterion. We then select a new seed pixel and repeat the process to identify the sky region.
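The cloud example can be sketched as a breadth-first search from the seed. This is a minimal version under stated assumptions: 4-connectivity, a similarity test against the seed's intensity (rather than a running region mean), and a hypothetical 4x4 image.

```python
from collections import deque


def region_grow(image, seed, tolerance):
    """Grow a region from seed=(row, col) using 4-connectivity.

    A neighbor joins the region when its intensity is within `tolerance`
    of the seed pixel's intensity. Returns the set of (row, col) pairs.
    """
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    frontier = deque([seed])
    while frontier:
        r, c = frontier.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) not in region:
                if abs(image[nr][nc] - seed_value) <= tolerance:
                    region.add((nr, nc))
                    frontier.append((nr, nc))
    return region


# Hypothetical sky image: bright cloud (about 230) on darker sky (about 120).
sky = [
    [120, 118, 121, 119],
    [122, 231, 229, 120],
    [119, 228, 232, 121],
    [121, 120, 118, 122],
]
cloud = region_grow(sky, seed=(1, 1), tolerance=10)
```

Growth stops on its own when no neighbor passes the similarity test; calling the function again with a seed in the sky would produce the second region.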

In conclusion, region growing is a simple yet effective image segmentation method in computer vision. It is particularly useful when the objects of interest in an image have homogeneous properties. However, the success of this method heavily depends on the selection of the seed points and the definition of the stopping criterion.

Edge Detection

Edge-based segmentation methods detect boundaries or edges between different objects in an image. Techniques like the Canny edge detector identify abrupt changes in intensity or color, which often correspond to object boundaries.

Edge detection is a fundamental tool used in most image processing applications to obtain information from the frames as a precursor step to feature extraction and object segmentation. This technique is used to identify the edges or boundaries of objects within an image, which helps in distinguishing different objects from each other and from the background.

The principle behind edge detection is that local changes in an image often signify important events. These changes are abrupt variations in pixel intensity which characterize the boundaries of objects in a scene. Edge detection algorithms work by enhancing these changes and making the locations of the edges more apparent.
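One simple way to enhance abrupt intensity changes is to estimate the image gradient with Sobel kernels. The Sobel operator is not named in the text above and is used here only as an illustration; the test image is hypothetical.

```python
import math


def sobel_magnitude(image):
    """Return the gradient magnitude per pixel using 3x3 Sobel kernels.

    Border pixels are left at 0 because the kernel does not fit there.
    """
    rows, cols = len(image), len(image[0])
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # horizontal change
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # vertical change
    mag = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = gy = 0
            for i in range(3):
                for j in range(3):
                    v = image[r + i - 1][c + j - 1]
                    gx += kx[i][j] * v
                    gy += ky[i][j] * v
            mag[r][c] = math.hypot(gx, gy)
    return mag


# Hypothetical image: dark left half, bright right half (one vertical edge).
img = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
grad = sobel_magnitude(img)
```

The magnitude is zero inside the flat dark and bright areas and large only in the two columns next to the transition, which is where an edge detector would report the boundary.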

One of the most popular and effective edge detection algorithms is the Canny edge detector. Named after its creator, John F. Canny, it is a multi-stage algorithm. The first step is to smooth the image to reduce noise. This is followed by computing the image gradient to highlight regions with high spatial derivatives. The algorithm then tracks along these regions and suppresses any pixel that is not a local maximum along the gradient direction (non-maximum suppression), which thins the edges and removes potential false positives. Finally, hysteresis is applied to the remaining pixels using two thresholds, a low one and a high one. If the gradient magnitude is below the low threshold, the pixel is set to zero (made a non-edge). If the magnitude is above the high threshold, the pixel is made an edge. If the magnitude is between the two thresholds, the pixel is set to zero unless there is a path from it to a pixel whose gradient is above the high threshold.
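The hysteresis step alone can be sketched as follows. This is not a full Canny implementation: it assumes the gradient magnitudes have already been computed and thinned, treats values equal to a threshold as passing it, and uses 8-connectivity; the magnitude values are hypothetical.

```python
from collections import deque


def hysteresis(magnitude, low, high):
    """Return a boolean edge map from gradient magnitudes.

    Pixels >= high are strong edges. Pixels >= low are kept only if they
    are connected (8-neighborhood) to a strong edge through other pixels
    that are also >= low.
    """
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[False] * cols for _ in range(rows)]
    queue = deque()
    # Start from every strong pixel.
    for r in range(rows):
        for c in range(cols):
            if magnitude[r][c] >= high:
                edges[r][c] = True
                queue.append((r, c))
    # Spread to connected weak pixels.
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc] and magnitude[nr][nc] >= low):
                    edges[nr][nc] = True
                    queue.append((nr, nc))
    return edges


# Hypothetical magnitudes: the 60 next to 120 survives, the isolated 60 does not.
mag = [
    [0, 0, 0, 0, 0],
    [0, 120, 60, 0, 60],
    [0, 0, 0, 0, 0],
]
edge_map = hysteresis(mag, low=50, high=100)
```

The two pixels with magnitude 60 are treated differently because only one of them touches a strong edge, which is the purpose of using two thresholds.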

To illustrate this, let's consider a simple example. Suppose we have an image of a soccer ball on a green field. The ball is white and the field is green. The edge detection algorithm would identify the boundary of the ball by detecting the abrupt change in color from white (the ball) to green (the field). The result would be an image highlighting the circular boundary of the ball.

In conclusion, edge detection plays a crucial role in image segmentation and helps in the extraction of useful features from an image. It is a fundamental step in many computer vision tasks, including object detection and recognition, and scene understanding.

Clustering

Clustering algorithms like K-means or mean-shift can be applied to group pixels with similar properties into clusters. Each cluster represents a segment in the image. Clustering methods can be used for color-based or feature-based segmentation.

Clustering is a method of unsupervised learning and a common technique for statistical data analysis used in many fields, including machine learning, pattern recognition, image analysis, information retrieval, and bioinformatics. In the context of image segmentation, clustering is used to partition an image into multiple segments or 'clusters', where each cluster consists of pixels with similar attributes.

The primary goal of clustering in image segmentation is to categorize all pixels in the image into a set of clusters, where pixels in the same cluster have similar color or feature characteristics. This process helps to reduce the complexity of the image and makes it easier to analyze and understand.

One of the most popular clustering algorithms used in image segmentation is the K-means algorithm. K-means is a centroid-based algorithm, where the aim is to minimize the distance between the data points and the center of the cluster, also known as the centroid. The algorithm works by initializing 'K' centroids randomly, where 'K' is the number of clusters desired. Each pixel in the image is then assigned to the nearest centroid, and the centroid's position is recalculated based on the mean value of the assigned pixels. This process is repeated until the centroids no longer move or the assignments no longer change.

Let's consider a simple example of using K-means for image segmentation. Suppose we have an image of a green apple on a white background. The image is composed of thousands of pixels, each with different color intensities. If we want to segment the image into two parts - the apple and the background, we can use K-means clustering to achieve this. We set K=2, indicating that we want to divide the image into two clusters. The K-means algorithm will then assign each pixel in the image to one of the two clusters based on the color intensity of the pixel. After several iterations, the algorithm will converge, and we will have two clusters. One cluster will represent the green apple, and the other will represent the white background. Thus, the image is segmented into two parts using K-means clustering.
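The apple example can be sketched with a plain K-means loop over RGB tuples. The pixel values below are hypothetical, and the `seed` parameter is an assumption added to make the random initialization repeatable.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two color tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(pixels, k, iterations=20, seed=0):
    """Cluster RGB pixels with plain K-means; returns (labels, centroids)."""
    rng = random.Random(seed)
    # Initialize centroids with k pixels drawn at random from the data.
    centroids = [tuple(float(ch) for ch in p) for p in rng.sample(pixels, k)]
    labels = None
    for _ in range(iterations):
        # Assignment step: each pixel goes to its nearest centroid.
        new_labels = [
            min(range(k), key=lambda j: sq_dist(p, centroids[j]))
            for p in pixels
        ]
        if new_labels == labels:
            break  # assignments no longer change
        labels = new_labels
        # Update step: each centroid becomes the mean of its pixels.
        for j in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == j]
            if members:  # keep the old centroid if a cluster is empty
                centroids[j] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return labels, centroids


# Hypothetical pixels: three greenish (apple) and three whitish (background).
pixels = [
    (30, 160, 40), (35, 170, 45), (28, 155, 38),
    (250, 250, 250), (245, 248, 252), (255, 255, 255),
]
labels, centroids = kmeans(pixels, k=2)
```

Which cluster ends up with index 0 depends on the initialization, so only the grouping is meaningful, not the label numbers themselves.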

In conclusion, clustering is a powerful tool in image segmentation, providing a simple yet effective method to divide an image into multiple segments based on pixel characteristics. It is widely used in various applications, including object recognition, image compression, and image editing.

Graph-Based Segmentation

Graph-based segmentation methods represent an image as a graph, where pixels are nodes, and edges represent relationships between pixels. Algorithms like the Minimum Spanning Tree (MST) or Normalized Cuts partition the graph into segments by optimizing certain criteria, such as minimizing the similarity between different segments and maximizing the similarity within each segment.

In graph-based segmentation, the image is viewed as a weighted, undirected graph. Each pixel in the image corresponds to a node in the graph, and each edge connects two neighboring pixels. The weight of an edge is typically defined as a function of the difference in intensity, color, or texture between the two pixels it connects. The goal of graph-based segmentation is to partition the graph into several sub-graphs, each representing a segment of the image.

One of the most popular graph-based segmentation methods is the Minimum Spanning Tree (MST) approach. The MST of a graph is a tree that spans all the nodes in the graph and has the minimum possible total edge weight. In the context of image segmentation, the MST approach starts by constructing the MST of the graph representing the image. Then, it removes the edges with the highest weights, resulting in several disconnected sub-graphs, each representing a segment of the image. The intuition behind this approach is that the edges with the highest weights connect pixels that are very different and therefore likely belong to different segments.

Another popular graph-based segmentation method is the Normalized Cuts approach. Here the edge weights measure similarity between pixels rather than difference. The method aims to partition the graph into sub-graphs such that the total weight of the edges connecting different sub-graphs is small, and the total weight of the edges within each sub-graph is large. It does so by minimizing the normalized cut cost, which divides the weight of the cut edges by the total edge weight attached to each segment, so that splitting off a small, isolated group of pixels is penalized.
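For a split into two parts A and B, the normalized cut cost is cut(A, B) / assoc(A, V) + cut(A, B) / assoc(B, V), where cut is the total weight of edges between the parts and assoc(A, V) is the total weight of all edges attached to nodes in A. The sketch below only evaluates this cost for a given split; it does not search for the best one. It assumes weights that measure similarity, and the four-node chain is hypothetical.

```python
def ncut_cost(edges, part_a):
    """Normalized cut cost of splitting the nodes into part_a and the rest.

    `edges` maps (u, v) pairs to a similarity weight (undirected graph).
    Assumes both sides of the split have at least one weighted edge.
    """
    part_a = set(part_a)
    cut = assoc_a = assoc_b = 0.0
    for (u, v), w in edges.items():
        # Each endpoint contributes the edge weight to its own side's total.
        for node in (u, v):
            if node in part_a:
                assoc_a += w
            else:
                assoc_b += w
        # An edge is cut when its endpoints fall on different sides.
        if (u in part_a) != (v in part_a):
            cut += w
    return cut / assoc_a + cut / assoc_b


# Hypothetical chain 0 - 1 - 2 - 3 with one weak link in the middle.
chain = {(0, 1): 0.9, (1, 2): 0.1, (2, 3): 0.9}
balanced = ncut_cost(chain, {0, 1})   # cuts the weak middle link
lopsided = ncut_cost(chain, {0})      # isolates a single node
```

Cutting the weak middle link gives a much lower cost than isolating a single node, which is the behavior the normalization is meant to produce.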

Let's consider a simple example. Suppose we have a grayscale image with 9 pixels arranged in a 3x3 grid. The intensity of each pixel is represented by a number from 0 (black) to 255 (white). The image looks like this:

We can represent this image as a graph with 9 nodes and 12 edges (each pixel is connected to its horizontal and vertical neighbors, which gives 6 horizontal and 6 vertical edges in a 3x3 grid). The weight of each edge is the absolute difference in intensity between the two pixels it connects. For example, the edge connecting the pixel with intensity 50 and the pixel with intensity 100 has a weight of 50.

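If we apply the MST approach to this graph, we start by constructing the MST. A spanning tree on 9 nodes has exactly 8 edges, so the MST keeps only a subset of the grid's edges; when all the edges have the same weight, every spanning tree is a minimum spanning tree and the choice among them is arbitrary. Then, we remove the edges with the highest weights. Since all the edges have the same weight, we can remove any edge. If the tree contains the 4 edges that connect the center pixel to its neighbors and we remove them, we end up with 5 segments: the center pixel on its own and four groups, each containing one of its former neighbors. Equal weights therefore give the method nothing to work with; it becomes informative only when the edge weights differ across the image.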
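The MST approach can be sketched with Kruskal's algorithm and a small union-find structure. The 3x3 intensities below are hypothetical and were chosen so that the edge weights differ; they are not the grid from the example above.

```python
def grid_edges(image):
    """4-connected grid graph as a list of (weight, node_u, node_v)."""
    rows, cols = len(image), len(image[0])
    edges = []
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:
                edges.append((abs(image[r][c] - image[r][c + 1]), (r, c), (r, c + 1)))
            if r + 1 < rows:
                edges.append((abs(image[r][c] - image[r + 1][c]), (r, c), (r + 1, c)))
    return edges


def find(parent, x):
    """Union-find root lookup with path halving."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x


def mst_segments(image, num_segments):
    """Build the MST with Kruskal, then drop its heaviest edges."""
    nodes = [(r, c) for r in range(len(image)) for c in range(len(image[0]))]
    parent = {n: n for n in nodes}
    mst = []
    for w, u, v in sorted(grid_edges(image)):
        ru, rv = find(parent, u), find(parent, v)
        if ru != rv:  # the edge joins two different trees, so keep it
            parent[ru] = rv
            mst.append((w, u, v))
    # Removing k-1 edges from a tree leaves k connected components.
    kept = sorted(mst)[: len(mst) - (num_segments - 1)]
    parent = {n: n for n in nodes}
    for _, u, v in kept:
        parent[find(parent, u)] = find(parent, v)
    return {n: find(parent, n) for n in nodes}


# Hypothetical image: two dark columns on the left, one bright column on the right.
image = [
    [10, 12, 200],
    [11, 13, 205],
    [9, 14, 198],
]
segments = mst_segments(image, num_segments=2)
```

With these values the single heaviest MST edge is the one bridging the dark and bright columns, so removing it separates the image into the left block and the right column.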

If we apply the Normalized Cuts approach to this graph, we again look for a partition in which the total weight of the edges connecting different sub-graphs is small relative to the total weight of the edges attached to each sub-graph. With equal weights on every edge, the weights give no evidence for any particular boundary, and because the normalized cut cost penalizes splitting off very small groups, the method tends toward balanced partitions rather than isolating single pixels. Its result therefore need not coincide with the result of the MST approach.

Active Contours (Snakes)

Active contours, also known as snakes, are flexible curves that can deform to fit object boundaries. They are iteratively adjusted to minimize an energy function that combines image-based and contour-based terms. Snakes are useful for object boundary delineation.

Active contours or snakes are a method of image segmentation that is particularly effective in situations where the object to be segmented is somewhat isolated from the rest of the image by a clear, nearly unbroken boundary. The term "snake" derives from the way these algorithms work, which is reminiscent of a snake moving by continuously curving and flexing its body.

The fundamental idea behind active contours is the minimization of energy functions. The energy function is typically composed of two main components: the internal energy and the external energy. The internal energy is related to the contour's shape, such as its smoothness or length, and it controls the tension and rigidity of the snake. The external energy is derived from the image data and drives the snake towards the object boundaries.

The process of active contour modeling can be visualized as a rubber band stretched around an object, which contracts to fit tightly around the object. The rubber band represents the active contour, and the force that makes it contract is computed from the image data.

Let's consider a simple example. Suppose we have an image of a circular object against a uniform background, and we want to delineate the boundary of this object. We could initialize a snake as a circle slightly larger than the object and enclosing it, like the rubber band described above. The internal energy makes the snake contract and prevents it from bending too much, while the external energy derived from the image attracts it to the object boundary. As we iteratively update the snake by minimizing the energy function, it will gradually deform and move towards the object boundary, until it finally settles on or close to the boundary.

In practice, the energy minimization is usually achieved using optimization techniques such as gradient descent. The snake is represented as a set of points, and the positions of these points are iteratively updated to reduce the energy.
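The point-based gradient descent described above can be sketched on a toy problem. The external energy here is a synthetic stand-in, namely the squared distance of each point from a circle of known center and radius; a real snake would derive this term from image gradients. The function name, parameters, and values are all illustrative assumptions.

```python
import math


def evolve_snake(points, center, radius, alpha=0.5, step=0.1, iterations=200):
    """Gradient descent on a toy snake energy.

    Internal energy: alpha * sum of squared distances between neighbors.
    External energy (synthetic): squared distance of each point from a
    circle with the given center and radius.
    """
    pts = [list(p) for p in points]
    n = len(pts)
    for _ in range(iterations):
        new_pts = []
        for i, (x, y) in enumerate(pts):
            px, py = pts[i - 1]        # previous point (wraps around)
            nx, ny = pts[(i + 1) % n]  # next point (wraps around)
            # Gradient of the internal (elastic) term.
            gx = 2 * alpha * (2 * x - px - nx)
            gy = 2 * alpha * (2 * y - py - ny)
            # Gradient of the synthetic external term (d - radius)^2.
            dx, dy = x - center[0], y - center[1]
            d = math.hypot(dx, dy) or 1e-9
            gx += 2 * (d - radius) * dx / d
            gy += 2 * (d - radius) * dy / d
            new_pts.append([x - step * gx, y - step * gy])
        pts = new_pts
    return pts


# Start on a circle of radius 20 around an object of radius 10.
start = [(20 * math.cos(2 * math.pi * i / 20), 20 * math.sin(2 * math.pi * i / 20))
         for i in range(20)]
final = evolve_snake(start, center=(0.0, 0.0), radius=10.0)
mean_radius = sum(math.hypot(x, y) for x, y in final) / len(final)
```

The contour shrinks from the initial radius toward the object and settles slightly inside it, because the elastic term keeps pulling the points together; a smaller `alpha` reduces that bias.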

Active contours have been widely used in various applications, such as object tracking in video sequences, medical image analysis, and shape recognition. However, they also have some limitations. For example, they often require manual initialization and may not perform well when the object boundary is not well defined or when there is noise in the image. Despite these challenges, active contours remain a powerful tool in the field of image segmentation.

Deep Learning

Deep learning techniques, particularly Convolutional Neural Networks (CNNs), have revolutionized image segmentation in recent years. Fully Convolutional Networks (FCNs), U-Net, and Mask R-CNN are popular architectures for image segmentation tasks. These models learn to map input images to pixel-wise segmentations, making them highly effective for a wide range of segmentation challenges.

Deep learning is a subset of machine learning, which in turn is a subset of artificial intelligence. It is called 'deep' learning because it makes use of deep neural networks – models inspired by the human brain, with many layers of artificial neurons. These layers enable the model to learn complex patterns from large amounts of data.

In the context of image segmentation, deep learning models can learn to identify and separate different objects within an image. This is a significant advancement over traditional image processing techniques, which often require manual feature extraction and are less effective at handling complex scenes.

Convolutional Neural Networks (CNNs) are a type of deep learning model that is especially good at processing images. They are designed to automatically and adaptively learn spatial hierarchies of features from the input image. CNNs have been successful at identifying faces, objects, and traffic signs, and they power vision in robots and self-driving cars.

For instance, let's consider a simple example of image segmentation using deep learning. Suppose we have an image of a park, and we want our model to identify and separate different elements in the image, such as trees, people, and benches. We would train a deep learning model (like a CNN) on a large dataset of park images, where each pixel of each image has been labeled as 'tree', 'person', 'bench', or 'background'.

The model would learn to recognize the visual features associated with each of these categories. Then, when presented with a new park image, the model would be able to predict the category of each pixel, effectively segmenting the image into trees, people, benches, and background. This is a simplified example, but it gives a sense of how deep learning can be used for image segmentation.
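Training a network is beyond a short example, but the final prediction step is simple: a segmentation network typically outputs one score per class for every pixel, and the predicted label is the class with the highest score. The sketch below assumes such scores are already available; the class list and the score values are hypothetical.

```python
CLASSES = ["background", "tree", "person", "bench"]


def scores_to_labels(scores):
    """Turn per-pixel class scores into a map of class names.

    scores[r][c] is a list with one score per entry in CLASSES.
    """
    return [
        [CLASSES[max(range(len(px)), key=px.__getitem__)] for px in row]
        for row in scores
    ]


# Hypothetical network output for a 1x3 image.
scores = [[
    [0.1, 0.7, 0.1, 0.1],
    [0.2, 0.1, 0.6, 0.1],
    [0.9, 0.0, 0.05, 0.05],
]]
label_map = scores_to_labels(scores)
```

Each pixel is classified independently at this stage; the spatial context comes from the network that produced the scores.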

In conclusion, deep learning has brought about significant advancements in image segmentation, enabling more accurate and automated analysis of images. As the field continues to evolve, we can expect even more sophisticated techniques and applications to emerge.

Semantic Segmentation

Semantic segmentation is a variant of image segmentation where each pixel is assigned a class label representing the object or category it belongs to. This is commonly used in tasks like scene understanding and autonomous driving.

Semantic segmentation is a crucial step in the process of image understanding, as it allows machines to perceive images in a manner similar to human vision. It is a pixel-wise classification technique that assigns a specific label to every pixel in the image, thereby dividing the image into several segments that share common characteristics. These segments represent different objects or parts of objects, and each segment is associated with a specific class or category.

The primary goal of semantic segmentation is to simplify and/or change the representation of an image into something that is more meaningful and easier to analyze. It is a high-level task that models the entire scene, providing a comprehensive understanding of the image's context.

For instance, consider an image of a park. A semantic segmentation model would not only identify and separate the different objects in the image, such as trees, benches, people, and grass, but it would also assign each pixel of these objects to their respective classes. This means that every pixel belonging to a tree would be labeled as 'tree', every pixel belonging to a bench would be labeled as 'bench', and so on.

This process is particularly useful in applications like autonomous driving, where understanding the context of the scene is crucial for safe navigation. For example, an autonomous vehicle equipped with a camera and a semantic segmentation model can identify the road, other vehicles, pedestrians, and traffic signs in real-time. This information can then be used to make decisions, such as when to stop, accelerate, or turn.

Semantic segmentation, however, is not without its challenges. The main challenge lies in accurately classifying each pixel, especially in complex scenes with multiple overlapping objects, varying lighting conditions, and different perspectives. Despite these challenges, advancements in deep learning and convolutional neural networks have significantly improved the performance of semantic segmentation models, making them an integral part of many computer vision systems.

Instance Segmentation

Instance segmentation extends semantic segmentation by distinguishing between individual instances of the same class. It assigns a unique label to each distinct object instance, allowing for precise object localization and tracking.

In semantic segmentation, all the pixels belonging to a particular class are labeled with the same identifier. For instance, in an image containing multiple cars, semantic segmentation would label all the cars as 'car', making it impossible to distinguish between individual cars. However, instance segmentation takes this a step further. It not only identifies the pixels that belong to a 'car', but also differentiates between 'car1', 'car2', 'car3', and so on. This is particularly useful in scenarios where we need to track individual objects over time or across different frames.
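The difference between the two kinds of output can be illustrated by splitting a semantic mask into connected components. This is only an illustration of the label formats: it works when objects of the same class do not touch, and it is not how learned instance segmentation models such as Mask R-CNN operate. The mask is hypothetical.

```python
from collections import deque


def label_instances(mask):
    """Give each 4-connected blob of foreground pixels its own id.

    Returns (labels, count): labels holds 0 for background and
    1, 2, 3, ... for the individual instances.
    """
    rows, cols = len(mask), len(mask[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and labels[r][c] == 0:
                count += 1  # found a pixel of a new, unlabeled blob
                labels[r][c] = count
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and labels[ny][nx] == 0):
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count


# Hypothetical semantic mask: 1 means 'car', 0 means anything else.
car_mask = [
    [1, 1, 0, 0, 1],
    [1, 1, 0, 0, 1],
    [0, 0, 0, 0, 0],
]
instances, count = label_instances(car_mask)
```

The semantic mask says only where cars are, while the instance map separates the pixels into 'car1' and 'car2'.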

To illustrate this, let's consider a simple example. Imagine a busy street scene with several pedestrians. In this scenario, semantic segmentation would label all the pedestrians as 'person', making it impossible to track the movement of a specific individual across the street. However, with instance segmentation, each pedestrian would be assigned a unique identifier. This would allow us to track the path of each individual separately, even if they are of the same class (i.e., 'person').

Instance segmentation is a more complex task than semantic segmentation as it requires the model to differentiate between objects of the same class. This is typically achieved by training the model on a large dataset of annotated images, where each object instance has been manually outlined and labeled separately. The model learns to separate neighboring objects of the same class and uses this knowledge to assign a distinct label to each object instance in unseen images.

In conclusion, instance segmentation plays a crucial role in many computer vision applications, including autonomous driving, video surveillance, and robotics, where it is essential to track individual objects over time. By assigning a unique label to each object instance, instance segmentation allows for precise object localization and tracking, thereby enabling more sophisticated and accurate object interaction and behavior analysis.

Hybrid Approaches

Some segmentation tasks benefit from combining multiple approaches. For instance, region-based and edge-based methods can be combined, or a pre-processing technique like superpixel generation can be followed by traditional segmentation algorithms.

Hybrid approaches in image segmentation are a blend of different techniques that aim to leverage the strengths of each method while mitigating their weaknesses. These approaches are particularly useful in complex scenarios where a single method may not provide satisfactory results.

One common hybrid approach is the combination of region-based and edge-based methods. Region-based methods work by grouping pixels or sub-regions based on certain attributes such as color or texture. Edge-based methods, on the other hand, focus on identifying abrupt changes in pixel intensity, which usually correspond to object boundaries. By combining these two methods, we can achieve a more comprehensive segmentation that captures both the homogeneity within regions and the distinct boundaries between different objects.

Another popular hybrid approach involves the use of pre-processing techniques like superpixel generation followed by traditional segmentation algorithms. Superpixels are clusters of pixels that share similar attributes and are perceived as a coherent entity. They serve as a more meaningful primitive than individual pixels and can significantly reduce the computational complexity of subsequent segmentation tasks. Once the image is divided into superpixels, traditional segmentation algorithms like watershed or graph cuts can be applied to further partition the image.

Let's consider a simple example of hybrid image segmentation in the context of autonomous driving. In this scenario, we need to segment an image into different regions representing the road, vehicles, pedestrians, and other elements in the scene. A single method may not be sufficient due to the complexity and variability of the scene.

We could start with a superpixel generation step to divide the image into coherent clusters. This would help to reduce the complexity of the image and provide a more meaningful representation for the subsequent steps. Next, we could apply a region-based method to group the superpixels based on their color or texture, which could help to distinguish the road from the vehicles and pedestrians. Finally, an edge-based method could be used to refine the boundaries of the segmented regions and ensure a more accurate delineation of the different objects in the scene.

In conclusion, hybrid approaches offer a flexible and powerful tool for image segmentation. By combining different methods and techniques, they can handle a wide range of scenarios and deliver more accurate and robust results.