Storage

Volumes

Docker also has a concept of volumes, though it is looser and less managed than in Kubernetes. A Docker volume is a directory on disk or in another container. Docker provides volume drivers, but their functionality is somewhat limited.

In Kubernetes, a volume is just a directory, possibly with some data in it, that is accessible to the containers in a Pod.

To use a volume, a Pod specifies:

* what volumes to provide for the Pod, in .spec.volumes

* where to mount those volumes into containers, in .spec.containers[*].volumeMounts

A volume can be one of several types, including:

- hostPath

- awsElasticBlockStore

- gcePersistentDisk

- azureDisk

- azureFile

- gitRepo

- glusterfs

- local

hostPath

A hostPath volume mounts a file or directory from the host node's filesystem into your Pod.

Example of a hostPath volume in a Pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-app
      labels:
        run: my-app
    spec:
      containers:
      - name: ..
        image: …
        volumeMounts:
        - mountPath: "/path/in/container"
          name: app-volume
      volumes:
      - name: app-volume
        hostPath:
          # directory location on host
          path: /storage/path
          # this field is optional
          type: Directory

awsElasticBlockStore

An awsElasticBlockStore volume mounts an Amazon Web Services (AWS) EBS volume into your pod.

Note: You must create an EBS volume by using aws ec2 create-volume or the AWS API before you can use it.

There are some restrictions when using an awsElasticBlockStore volume:

- the nodes on which pods are running must be AWS EC2 instances

- those instances need to be in the same region and availability zone as the EBS volume

- EBS only supports a single EC2 instance mounting a volume

Before you can use an EBS volume with a pod, you need to create it.

Example of an awsElasticBlockStore volume in a Pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: test-pd
    spec:
      containers:
      - name: ..
        image: …
        volumeMounts:
        - mountPath: "/path/in/container"
          name: aws-app-volume
      volumes:
      - name: aws-app-volume
        # This AWS EBS volume must already exist.
        awsElasticBlockStore:
          volumeID: "<volume id>"
          fsType: ext4

gcePersistentDisk

A gcePersistentDisk volume mounts a Google Compute Engine (GCE) persistent disk (PD) into your Pod.

Note: You must create a PD using gcloud or the GCE API or UI before you can use it.

There are some restrictions when using a gcePersistentDisk:

- the nodes on which Pods are running must be GCE VMs

- those VMs need to be in the same GCE project and zone as the persistent disk

Before you can use a GCE persistent disk with a Pod, you need to create it.

Example of a gcePersistentDisk volume in a Pod:

    apiVersion: v1
    kind: Pod
    metadata:
      name: test-pd
    spec:
      containers:
      - name: ..
        image: …
        volumeMounts:
        - mountPath: "/path/in/container"
          name: gce-app-volume
      volumes:
      - name: gce-app-volume
        # This GCE PD must already exist.
        gcePersistentDisk:
          pdName: my-data-disk
          fsType: ext4

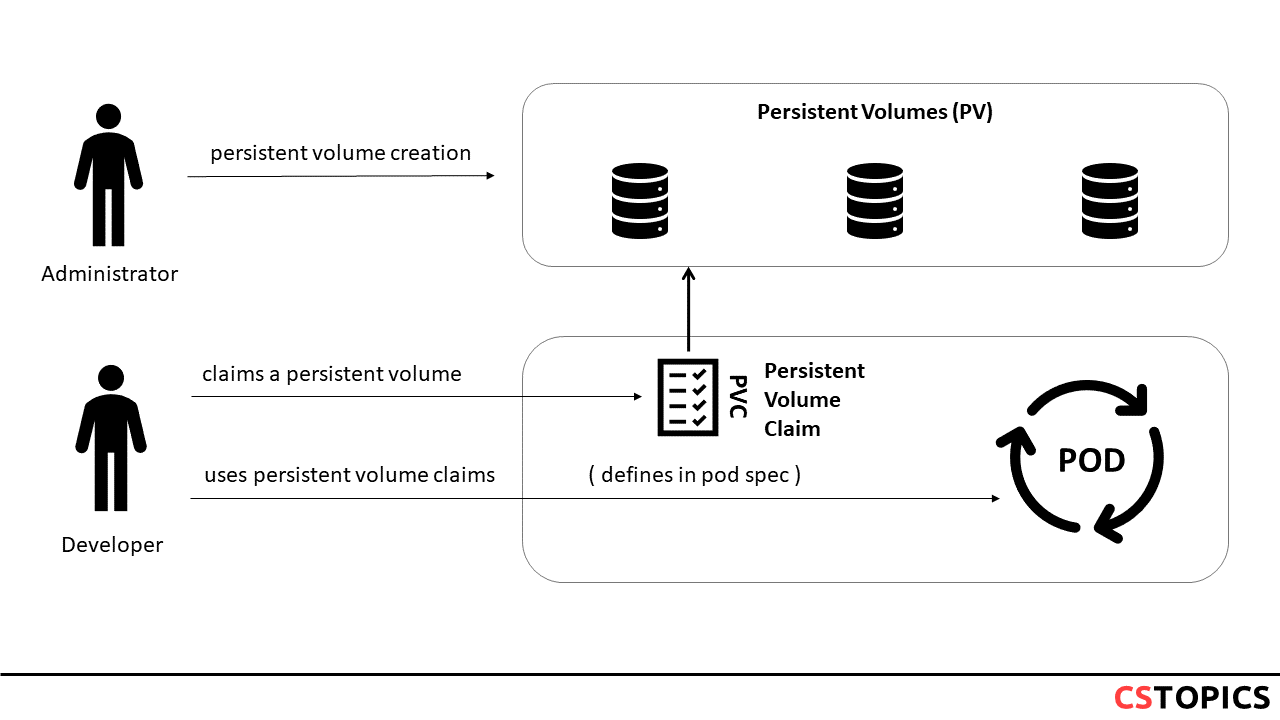

Persistent Volumes

Managing storage is a distinct problem from managing compute instances. The PersistentVolume subsystem provides an API for users and administrators that abstracts details of how storage is provided from how it is consumed. To do this, Kubernetes provides two API resources: PersistentVolume and PersistentVolumeClaim.

A PersistentVolume (PV) is a piece of storage in the cluster that has been provisioned by an administrator or dynamically provisioned using Storage Classes.

A PersistentVolumeClaim (PVC) is a request for storage by a user. It is similar to a Pod: Pods consume node resources and PVCs consume PV resources. Pods can request specific levels of resources (CPU and memory); claims can request a specific size and specific access modes.

The access modes are:

- ReadWriteOnce -- the volume can be mounted as read-write by a single node

- ReadOnlyMany -- the volume can be mounted read-only by many nodes

- ReadWriteMany -- the volume can be mounted as read-write by many nodes
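For example, a claim that requests a specific size and one of the access modes above could look like the sketch below. The claim name my-claim and the 5Gi size are hypothetical values chosen for illustration.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-claim            # hypothetical claim name
spec:
  accessModes:
  - ReadWriteOnce           # read-write by a single node
  resources:
    requests:
      storage: 5Gi          # hypothetical size; a PV must offer at least this much
```

Because storageClassName is omitted here, the cluster's default StorageClass, if one is configured, is applied to the claim.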

Each PV contains a spec and status, which is the specification and status of the volume.

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: foo-pv
    spec:
      storageClassName: ""
      claimRef:
        name: foo-pvc
        namespace: foo
      capacity:
        storage: 50Gi
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Recycle
      ...

Class

A PV can have a class, which is specified by setting the storageClassName attribute to the name of a StorageClass. A PV of a particular class can only be bound to PVCs requesting that class. A PV with no storageClassName has no class and can only be bound to PVCs that request no particular class.
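As a sketch of class matching, the PV and PVC below share the class name manual, so the claim can only bind to PVs of that class. All names, sizes and the /mnt/data host path are hypothetical; hostPath is used only because it needs no cloud provider, and it is suitable only for single-node test clusters.

```yaml
# Hypothetical PV in class "manual"
apiVersion: v1
kind: PersistentVolume
metadata:
  name: manual-pv
spec:
  storageClassName: manual
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain   # keep the data after the claim is deleted
  hostPath:
    path: /mnt/data                       # hypothetical directory on the node
---
# Hypothetical claim that can only bind to PVs of class "manual"
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: manual-pvc
spec:
  storageClassName: manual
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi                        # satisfied by the 10Gi PV above
```

For statically created PVs like this one, storageClassName acts as a matching label, so no StorageClass object named manual has to exist for the binding to happen.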

Reclaim Policy

Current reclaim policies are:

- Retain -- manual reclamation

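- Recycle -- basic scrub ("rm -rf /thevolume/*"); deprecated, and supported only by NFS and hostPath volumes (dynamic provisioning is the recommended alternative)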

- Delete -- associated storage asset such as AWS EBS, GCE PD, Azure Disk, or OpenStack Cinder volume is deleted

Phase

A volume will be in one of the following phases:

- Available -- a free resource that is not yet bound to a claim

- Bound -- the volume is bound to a claim

- Released -- the claim has been deleted, but the resource is not yet reclaimed by the cluster

- Failed -- the volume has failed its automatic reclamation

PersistentVolumeClaims

Each PVC contains a spec and status, which is the specification and status of the claim.

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: foo-pvc
      namespace: foo
    spec:
      storageClassName: "" # Empty string must be set explicitly; otherwise the default StorageClass is used
      volumeName: foo-pv
      ...

Claims As Volumes

Pods access storage by using the claim as a volume. Claims must exist in the same namespace as the Pod using the claim. The cluster finds the claim in the Pod's namespace and uses it to get the PersistentVolume backing the claim. The volume is then mounted to the host and into the Pod.

    kind: Pod
    apiVersion: v1
    metadata:
      name: mypod
      namespace: foo  # must match the namespace of the claim foo-pvc
    spec:
      containers:
      - name: myfrontend
        image: nginx
        volumeMounts:
        - mountPath: "/var/www/html"
          name: mypd
      volumes:
      - name: mypd
        persistentVolumeClaim:
          claimName: foo-pvc

ConfigMaps

A ConfigMap is an API object used to store non-confidential data in key-value pairs. Pods can consume ConfigMaps as environment variables, command-line arguments, or as configuration files in a volume.

A ConfigMap allows you to decouple environment-specific configuration from your container images, so that your applications are easily portable.

There are four different ways that you can use a ConfigMap to configure a container inside a Pod:

- Inside a container command and args

- Environment variables for a container

- Add a file in a read-only volume, for the application to read

- Write code to run inside the Pod that uses the Kubernetes API to read a ConfigMap

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: game-demo
    data:
      # property-like keys; each key maps to a simple value
      player_initial_lives: "3"
      ui_properties_file_name: "user-interface.properties"
      # file-like keys
      game.properties: |
        enemy.types=aliens,monsters
        player.maximum-lives=5
      user-interface.properties: |
        color.good=purple
        color.bad=yellow
        allow.textmode=true
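To make the consumption methods concrete, the sketch below shows a Pod that reads the game-demo ConfigMap in two of the ways listed earlier: single keys as environment variables, and file-like keys as files in a read-only volume. The Pod name configmap-demo-pod, the alpine image and the /config mount path are hypothetical choices.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: configmap-demo-pod        # hypothetical Pod name
spec:
  containers:
  - name: demo
    image: alpine                 # hypothetical image
    command: ["sleep", "3600"]
    env:
    # Each variable is taken from one key of the ConfigMap
    - name: PLAYER_INITIAL_LIVES
      valueFrom:
        configMapKeyRef:
          name: game-demo
          key: player_initial_lives
    - name: UI_PROPERTIES_FILE_NAME
      valueFrom:
        configMapKeyRef:
          name: game-demo
          key: ui_properties_file_name
    volumeMounts:
    - name: config
      mountPath: "/config"        # hypothetical mount path
      readOnly: true
  volumes:
  # Only the keys listed under items become files in the volume
  - name: config
    configMap:
      name: game-demo
      items:
      - key: "game.properties"
        path: "game.properties"
      - key: "user-interface.properties"
        path: "user-interface.properties"
```

Inside the container, the two file-like keys would appear as /config/game.properties and /config/user-interface.properties.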

Secret

A Secret is an object that contains a small amount of sensitive data such as a password, a token, or a key.

Secrets are much like ConfigMaps (both hold key-value pairs):

* Use ConfigMaps for non-sensitive data.

* Use Secrets for sensitive data.

Users can create secrets, and the system also creates some secrets.

To use a secret, a pod needs to reference the secret.

A secret can be used with a pod in three ways:

* As files in a volume mounted on one or more of its containers.

* As container environment variables.

* By the kubelet when pulling images for the Pod.
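The second and third uses above can be sketched as follows. This assumes a Secret named my-secret with a key api-key, and a registry credential Secret named my-registry-cred; these are hypothetical names, and both Secrets would have to exist in the Pod's namespace.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: secret-env-pod            # hypothetical Pod name
spec:
  containers:
  - name: app
    image: busybox                # hypothetical image
    command: ["sh", "-c", "sleep 3600"]
    env:
    # Expose one key of a Secret as an environment variable
    - name: API_KEY
      valueFrom:
        secretKeyRef:
          name: my-secret         # hypothetical Secret name
          key: api-key            # hypothetical key inside that Secret
  # Credentials the kubelet uses when pulling this Pod's images
  imagePullSecrets:
  - name: my-registry-cred        # hypothetical kubernetes.io/dockerconfigjson Secret
```

If the Secret referenced by secretKeyRef does not exist, the container cannot start, so it is usually created before the Pod.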

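Service accounts automatically provide Pods with API credentials. Older Kubernetes releases did this by creating and attaching a Secret of type kubernetes.io/service-account-token; since v1.24 such Secrets are no longer generated automatically, and Pods receive short-lived tokens through a projected volume instead.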

Types of Secret

When creating a Secret, you can specify its type using the type field of a Secret resource, or certain equivalent kubectl command line flags (if available). The type of a Secret is used to facilitate programmatic handling of different kinds of confidential data.

| Built-in type | Usage |
|---|---|
| Opaque | arbitrary user-defined data |
| kubernetes.io/service-account-token | service account token |
| kubernetes.io/dockercfg | serialized ~/.dockercfg file |
| kubernetes.io/dockerconfigjson | serialized ~/.docker/config.json file |
| kubernetes.io/basic-auth | credentials for basic authentication |
| kubernetes.io/ssh-auth | credentials for SSH authentication |
| kubernetes.io/tls | data for a TLS client or server |
| bootstrap.kubernetes.io/token | bootstrap token data |
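As an example of a typed Secret, a kubernetes.io/basic-auth Secret can be written with stringData, which accepts plain-text values; when the Secret is read back, the values appear base64-encoded under data. The Secret name and credentials below are hypothetical.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: my-basic-auth             # hypothetical Secret name
type: kubernetes.io/basic-auth
stringData:
  # basic-auth expects at least one of the keys username and password
  username: admin                 # hypothetical value
  password: t0p-Secret            # hypothetical value
```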

Exercise

- $ echo -n "QAYXSWEDC" > ./apikey.txt

- $ kubectl create secret generic apikey --from-file=./apikey.txt

- $ kubectl describe secrets/apikey

- $ kubectl apply -f pod.yaml

where pod.yaml contains:

    apiVersion: v1
    kind: Pod
    metadata:
      name: consumesec
    spec:
      containers:
      - name: shell
        image: centos:7
        command:
        - "/bin/bash"
        - "-c"
        - "sleep 10000"
        volumeMounts:
        - name: apikeyvol
          mountPath: "/tmp/apikey"
          readOnly: true
      volumes:
      - name: apikeyvol
        secret:
          secretName: apikey

- $ kubectl exec -it consumesec -c shell -- bash

- $ cat /tmp/apikey/apikey.txt (run inside the container shell)

- $ kubectl delete pod/consumesec secret/apikey
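The kubectl create secret generic step could also be expressed declaratively, as sketched below. The key name apikey.txt comes from the file name passed to --from-file, and the value is the base64 encoding of QAYXSWEDC with no trailing newline (because of echo -n). The manifest would need to be applied before pod.yaml.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: apikey
type: Opaque                      # the type kubectl create secret generic uses
data:
  apikey.txt: UUFZWFNXRURD        # base64 of "QAYXSWEDC"
```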